News

‘Ludicrous+’ Tesla Model S P100D could see 0-60 in 2.1 sec., when ‘stripped down’

Following yesterday’s announcement that a new ‘Ludicrous+’ mode makes Tesla’s flagship P100D even quicker, cutting its 0-60 mph time to 2.4 seconds, a tweet from Elon Musk today suggests the car could be quicker still if it were “stripped down”.

The first of a series of tweets by Musk today noted that the new P100D Ludicrous+ Easter egg on a Model S will allow it to accelerate to 60 mph in as little as 2.34 seconds. That tweet got a response from the folks at Motor Trend, who kindly offered to validate Tesla’s claim.

Bring it on! We'd be happy to validate the P100D, as we did the P90D's Ludicrous (2.6 sec) 0-60 mph time https://t.co/WzK5OmByfz

— motortrend (@MotorTrend) January 12, 2017

Also responding was Twitter user Trevor Clark, who pointed to the FF 91’s impressive 2.39-second 0-60 mph time, though with a backhanded compliment: in a tweet mentioning Musk, Clark noted that Faraday Future’s 1,050-horsepower electric car has no interior and uses lightweight race seats.

Musk later clarified that the 2.34-second 0-60 mph time applies to a production car, while a stripped-down, race-ready Tesla might reach 60 mph in a mind-boggling 2.1 seconds. “Good point. 2.34 would be a production Tesla. Stripped down, maybe as low as 2.1.”

Good point. 2.34 would be a production Tesla. Stripped down, maybe as low as 2.1.

— Elon Musk (@elonmusk) January 12, 2017

Aside from tweeting about Tesla’s Ludicrous+ performance, the serial tech entrepreneur also noted that the next iteration of the company’s Autopilot software is expected to begin a worldwide over-the-air rollout in the coming week. According to Musk’s tweet, first-generation Autopilot vehicles on “hardware 1” could receive the update as early as this weekend.

If the results look good from the latest point release, then we are days away from a release to all HW1 and to all HW2 early next week

— Elon Musk (@elonmusk) January 12, 2017

Autopilot continues to be one of Musk’s top priorities, as evidenced by the recent hiring of Chris Lattner as Tesla’s new Vice President of Autopilot Software. Lattner is an 11-year veteran of Apple, where he is credited with creating Swift, Apple’s programming language for building apps.

Once the software is fully activated, drivers of cars with the Hardware 2 package will be able to use what Tesla now calls Enhanced Autopilot. According to Tesla, vehicles equipped with Autopilot 2.0 hardware, which Musk refers to as “hardware 2,” will be capable of Level 5 fully autonomous operation once regulators approve self-driving cars on public roads.

Until then, Enhanced Autopilot “will match speed to traffic conditions, keep within a lane, automatically change lanes without requiring driver input, transition from one freeway to another, exit the freeway when your destination is near, self-park when near a parking spot and be summoned to and from your garage,” according to the company.

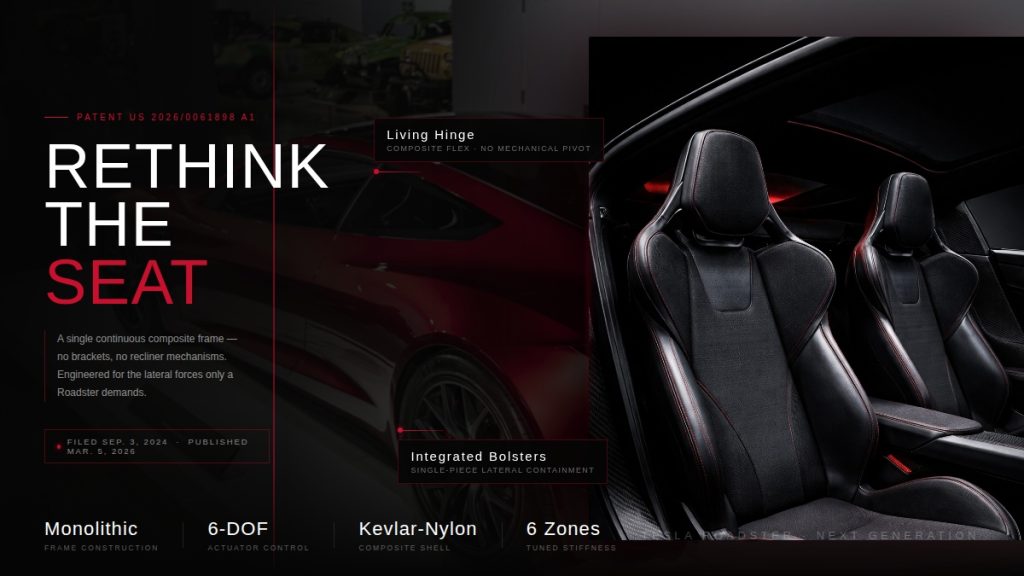

Tesla Roadster patent hints at radical seat redesign ahead of reveal

A newly published Tesla patent could offer one of the clearest signals yet that the long-awaited next-generation Roadster is nearly ready for its public debut.

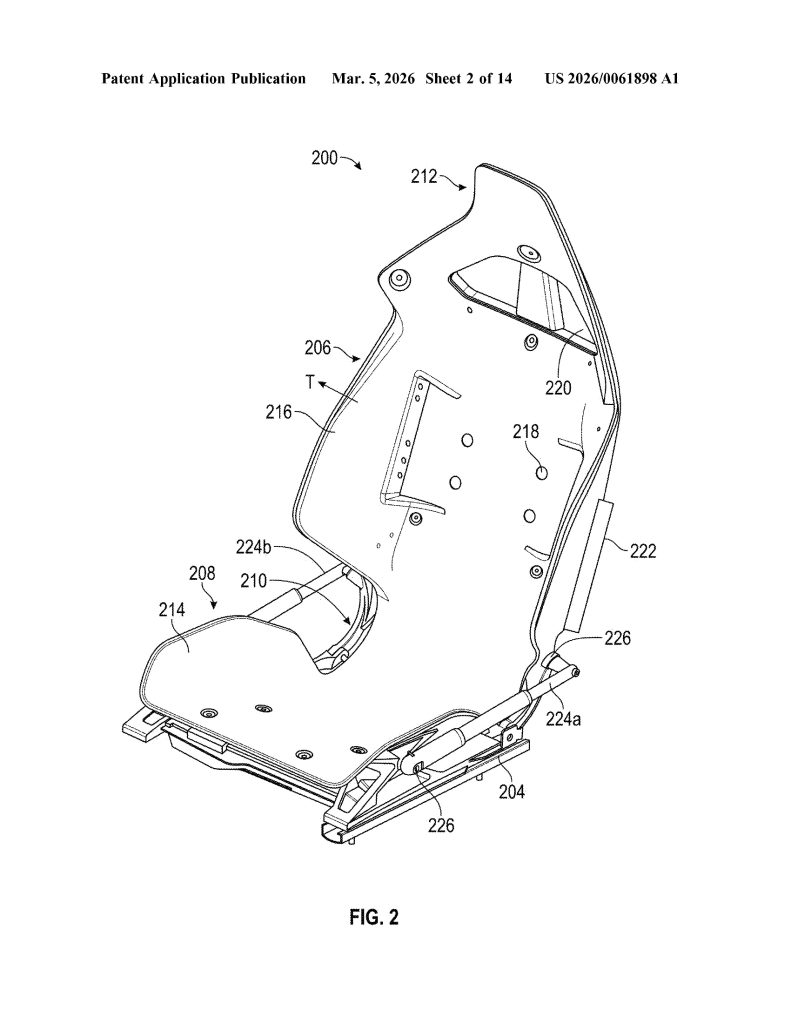

Patent No. US 20260061898 A1, published on March 5, 2026, describes a “vehicle seat system” built around a single continuous composite frame, a dramatic departure from the dozens of metal brackets, recliner mechanisms, and rivets that make up a traditional car seat. Tesla calls it a monolithic structure, with the seat portion, backrest, headrest, and bolsters all thermoformed as one unified piece.

The approach mirrors Tesla’s broader manufacturing philosophy. The same company that pioneered massive aluminum castings to eliminate hundreds of body components is now applying that logic to the cabin. Fewer parts means fewer potential failure points, less weight, and a cleaner assembly process overall.

The timing of the filing is difficult to ignore. Elon Musk has publicly targeted April 1, 2026 as the date for an “unforgettable” Roadster design reveal, and two new Roadster trademarks were filed just last month. A patent describing a seat architecture suited to a hypercar fits that timeline, especially for a car Tesla has promised will hit 60 mph in under two seconds.

The Roadster, originally unveiled in 2017, has been one of Tesla’s most anticipated yet most delayed products. With a target price around $200,000 and engineering ambitions to match, it is being positioned as the ultimate showcase for what Tesla’s technology can do.

The patent was first flagged by @seti_park on X.

Tesla Roadster Monolithic Seat: Feature Highlights via US Patent 20260061898 A1

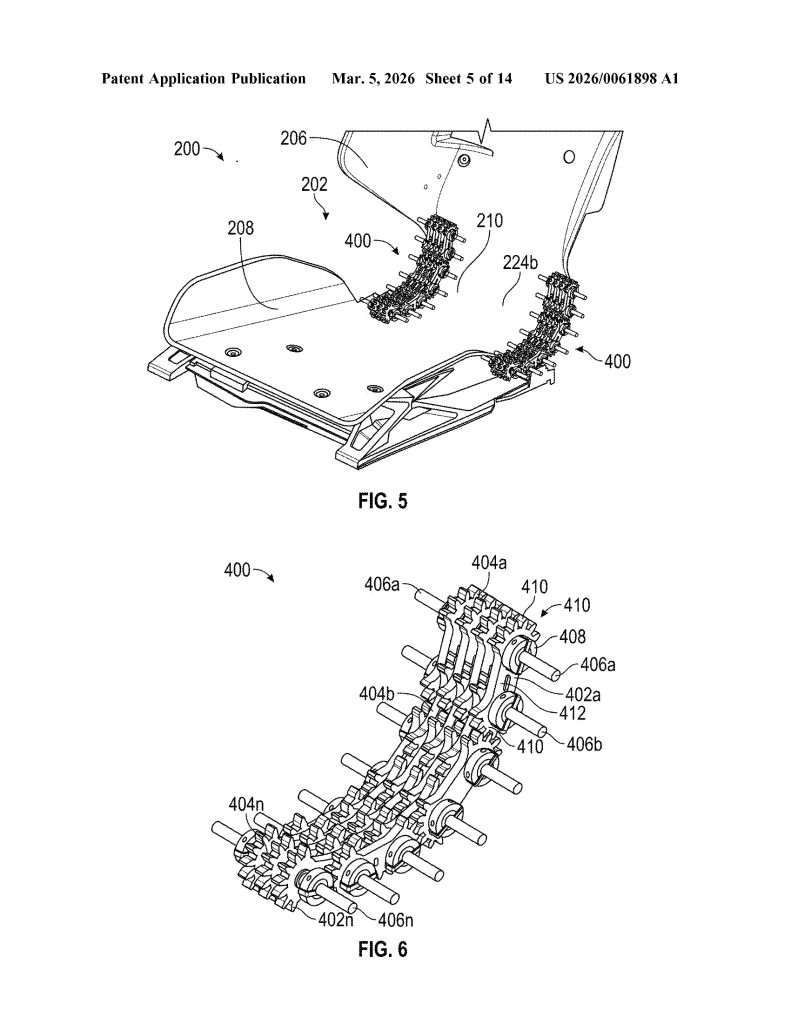

- Single Continuous Frame (Monolithic Construction). The core invention is a seat assembly built from one continuous frame that integrates the seat portion, backrest portion, and hinge into a single component, eliminating the separate structural parts and mechanical joints typical of conventional seats.

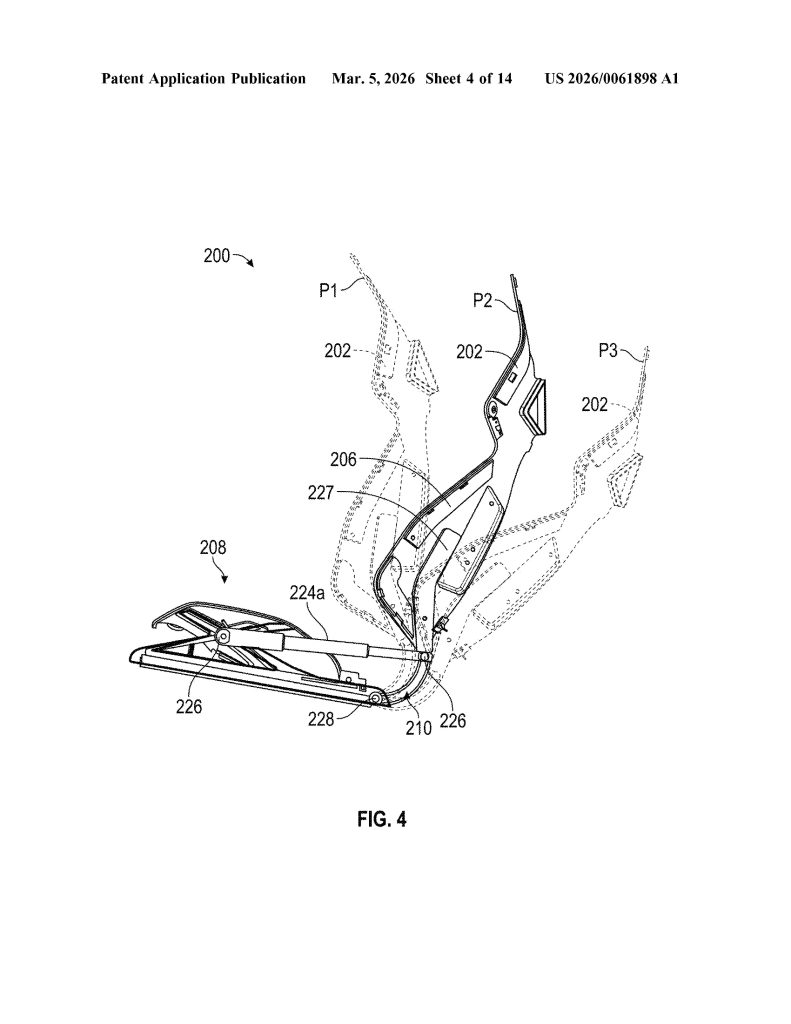

- Integrated Flexible Hinge. Rather than using a traditional mechanical recliner, the hinge is built directly into the continuous frame and is designed to flex, allowing the backrest to move relative to the seat portion. The hinge can be implemented as a fiber composite leaf spring or as an assembly of rigid linkages.

- Thermoformed Anisotropic Composite Material. The continuous frame is manufactured via thermoforming from anisotropic composite materials, including fiberglass-nylon, fiberglass-polymer, nylon carbon composite, Kevlar-nylon, or Kevlar-polymer composites, enabling a molded-to-shape monolithic structure.

- Regionally Tuned Stiffness Zones. The frame is engineered with up to six distinct stiffness regions (R1–R6) across the seat, backrest, hinge, headrest, and bolsters. Each zone can have a different stiffness, allowing precise ergonomic and structural tuning without adding separate components.

- Linkage Assembly Hinge Mechanism. The hinge incorporates one or more linkage assemblies consisting of multiple interlocking links with gears, connected by rods. When driven by motors or actuators, these linkages act as a flexible member to control backrest movement along a precise, ergonomically optimized trajectory.

- Multi-Actuator Six-Degree-of-Freedom Positioning System. The seat uses four distinct actuator pairs, all controlled by a central controller. These actuators work in coordinated combinations to achieve fore/aft, height, cushion tilt, and backrest rotation adjustments simultaneously.

- ECU-Based Controller Architecture. An Electronic Control Unit (ECU) and programmable controller manage all seat actuators, receive user input via a user interface (touchscreen, buttons, or switches), and incorporate sensor feedback to confirm and maintain desired seat positions, essentially making this a software-driven seat system.

- Airbag-Integrated Bolster Deployment System. The backrest bolsters (216) are geometrically shaped and sized to guide airbag deployment along a specific, pre-configured trajectory. Left and right bolsters can have different shapes so that each guides its respective airbag along a distinct trajectory, improving occupant protection.

- Ventilation Holes Formed into the Backrest. The continuous frame includes one or more ventilation holes formed directly into the backrest portion, configured either to receive airflow into the seat frame or to deliver airflow from it, enabling passive or active thermal comfort without separate ventilation components.

- Soft Trim Recess for Tool-Free Integration. The headrest and backrest portions together define a molded recess, specifically designed to receive and secure a soft trim component (foam, fabric, or cushioning) directly into the continuous frame, eliminating the need for separate attachment hardware and simplifying final assembly.

Elon Musk’s xAI plans $659M expansion at Memphis supercomputer site

Elon Musk’s artificial intelligence company xAI has filed a permit to construct a new building at its growing data center complex outside Memphis, Tennessee.

As per a report from Data Center Dynamics, xAI plans to spend about $659 million on a new facility adjacent to its Colossus 2 data center. Permit documents submitted to the Memphis and Shelby County Division of Planning and Development show the proposed structure would be a four-story building totaling about 312,000 square feet.

The new building is planned for a 79-acre parcel located at 5414 Tulane Road, next to xAI’s Colossus 2 data center site. Permit filings indicate the structure would reach roughly 75 feet high, though the specific function of the building has not been disclosed.

The filing was first reported by the Memphis Business Journal.

xAI uses its Memphis data centers to power Grok, the company’s flagship large language model. The company entered the Memphis area in 2024, launching its Colossus supercomputer in a repurposed Electrolux factory located in the Boxtown district.

The company later acquired land for the Colossus 2 data center in March last year. That facility came online in January.

A third data center, located across the Tennessee–Mississippi border, is also planned for the cluster. Musk has stated that the broader campus could eventually provide access to about 2 gigawatts of compute power.

The Memphis cluster is also tied to new power infrastructure commitments announced by SpaceX President Gwynne Shotwell. During a White House event with United States President Donald Trump, Shotwell stated that xAI would develop 1.2 gigawatts of power for its supercomputer facility as part of the administration’s “Ratepayer Protection Pledge.”

“As you know, xAI builds huge supercomputers and data centers and we build them fast. Currently, we’re building one on the Tennessee-Mississippi state line… xAI will therefore commit to develop 1.2 GW of power as our supercomputer’s primary power source. That will be for every additional data center as well…

“The installation will provide enough backup power to power the city of Memphis, and more than sufficient energy to power the town of Southaven, Mississippi where the data center resides. We will build new substations and invest in electrical infrastructure to provide stability to the area’s grid,” Shotwell said.

Shotwell also stated that xAI plans to support the region’s water supply through new infrastructure tied to the project. “We will build state-of-the-art water recycling plants that will protect approximately 4.7 billion gallons of water from the Memphis aquifer each year. And we will employ thousands of American workers from around the city of Memphis on both sides of the TN-MS border,” she said.

Tesla wins another award critics will absolutely despise

Tesla just won another award that critics will absolutely despise, having once again been recognized as the automaker with the most sustainable supply chain.

Tesla has proven its critics wrong again, securing the top spot on the 2026 Lead the Charge Auto Supply Chain Leaderboard for the second consecutive year.

NEWS: Tesla ranked 1st on supply chain sustainability in the 2026 Lead the Charge auto/EV supply chain scorecard.

“@Tesla remains the top performing automaker of the Leaderboard for the second year running, and increased its overall score by 6 percentage points, while Ford only… pic.twitter.com/nAgGOIrGFS

— Sawyer Merritt (@SawyerMerritt) March 4, 2026

This independent ranking, produced by a coalition of environmental, human rights, and investor groups including the Sierra Club and Transport & Environment, evaluates 18 major automakers on their efforts to build equitable, sustainable, and fossil-free supply chains for electric vehicles.

Tesla earned an overall score of 49 percent, up 6 percentage points from the previous year, widening its lead over second-place Ford (45 percent, up 2 points) to a commanding 4-percentage-point gap. The company also excelled in the Fossil Free & Environment category with a 50 percent score, reflecting strong progress in reducing emissions and decarbonizing operations.

Perhaps the most impressive achievement came in the batteries subsection, where Tesla posted a massive +20-point jump to reach 51 percent, becoming the first automaker ever to surpass 50 percent in this critical area.

Tesla achieved this milestone through transparency, fully disclosing Scope 3 emissions breakdowns for battery cell production and key materials like lithium, nickel, cobalt, and graphite.

The company also requires suppliers to conduct due diligence aligned with OECD guidelines on responsible sourcing, which it has mentioned in past Impact Reports.

While Tesla leads comfortably in climate and environmental performance, it scores 48 percent in human rights and responsible sourcing, slightly behind Ford’s 49 percent.

The company made notable gains in workers’ rights remedies, but has room to improve on issues like Indigenous Peoples’ rights.

Overall, the leaderboard highlights that a core group of leaders (Tesla, Ford, Volvo, Mercedes, and Volkswagen) is advancing twice as fast as its peers, showing that cleaner, more ethical EV supply chains are not just possible but already underway.

For Tesla detractors who claim EVs aren’t truly green or that the company cuts corners, this recognition from sustainability-focused NGOs delivers a powerful rebuttal.

Tesla’s vertical integration, direct supplier contracts, low-carbon material agreements (like its North American aluminum deal with emissions under 2 kg CO₂e per kg), and raw materials reporting continue to set the industry standard.

As the world races toward electrification, Tesla isn’t just building cars; it’s building a more responsible future.