News

Tesla Autopilot Abusers need to be held accountable, but how?

Tesla Autopilot abusers need to be held accountable for their actions. For years, Tesla engineers have worked long and hard to improve Autopilot and Full Self-Driving. Hundreds of thousands of hours have gone into these driver-assistance programs, whether through software development or other means. However, years of hard work, diligence, and improvement can be wiped from the public’s perception in a minute by one foolish, irresponsible, and selfish act, often driven by an owner’s need to show off their car’s semi-autonomous functionalities to others.

The most recent example of this is Param Sharma, a self-proclaimed “rich as f***” social media influencer who has spent the last few days sparring with Tesla enthusiasts over his selfish and undeniably dangerous act of jumping in the backseat while his car is operating on Autopilot. Sharma has been seen on numerous occasions sitting in the backseat of his car while the vehicle drives itself. It is almost a sure thing that Sharma is using several cheat devices in his Tesla to bypass the typical barriers the company has installed to ensure drivers are paying attention. These include a steering wheel sensor, seat sensors, and seatbelt sensors, all of which must be engaged by the driver while Autopilot is in use. We have seen several companies and some owners use DIY hack devices to bypass these safety thresholds. These are hazardous acts for several reasons, the most important being the lack of appreciation for other human lives.

This is a preview from our weekly newsletter. Each week I go ‘Beyond the News’ and handcraft a special edition that includes my thoughts on the biggest stories, why they matter, and how they could impact the future.

While Tesla fans and enthusiasts are undoubtedly confident in the abilities of Autopilot and Full Self-Driving, they will also admit that these suites need to be used responsibly and as the company describes. Tesla has never indicated that its vehicles can drive themselves, a capability that would be characterized as “Level 5 Autonomy.” The company also indicates that drivers must keep their hands on the steering wheel at all times. Tesla has installed several safety features to ensure that the car’s operator follows these rules. If these safety precautions are not followed, the driver runs the risk of being put in “Autopilot Jail,” where the feature will not be available to them for the remainder of their drive.

As previously mentioned, however, there are cheat devices for all of these safety features. This is where Tesla cannot necessarily control what goes on, and law enforcement, in my opinion, is more responsible than the company actually is. It is law enforcement’s job to stop this from happening if an officer sees it occurring. Nobody should be able to climb into the backseat of their vehicle while it is driving. At least not until many years of testing are completed, and many miles of fully autonomous functionalities are proven to be accurate and robust enough to handle real-world traffic.

The reason Tesla should step in, in my opinion, and create a list of repeat offenders who have proven themselves to be irresponsible and not trustworthy enough for Autopilot and FSD, is that if an accident happens while these influencers or everyday drivers are taking advantage of Autopilot’s capabilities, Tesla, along with every other company working to develop Level 5 Autonomous vehicles, takes a huge step backward. Not only will Tesla feel the most criticism from the media, but it will be poured on as the company is taking no real steps to prevent it from happening. We in the Tesla community know what the vehicles can do and what safety precautions have been installed to prevent these incidents from happening. However, mainstream media outlets do not have an explicit and in-depth understanding of Tesla’s capabilities. There is plenty of evidence to suggest that they have no intention of improving their comprehension of what Tesla does daily.

While talking to someone about this subject on Thursday, they highlighted that this isn’t Tesla’s concern. And while I believe that it really isn’t, I don’t think that’s an acceptable answer to solve all of the abuses going on with the cars. Tesla should take matters into its own hands, and I believe it should because it has done it before. Elon Musk and Tesla decided to expand the FSD Beta testing pool recently, but the company also revoked access to some people who have decided that they would not use the functionality properly. Why is this any different in the case of AP/FSD? Just because someone pays for something doesn’t mean the company cannot revoke access to it. If you pay for access to play video games online and hack or use abusive language, there are major consequences. Your console can get banned, and you would be required to buy a completely new unit if you ever wished to play online video games again.

While unfortunate, Tesla will have to make a stand against those who abuse Autopilot, in my opinion. There need to be heavier consequences from the company simply because an accident caused by abuse or misuse of the functionalities could set the company back several years and stall its work to solve Level 5 Autonomy. There is entirely too much at stake here to even begin to let people off the hook. I believe that Tesla’s actions should follow law enforcement action. When police officers find someone violating the proper use of the system, the normal reckless driving charges should apply, and there should be increasingly worse consequences for every subsequent offense. Perhaps after the third offense, Tesla could be contacted and could have AP/FSD taken off of the car. There could be a probationary period or a zero-tolerance policy; it would all be up to the company.

I believe that this needs to be taken seriously, and there need to be consequences because of the blatant disregard for other people and their work. The irresponsible use of AP/FSD by childish drivers means that Tesla’s hard work is being jeopardized by horrible behavior. While many people don’t enjoy driving, it still requires responsibility, and everyone on the road is trusting you to drive responsibly. It could cost your life or, even worse, someone else’s.

A big thanks to our long-time supporters and new subscribers! Thank you.

I use this newsletter to share my thoughts on what is going on in the Tesla world. If you want to talk to me directly, you can email me or reach me on Twitter. I don’t bite, so be sure to reach out!

News

BREAKING: Tesla launches public Robotaxi rides in Austin with no Safety Monitor

Tesla has officially launched public Robotaxi rides in Austin, Texas, without a Safety Monitor in the vehicle, marking the first time the company has removed anyone from the vehicle other than the rider.

The Safety Monitor, seated in the passenger’s seat, has been present in Tesla Robotaxis in Austin since the service launched last June, maintaining safety for passengers and other vehicles.

Tesla planned to remove the Safety Monitor at the end of 2025, but it was not quite ready to do so. Now, in January, riders are officially reporting that they are able to hail a ride from a Model Y Robotaxi without anyone in the vehicle:

I am in a robotaxi without safety monitor pic.twitter.com/fzHu385oIb

— TSLA99T (@Tsla99T) January 22, 2026

Tesla started testing this internally late last year and had several employees show that they were riding in the vehicle without anyone else there to intervene in case of an emergency.

Tesla has now expanded that program to the public, but it is currently unclear if that is the case across its entire fleet of vehicles in Austin at this point.

The Robotaxi program also operates in the California Bay Area, where the fleet is much larger, but Safety Monitors are placed in the driver’s seat and utilize Full Self-Driving, so it is essentially the same as an Uber driver using a Tesla with FSD.

In Austin, the removal of Safety Monitors marks a substantial achievement for Tesla moving forward. Now that it has enough confidence to remove Safety Monitors from Robotaxis altogether, there are nearly unlimited options for the company in terms of expansion.

While Tesla is hoping to launch the ride-hailing service in more cities across the U.S. this year, this is a much larger development than expansion, at least for now: it is the first time the company is offering driverless Robotaxi rides to the public anywhere in the world.

Investor's Corner

Tesla Earnings Call: Top 5 questions investors are asking

Tesla has scheduled its Earnings Call for Q4 and Full Year 2025 for next Wednesday, January 28, at 5:30 p.m. EST, and investors are already preparing to get some answers from executives regarding a wide variety of topics.

The company accepts several questions from retail investors through the platform Say, which then allows shareholders to vote on the best questions.

Tesla does not answer anything regarding future product releases, but it is willing to shed light on current timelines, progress of certain projects, and other plans.

There are five questions that range over a variety of topics, including SpaceX, Full Self-Driving, Robotaxi, and Optimus, which are currently in the lead to be asked and potentially answered by Elon Musk and other Tesla executives:

- You once said: Loyalty deserves loyalty. Will long-term Tesla shareholders still be prioritized if SpaceX does an IPO?

- Our Take – With a lot of speculation regarding an incoming SpaceX IPO, Tesla investors, especially long-term ones, should be able to benefit from an early opportunity to purchase shares. This has been discussed endlessly over the past year, and we must be getting close to it.

- When is FSD going to be 100% unsupervised?

- Our Take – Musk said today that this is essentially a solved problem, and it could be available in the U.S. by the end of this year.

- What is the current bottleneck to increase Robotaxi deployment & personal use unsupervised FSD? The safety/performance of the most recent models or people to monitor robots, robotaxis, in-car, or remotely? Or something else?

- Our Take – The bottleneck seems to be data: Musk said Tesla needs 10 billion miles of it to achieve unsupervised FSD. Once that happens, regulatory issues will be what hold things up from moving forward.

- Regarding Optimus, could you share the current number of units deployed in Tesla factories and actively performing production tasks? What specific roles or operations are they handling, and how has their integration impacted factory efficiency or output?

- Our Take – Optimus is going to have a larger role in factories moving forward, with larger responsibilities later this year.

- Can you please tie purchased FSD to our owner accounts vs. locked to the car? This will help us enjoy it in any Tesla we drive/buy and reward us for hanging in so long, some of us since 2017.

- Our Take – This is a good one and should get us some additional information on the FSD transfer plans and Subscription-only model that Tesla will adopt soon.

Tesla will have its Earnings Call on Wednesday, January 28.

Elon Musk

Elon Musk shares incredible detail about Tesla Cybercab efficiency

Elon Musk shared an incredible detail about Tesla Cybercab’s potential efficiency, as the company has hinted in the past that it could be one of the most affordable vehicles to operate on a per-mile basis.

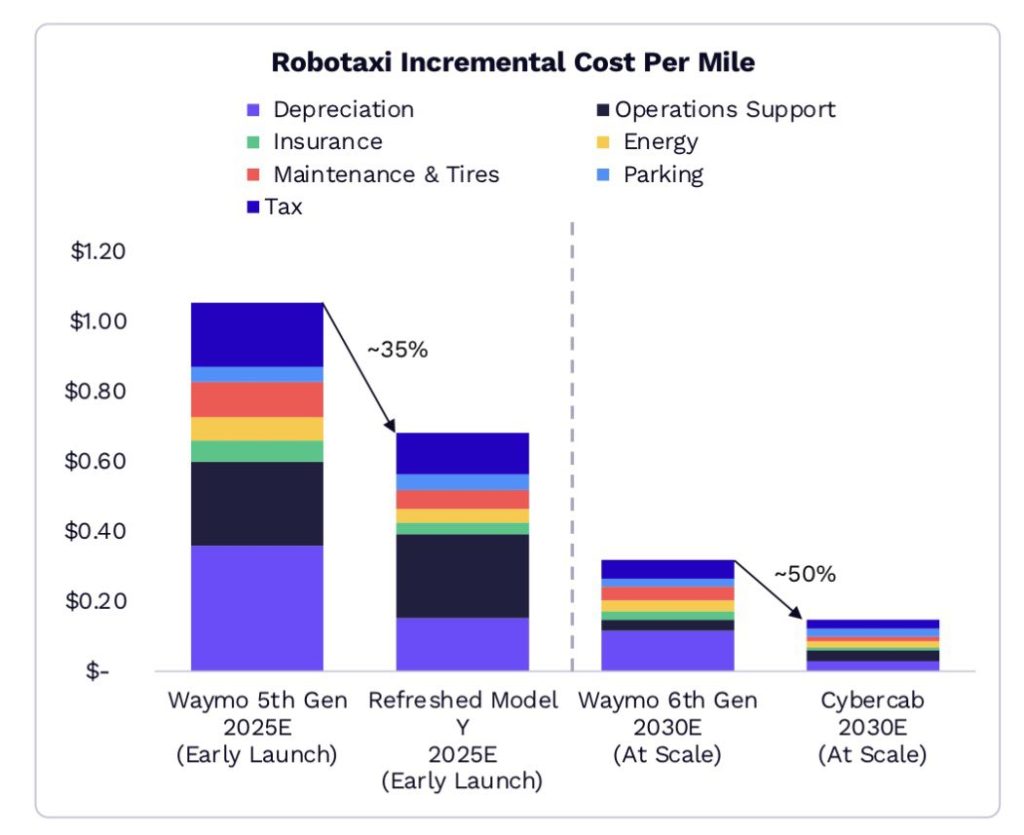

ARK Invest released a report recently that shed some light on the potential incremental cost per mile of various Robotaxis that will be available on the market in the coming years.

The Cybercab, whose costs are projected for the year 2030, has an exceptionally low cost of operation, which is something Tesla revealed when it unveiled the vehicle a year and a half ago at the “We, Robot” event in Los Angeles.

Musk has said on numerous occasions that Tesla plans to hit the $0.20 per mile mark with the Cybercab, describing a “clear path” to achieving that figure and emphasizing it is the “full considered” cost, which would include energy, maintenance, cleaning, depreciation, and insurance.

Probably true

— Elon Musk (@elonmusk) January 22, 2026

ARK’s report showed that the Cybercab would be roughly half the cost of the Waymo 6th Gen Robotaxi in 2030, as that would come in at around $0.40 per mile all in. Cybercab, at scale, would be at $0.20.

Credit: ARK Invest

This would be a dramatic decrease in the cost of operation for Tesla, and the savings would then be passed on to customers who choose to utilize the ride-sharing service for their own transportation needs.

The U.S. average cost of new vehicle ownership is about $0.77 per mile, according to AAA. Meanwhile, Uber and Lyft rideshares often cost between $1 and $4 per mile, while Waymo can cost between $0.60 and $1 or more per mile, according to some estimates.
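To put those figures side by side, here is a minimal sketch that expresses each quoted per-mile cost as a multiple of the Cybercab’s $0.20 target. The dollar amounts come from the figures cited above; the dictionary labels and the function name are illustrative only, and the “$1 or more” Waymo estimate is treated as a flat $1.00 upper bound for simplicity.

```python
# Per-mile figures quoted in the article, in USD, stored as (low, high).
# Single-point estimates repeat the same value for both bounds.
COST_PER_MILE = {
    "Cybercab (ARK, 2030)": (0.20, 0.20),
    "Waymo 6th Gen (ARK, 2030)": (0.40, 0.40),
    "New vehicle ownership (AAA)": (0.77, 0.77),
    # The article says "$0.60 and $1 or more"; 1.00 is a simplification.
    "Waymo ride (estimates)": (0.60, 1.00),
    "Uber/Lyft ride": (1.00, 4.00),
}

BASELINE = "Cybercab (ARK, 2030)"


def multiples_of_baseline(costs, baseline):
    """Return each (low, high) cost as a multiple of the baseline cost."""
    base = costs[baseline][0]
    return {
        name: (round(low / base, 2), round(high / base, 2))
        for name, (low, high) in costs.items()
    }


ratios = multiples_of_baseline(COST_PER_MILE, BASELINE)

if __name__ == "__main__":
    for name, (low, high) in ratios.items():
        if low == high:
            print(f"{name}: {low}x the Cybercab target")
        else:
            print(f"{name}: {low}x to {high}x the Cybercab target")
```

On these numbers, the projected Waymo figure is twice the Cybercab target, and a typical Uber or Lyft ride is five to twenty times as expensive per mile.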

Tesla’s engineering has been the true driver of these cost efficiencies, and its focus on creating a vehicle that is as cost-effective to operate as possible is truly going to pay off as the vehicle begins to scale. Tesla wants to get the Cybercab to about 5.5-6 miles per kWh, which has been discussed with prototypes.
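For a rough sense of what 5.5 to 6 miles per kWh means for energy cost alone, the sketch below divides an electricity price by the efficiency figure. The $0.15 per kWh price is purely a hypothetical placeholder, not a number from Tesla or ARK, and the function name is illustrative; real charging costs vary widely by location and time of day.

```python
# Hypothetical electricity price in USD per kWh (an assumption for
# illustration only; it does not come from the article).
ASSUMED_PRICE_PER_KWH = 0.15


def energy_cost_per_mile(miles_per_kwh, price_per_kwh):
    """Energy cost per mile in USD: price per kWh divided by miles per kWh."""
    if miles_per_kwh <= 0:
        raise ValueError("miles_per_kwh must be positive")
    return price_per_kwh / miles_per_kwh


if __name__ == "__main__":
    # Efficiency range quoted in the article for Cybercab prototypes.
    for efficiency in (5.5, 6.0):
        cost = energy_cost_per_mile(efficiency, ASSUMED_PRICE_PER_KWH)
        print(f"{efficiency} mi/kWh -> ${cost:.3f} per mile in energy")
```

Under that assumed price, energy would account for only a few cents of the $0.20 per mile target, leaving the rest for maintenance, cleaning, depreciation, and insurance.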

Additionally, fewer parts due to the unboxed manufacturing process, a lower initial cost, and eliminating the need to pay humans for their labor would also contribute to a cheaper operational cost overall. While aspirational, all of the ingredients for this to be a real goal are there.

It may take some time, as Tesla needs to hammer out the manufacturing processes, and Musk has said there will be growing pains early. This week, he said regarding the early production efforts:

“…initial production is always very slow and follows an S-curve. The speed of production ramp is inversely proportionate to how many new parts and steps there are. For Cybercab and Optimus, almost everything is new, so the early production rate will be agonizingly slow, but eventually end up being insanely fast.”