Elon Musk’s cautionary statements about uncontrolled experimentation with artificial intelligence (AI) have caused some to ridicule him as a fear-monger, and have given many in the mainstream press the idea that he is opposed to using AI, which is very far from the truth. In fact, AI is a major component of Tesla’s Autopilot system, and the company applies it in several other areas as well.

It was only recently that Tesla publicly revealed that it is working on its own AI hardware. At the NIPS machine learning conference in December, Elon Musk announced that Tesla is “developing specialized AI hardware that we think will be the best in the world.” The company has offered few details, but it’s widely assumed that the main application will be processing the algorithms for Tesla’s Autopilot software.

As Bernard Marr reports in a recent article in Forbes, there’s little doubt that Tesla is way ahead of its potential rivals in the data-gathering department. Every Model S and X built with the Autopilot hardware suite, which was introduced in September 2014, has the potential to become self-driving, and all Tesla vehicles, Autopilot-enabled or not, continually gather data and send it to the cloud. The company has many more sensors on the roads than any of its Detroit or Silicon Valley rivals, and the number will mushroom when Model 3 production hits its stride.

Tesla crowd-sources data from its vehicles and could one day also collect data on its drivers, through internal cameras that detect hand placement on the controls or a driver’s state of alertness. The company uses the information not only to improve Autopilot by generating data-dense maps, but also to diagnose driving behavior. Many believe that this sort of data will prove to be a valuable commodity that could be sold to third parties (much as data on web-browsing habits is today). McKinsey & Company has estimated that the market for vehicle-gathered data could be worth $750 billion a year by 2030.

Forbes explains that the AI built into Tesla’s system operates at several levels. Machine learning in the cloud educates the entire fleet, while within each individual vehicle, “edge computing” can make decisions about actions a car needs to take immediately. There’s also a third level of decision-making, in which cars can form networks with other Tesla vehicles nearby in order to share local information. In the future, when there are lots of autonomous cars on the road, these networks could also interface with cars from other makers, and systems such as traffic cameras, road-based sensors, and mobile phones.

At this point, no one knows what new forms of AI technology the mad scientists in Palo Alto are cooking up, but Forbes found some clues on the Facebook page of Tesla’s hardware partner Nvidia: “In contrast to the usual approach to operating self-driving cars, we did not program any explicit object detection, mapping, path planning or control components into this car. Instead, the car learns on its own to create all necessary internal representations necessary to steer, simply by observing human drivers.”

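This end-to-end approach, in which the car learns directly from examples of human driving, is sometimes described as unsupervised learning, although training on recorded human steering is technically a form of supervised learning (often called imitation learning). It contrasts with the more familiar modular approach, in which engineers explicitly program separate components for object detection, mapping, path planning and control. Each approach has its pros and cons, and it’s likely that Tesla’s strategy includes both.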
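The idea of a car learning to steer “simply by observing human drivers” can be illustrated with a toy example in which recorded human steering angles serve as the training targets. The sketch below is entirely hypothetical: the single lane-offset feature, the demonstration data, and the names `fit_steering_policy` and `policy` are invented for illustration, and a straight-line least-squares fit stands in for the neural network that Nvidia’s system reportedly trains on camera images.

```python
# Hypothetical "human demonstrations": (lane_offset_m, steering_angle_deg) pairs.
# Positive offset = car is right of lane center; the human steers back toward center.
demonstrations = [(-1.0, 5.0), (-0.5, 2.5), (0.0, 0.0), (0.5, -2.5), (1.0, -5.0)]


def fit_steering_policy(data):
    """Fit steering = w * offset + b by ordinary least squares.

    Each example pairs an input (what the car sees) with the action a human
    took, so the human's action acts as the label.
    """
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in data)
    var = sum((x - mean_x) ** 2 for x, _ in data)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


w, b = fit_steering_policy(demonstrations)


def policy(offset):
    """Steering angle the learned model predicts for a given lane offset."""
    return w * offset + b


print(f"learned policy: steering = {w:.2f} * offset + {b:.2f}")
print(f"predicted steering at +0.2 m offset: {policy(0.2):.2f} deg")
```

Because every training example pairs an input with the human’s recorded action, this style of learning by imitation is generally classed as supervised learning, even though nobody hand-labels individual decisions as right or wrong.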

Forbes reports that Tesla’s use of AI is not limited to Autopilot: the company employs machine learning in its design and manufacturing processes, to process customer data, and even to scan the text in online forums for insights into commonly reported problems. It’s ironic that some in the press choose to portray Elon Musk as an AI Luddite, when in fact Tesla may be one of the most sophisticated users of the technology.

===

Note: Article originally published on evannex.com, by Charles Morris

Source: Forbes


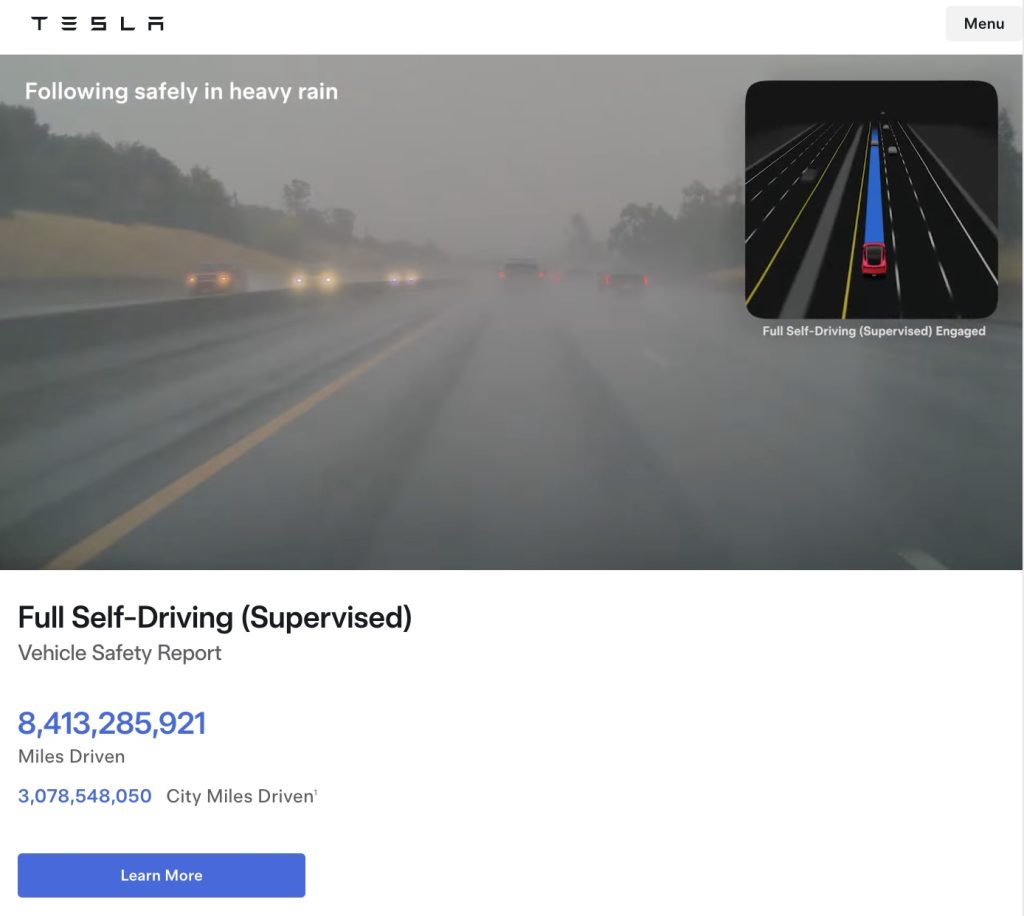

Tesla FSD (Supervised) fleet passes 8.4 billion cumulative miles

The figure appears on Tesla’s official safety page, which tracks performance data for FSD (Supervised) and other safety technologies.

Tesla’s Full Self-Driving (Supervised) system has now surpassed 8.4 billion cumulative miles.


Tesla has long emphasized that large-scale real-world data is central to improving its neural-network-based approach to autonomy. Each mile driven with FSD (Supervised) engaged can surface new edge cases and supply additional training scenarios for the system.

The milestone also brings Tesla closer to a benchmark previously outlined by CEO Elon Musk. Musk has stated that roughly 10 billion miles of training data may be needed to achieve safe unsupervised self-driving at scale, citing the “long tail” of rare but complex driving situations that must be learned through experience.

FSD (Supervised) mileage has grown steeply over the past five years.

As noted in data shared by Tesla watcher Sawyer Merritt, annual FSD (Supervised) miles have increased from roughly 6 million in 2021 to 80 million in 2022, 670 million in 2023, 2.25 billion in 2024, and 4.25 billion in 2025. In just the first 50 days of 2026, Tesla owners logged another 1 billion miles.

At the current pace, the fleet is on track to pass about 10 billion cumulative FSD (Supervised) miles this year. The growth has been driven by Tesla’s expanding vehicle fleet, periodic free trials, and growing Robotaxi operations, among other factors.
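The annual figures above can be cross-checked with a few lines of arithmetic. This sketch uses only the rounded numbers quoted in the article and assumes, purely for illustration, that the early-2026 rate of 1 billion miles per 50 days stays constant; the variable names are hypothetical.

```python
# Rounded annual FSD (Supervised) miles as quoted in the article.
ANNUAL_MILES = {
    2021: 6e6,
    2022: 80e6,
    2023: 670e6,
    2024: 2.25e9,
    2025: 4.25e9,
}
MILES_FIRST_50_DAYS_2026 = 1e9
MILESTONE = 8.4e9  # cumulative figure reported on Tesla's safety page
TARGET = 10e9      # the roughly 10 billion mile benchmark cited by Musk

# Cumulative total implied by the annual figures.
cumulative_through_2025 = sum(ANNUAL_MILES.values())
cumulative_after_50_days = cumulative_through_2025 + MILES_FIRST_50_DAYS_2026

# Assumption: the early-2026 daily rate holds for the rest of the year.
daily_rate = MILES_FIRST_50_DAYS_2026 / 50
annualized_2026 = daily_rate * 365

# Days needed to go from the 8.4 billion milestone to 10 billion at that rate.
days_to_target = (TARGET - MILESTONE) / daily_rate

print(f"Cumulative through 2025:  {cumulative_through_2025 / 1e9:.3f} billion")
print(f"Cumulative after 50 days: {cumulative_after_50_days / 1e9:.3f} billion")
print(f"Daily rate in early 2026: {daily_rate / 1e6:.0f} million")
print(f"Annualized 2026 pace:     {annualized_2026 / 1e9:.1f} billion")
print(f"Days from 8.4B to 10B:    {days_to_target:.0f}")
```

Summing the quoted figures gives about 8.26 billion miles, a little under the 8.4 billion on Tesla’s safety page, plausibly reflecting rounding and miles logged after the 50-day mark. At a constant 20 million miles per day, the fleet would cross 10 billion cumulative miles roughly 80 days after the 8.4 billion milestone; any further growth in the daily rate would shorten that.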

With the fleet now past 8.4 billion cumulative miles, Tesla’s supervised system is approaching that threshold, even as regulatory approval for fully unsupervised deployment remains subject to further validation and oversight.


Elon Musk fires back after Wikipedia co-founder claims neutrality and dubs Grokipedia “ridiculous”

Musk’s response to Wales’ comments, which were posted on social media platform X, was short and direct: “Famous last words.”

Elon Musk fired back at Wikipedia co-founder Jimmy Wales after the longtime online encyclopedia leader dismissed xAI’s new AI-powered alternative, Grokipedia, as a “ridiculous” idea that is bound to fail.


Wales made the comments while answering questions about Wikipedia’s neutrality. According to Wales, Wikipedia prides itself on neutrality.

“One of our core values at Wikipedia is neutrality. A neutral point of view is non-negotiable. It’s in the community, unquestioned… The idea that we’ve become somehow ‘Wokepedia’ is just not true,” Wales said.

When asked about potential competition from Grokipedia, Wales downplayed the situation. “There is no competition. I don’t know if anyone uses Grokipedia. I think it is a ridiculous idea that will never work,” Wales wrote.

After Grokipedia went live, Larry Sanger, also a co-founder of Wikipedia, wrote on X that his initial impression of the AI-powered Wikipedia alternative was “very OK.”

“My initial impression, looking at my own article and poking around here and there, is that Grokipedia is very OK. The jury’s still out as to whether it’s actually better than Wikipedia. But at this point I would have to say ‘maybe!’” Sanger stated.

Musk responded to Sanger’s assessment by saying it was “accurate.” In a separate post, he added that even in its V0.1 form, Grokipedia was already better than Wikipedia.

During a past appearance on The Tucker Carlson Show, Sanger argued that Wikipedia has drifted from its original vision, citing concerns about how its “Reliable sources/Perennial sources” framework categorizes publications by perceived credibility. According to Sanger, the list leans heavily left, with conservative publications effectively blacklisted in favor of their more liberal counterparts.

As of this writing, Grokipedia has reportedly surpassed 80% of English Wikipedia’s article count.


Tesla Sweden appeals after grid company refuses to restore existing Supercharger due to union strike

The charging site was previously functioning before it was temporarily disconnected in April last year for electrical safety reasons.

Tesla Sweden is seeking regulatory intervention after a Swedish power grid company refused to reconnect an already operational Supercharger station in Åre due to ongoing union sympathy actions.

A temporary construction power cabinet supplying the station had fallen over, described by Tesla as occurring “under unclear circumstances.” The power was then cut at the request of Tesla’s installation contractor to allow safe repair work.

While the safety issue was resolved, the station has not been brought back online. Stefan Sedin, CEO of Jämtkraft elnät, told Dagens Arbete (DA) that power will not be restored to the existing Supercharger station as long as the electric vehicle maker’s union issues are ongoing.

“One of our installers noticed that the construction power cabinet had been backed into and was lying on the ground. We asked Tesla to fix the system, and their installation company in turn asked us to cut the power so that they could do the work safely.

“When everything was restored, the question arose: ‘Wait a minute, can we reconnect the station to the electricity grid? Or what does the notice actually say?’ We consulted with our employer organization, who were clear that as long as sympathy measures are in place, we cannot reconnect this facility,” Sedin said.

The union’s sympathy actions, which began in March 2024, apply to work involving “planning, preparation, new connections, grid expansion, service, maintenance and repairs” of Tesla’s charging infrastructure in Sweden.

Tesla Sweden has argued that reconnecting an existing facility is not equivalent to establishing a new grid connection. In a filing to the Swedish Energy Market Inspectorate, the company stated that reconnecting the installation “is therefore not covered by the sympathy measures and cannot therefore constitute a reason for not reconnecting the facility to the electricity grid.”

Sedin, for his part, noted that the situation with the Supercharger is unusual. He stressed that Jämtkraft elnät itself has no dispute with Tesla and that its actions are based on the unions’ sympathy measures against the electric vehicle maker.

“This is absolutely the first time that I have been involved in matters relating to union conflicts or sympathy measures. That is why we have relied entirely on the assessment of our employer organization. This is not something that we have made any decisions about ourselves at all.

“It is not that Jämtkraft elnät has a conflict with Tesla, but our actions are based on these sympathy measures. Should it turn out that we have made an incorrect assessment, we will correct ourselves. It is no more difficult than that for us,” the executive said.