News

SpaceX customer reaffirms third Falcon Heavy mission’s Q2 2019 launch target

Taiwan’s National Space Organization (NSO) has reaffirmed a Q2 2019 launch target for SpaceX’s third-ever Falcon Heavy mission, a US Air Force-sponsored test launch opportunity known as Space Test Program 2 (STP-2).

STP-2 is set to host approximately two dozen customer spacecraft. One of the largest and most monetarily significant copassengers is Formosat-7, a six-satellite Earth sensing constellation built with the cooperation of Taiwan’s NSO and the United States’ NOAA (National Oceanic and Atmospheric Administration) for around $105M. If successfully launched, Formosat-7 will dramatically expand Taiwan’s domestic Earth observation and weather forecasting capabilities, important for a nation at high risk of typhoons and flooding rains.

Formosat-7, the latest generation of the series, is jointly developed by #Taiwan’s National Space Organization and the #US National Oceanic and Atmospheric Administration following an agreement signed in 2010. https://t.co/7hj2ijFutZ

— Asia Times (@asiatimesonline) January 7, 2019

Although Taiwan officials were unable to offer a target more specific than Q2 2019 (April to June), it’s understood by way of NASA comments and sources inside SpaceX that STP-2’s tentative launch target currently stands in April. For a number of reasons, chances are high that this ambitious launch target will slip into May or June. Notably, the simple fact that Falcon Heavy’s next two launches (Arabsat 6A and STP-2) are scheduled within just a few months of each other all but wipes out any possibility that both Heavy launches will feature all-new side and center boosters, strongly implying that whichever mission flies second will be launching on three flight-proven boosters.

To further ramp up the difficulty (and improbability), those three flight-proven Block 5 boosters would have to launch as an integrated Falcon Heavy, safely land (two by landing zone, one by drone ship), be transported to SpaceX facilities, and finally be refurbished and reintegrated for their second launch in no more than 30 to 120 days from start to finish. SpaceX’s record for Falcon 9 booster turnaround (the time between two launches) currently stands at 72 days for Block 4 hardware and 74 days for Block 5, meaning that the company could effectively need to simultaneously break its booster turnaround record three times in order to preserve a number of possible launch dates for both missions.

Look who was waving at passing planes over McGregor today!

A Falcon Heavy side booster on the McGregor test stand for a static fire test. pic.twitter.com/S7af6b0gHk

— NSF – NASASpaceflight.com (@NASASpaceflight) November 18, 2018

If it turns out that the USAF is unwilling to fly its first Falcon Heavy mission on all flight-proven boosters (a strong possibility), or that doing so was never the plan, STP-2’s claimed Q2 2019 target would likely have to slip by several months, further into 2019. This would afford SpaceX more time and resources to build an extra three new Falcon Heavy boosters (two sides, one center), each of which requires a bare minimum of several weeks of dedicated production time and months of lead time (at least for the center core), all while preventing or significantly slowing the completed production of other new Falcon boosters.

The exact state of SpaceX’s Falcon 9 and Heavy production is currently unknown, with indications that the company might be building or have already finished core number B1055 or higher, but it’s safe to say that there is not exactly a lot of slack in the production lines in the first half of 2019. Most important, SpaceX likely needs to begin production of the human-rated Falcon 9 boosters that will ultimately launch the company’s first two crewed Crew Dragons as early as June and August, respectively.

- Falcon Heavy is seen here lifting off during its spectacular launch debut. (SpaceX)

- LZ-1 and LZ-2, circa February 2018. (SpaceX)

- A Falcon Heavy side booster was spotted eastbound in Arizona on November 10th. (Reddit – beast-sam)

- The second Falcon Heavy booster in four weeks was spotted eastbound in Arizona by SpaceX Facebook group member Eric Schmidt on Dec. 3. (Eric Schmidt – Facebook)

- The second (and third) flight of Falcon Heavy is even closer to reality as a new side booster heads to Florida after finishing static fire tests in Texas. (Reddit /u/e32revelry)

- The next Falcon Heavy’s first side booster delivery was caught by several onlookers around December 21. (Instagram)

If the first Falcon 9 set to launch an uncrewed Crew Dragon (B1051) is anything to go by, each human-rated Falcon 9 is put through an exceptionally time-consuming and strenuous range of tests to satisfy NASA’s requirements, requiring a considerable amount of extra resources (infrastructure, staff, time) to be produced and readied for launch. B1051 likely spent 3+ months in McGregor, Texas undergoing checks and one or several static fire tests, whereas a more typical Falcon booster spends no more than 3-6 weeks at SpaceX’s test facilities before shipping to its launch pad.

Ultimately, time will tell which hurdle the company’s executives (and hopefully engineers) have selected for its next two Falcon Heavy launches: an extraordinary feat of Falcon reusability or a Tesla-reminiscent period of Falcon production hell.

News

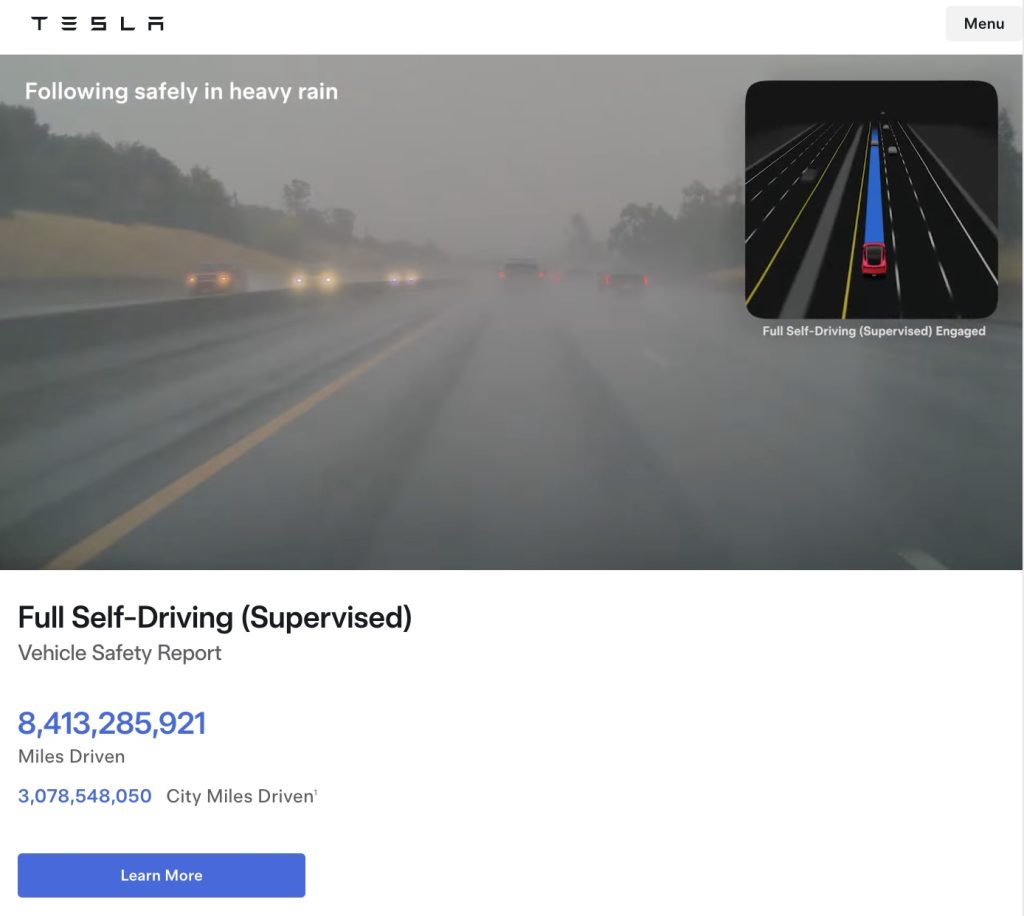

Tesla FSD (Supervised) fleet passes 8.4 billion cumulative miles

Tesla’s Full Self-Driving (Supervised) system has now surpassed 8.4 billion cumulative miles.

The figure appears on Tesla’s official safety page, which tracks performance data for FSD (Supervised) and other safety technologies.

Tesla has long emphasized that large-scale real-world data is central to improving its neural network-based approach to autonomy. Each mile driven with FSD (Supervised) engaged contributes additional edge cases and scenario training for the system.

The milestone also brings Tesla closer to a benchmark previously outlined by CEO Elon Musk. Musk has stated that roughly 10 billion miles of training data may be needed to achieve safe unsupervised self-driving at scale, citing the “long tail” of rare but complex driving situations that must be learned through experience.

The growth in cumulative FSD (Supervised) miles over the past five years has been steep.

As noted in data shared by Tesla watcher Sawyer Merritt, annual FSD (Supervised) miles have increased from roughly 6 million in 2021 to 80 million in 2022, 670 million in 2023, 2.25 billion in 2024, and 4.25 billion in 2025. In just the first 50 days of 2026, Tesla owners logged another 1 billion miles.

At the current pace, the fleet is on track to reach about 10 billion cumulative FSD (Supervised) miles this year. The increase has been driven by Tesla’s growing vehicle fleet, periodic free trials, and expanding Robotaxi operations, among other factors.
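As a rough check on that projection, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration using the rounded figures quoted above. It assumes the early-2026 pace of about 1 billion miles per 50 days stays constant, which is a simplification, and the function and constant names are made up for this example rather than taken from Tesla.

```python
# Approximate annual FSD (Supervised) miles, as quoted in the article.
ANNUAL_MILES = {
    2021: 6e6,
    2022: 80e6,
    2023: 670e6,
    2024: 2.25e9,
    2025: 4.25e9,
}
MILES_2026_SO_FAR = 1e9   # logged in the first 50 days of 2026
DAYS_ELAPSED_2026 = 50
CUMULATIVE_NOW = 8.4e9    # figure shown on Tesla's safety page
TARGET = 10e9             # benchmark attributed to Elon Musk


def sum_of_quoted_figures() -> float:
    """Add up the rounded yearly figures plus the 2026 miles so far."""
    return sum(ANNUAL_MILES.values()) + MILES_2026_SO_FAR


def daily_rate() -> float:
    """Average miles per day implied by the early-2026 figure."""
    return MILES_2026_SO_FAR / DAYS_ELAPSED_2026


def days_until_target(cumulative: float = CUMULATIVE_NOW,
                      target: float = TARGET) -> float:
    """Days to reach the target, assuming the daily rate stays constant."""
    return (target - cumulative) / daily_rate()


if __name__ == "__main__":
    print(f"Sum of quoted figures: {sum_of_quoted_figures() / 1e9:.3f} billion")
    print(f"Implied pace: {daily_rate() / 1e6:.0f} million miles per day")
    print(f"Days to 10 billion at that pace: {days_until_target():.0f}")
```

The rounded yearly figures add up to slightly less than the 8.4 billion total on Tesla's safety page. The quoted data does not account for the difference, so the sketch starts from the published total rather than the sum.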

With the fleet now past 8.4 billion cumulative miles, Tesla’s supervised system is approaching that threshold, even as regulatory approval for fully unsupervised deployment remains subject to further validation and oversight.

Elon Musk

Elon Musk fires back after Wikipedia co-founder claims neutrality and dubs Grokipedia “ridiculous”

Elon Musk fired back at Wikipedia co-founder Jimmy Wales after the longtime online encyclopedia leader dismissed xAI’s new AI-powered alternative, Grokipedia, as a “ridiculous” idea that is bound to fail.

Musk’s response to Wales’ comments, which were posted on social media platform X, was short and direct: “Famous last words.”

Wales made the comments while answering questions about Wikipedia’s neutrality, which he described as one of the site’s core values.

“One of our core values at Wikipedia is neutrality. A neutral point of view is non-negotiable. It’s in the community, unquestioned… The idea that we’ve become somehow ‘Wokepidea’ is just not true,” Wales said.

When asked about potential competition from Grokipedia, Wales downplayed the situation. “There is no competition. I don’t know if anyone uses Grokipedia. I think it is a ridiculous idea that will never work,” Wales wrote.

After Grokipedia went live, Larry Sanger, also a co-founder of Wikipedia, wrote on X that his initial impression of the AI-powered Wikipedia alternative was “very OK.”

“My initial impression, looking at my own article and poking around here and there, is that Grokipedia is very OK. The jury’s still out as to whether it’s actually better than Wikipedia. But at this point I would have to say ‘maybe!’” Sanger stated.

Musk responded to Sanger’s assessment by saying it was “accurate.” In a separate post, he added that even in its V0.1 form, Grokipedia was already better than Wikipedia.

During a past appearance on the Tucker Carlson Show, Sanger argued that Wikipedia has drifted from its original vision, citing concerns about how its “Reliable sources/Perennial sources” framework categorizes publications by perceived credibility. According to Sanger, the list leans heavily left, with conservative publications effectively blacklisted in favor of their more liberal counterparts.

As of writing, Grokipedia has reportedly surpassed 80% of English Wikipedia’s article count.

News

Tesla Sweden appeals after grid company refuses to restore existing Supercharger due to union strike

Tesla Sweden is seeking regulatory intervention after a Swedish power grid company refused to reconnect an already operational Supercharger station in Åre due to ongoing union sympathy actions.

The charging site was previously functioning before it was temporarily disconnected in April last year for electrical safety reasons. A temporary construction power cabinet supplying the station had fallen over, described by Tesla as occurring “under unclear circumstances.” The power was then cut at the request of Tesla’s installation contractor to allow safe repair work.

While the safety issue was resolved, the station has not been brought back online. Stefan Sedin, CEO of Jämtkraft elnät, told Dagens Arbete (DA) that power will not be restored to the existing Supercharger station as long as the electric vehicle maker’s union issues are ongoing.

“One of our installers noticed that the construction power had been backed up and was on the ground. We asked Tesla to fix the system, and their installation company in turn asked us to cut the power so that they could do the work safely.

“When everything was restored, the question arose: ‘Wait a minute, can we reconnect the station to the electricity grid? Or what does the notice actually say?’ We consulted with our employer organization, who were clear that as long as sympathy measures are in place, we cannot reconnect this facility,” Sedin said.

The union’s sympathy actions, which began in March 2024, apply to work involving “planning, preparation, new connections, grid expansion, service, maintenance and repairs” of Tesla’s charging infrastructure in Sweden.

Tesla Sweden has argued that reconnecting an existing facility is not equivalent to establishing a new grid connection. In a filing to the Swedish Energy Market Inspectorate, the company stated that reconnecting the installation “is therefore not covered by the sympathy measures and cannot therefore constitute a reason for not reconnecting the facility to the electricity grid.”

Sedin, for his part, noted that Tesla’s issue with the Supercharger is highly unusual. He added that Jämtkraft elnät itself has no conflict with Tesla, and that its actions are based on the unions’ sympathy measures against the electric vehicle maker.

“This is absolutely the first time that I have been involved in matters relating to union conflicts or sympathy measures. That is why we have relied entirely on the assessment of our employer organization. This is not something that we have made any decisions about ourselves at all.

“It is not that Jämtkraft elnät has a conflict with Tesla, but our actions are based on these sympathy measures. Should it turn out that we have made an incorrect assessment, we will correct ourselves. It is no more difficult than that for us,” the executive said.