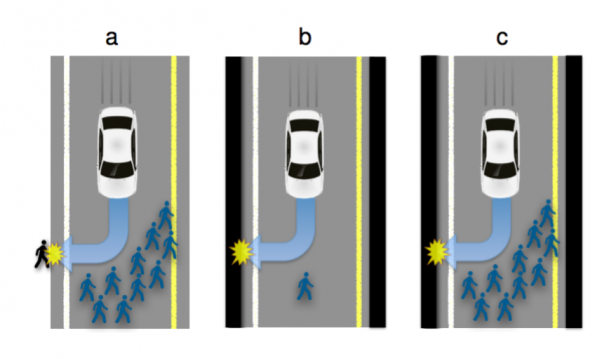

Fast forward a few years: your self-driving Tesla rounds a corner and comes upon 10 pedestrians in the road ahead. There are walls on either side of the road that will kill or seriously injure you if your car crashes into them. How should the autonomous driving algorithm handle that situation?

Three researchers (Jean-Francois Bonnefon of the Department of Management Research at the Toulouse School of Economics, Azim Shariff of the Department of Psychology at the University of Oregon, and Iyad Rahwan of the Media Laboratory at the Massachusetts Institute of Technology) posed that question to hundreds of people using Amazon’s Mechanical Turk, an online crowdsourcing tool.

According to a report published on arXiv, most people are willing to sacrifice the driver in order to save the lives of others. Of the respondents, 75% thought the best ethical solution was to swerve, but only 65% thought cars would actually be programmed to do so. Not surprisingly, the number of people who said the car should swerve dropped dramatically when they were asked to picture themselves behind the wheel rather than some faceless stranger.

“On a scale from -50 (protect the driver at all costs) to +50 (maximize the number of lives saved), the average response was +24,” the researchers wrote. “Results suggest that participants were generally comfortable with utilitarian autonomous vehicles (AVs), programmed to minimize an accident’s death toll,” according to a report on IFL Science.

The legal issues presented by this research could not be more complex. In theory, legislators and regulators could require that autonomous driving algorithms include an unemotional “greater good” ethical component. But what if those laws or regulations allow manufacturers to offer various levels of ethical behavior? If a buyer knowingly chooses an option that provides less protection for innocent bystanders, will that same buyer then be legally responsible for what the car’s software decides to do?

“It is a formidable challenge to define the algorithms that will guide AVs confronted with such moral dilemmas,” the researchers wrote. “We argue to achieve these objectives, manufacturers and regulators will need psychologists to apply the methods of experimental ethics to situations involving AVs and unavoidable harm.”

An article in the MIT Technology Review entitled “Why Self-Driving Cars Must Be Programmed to Kill” argues that the very fact that self-driving cars are inherently safer than human drivers creates a new dilemma. “If fewer people buy self-driving cars because they are programmed to sacrifice their owners, then more people are likely to die because ordinary cars are involved in so many more accidents,” the MIT article says. “The result is a Catch-22 situation.”

In the summary of their study, the researchers argue, “Figuring out how to build ethical autonomous machines is one of the thorniest challenges in artificial intelligence today. As we are about to endow millions of vehicles with autonomy, taking algorithmic morality seriously has never been more urgent.”
