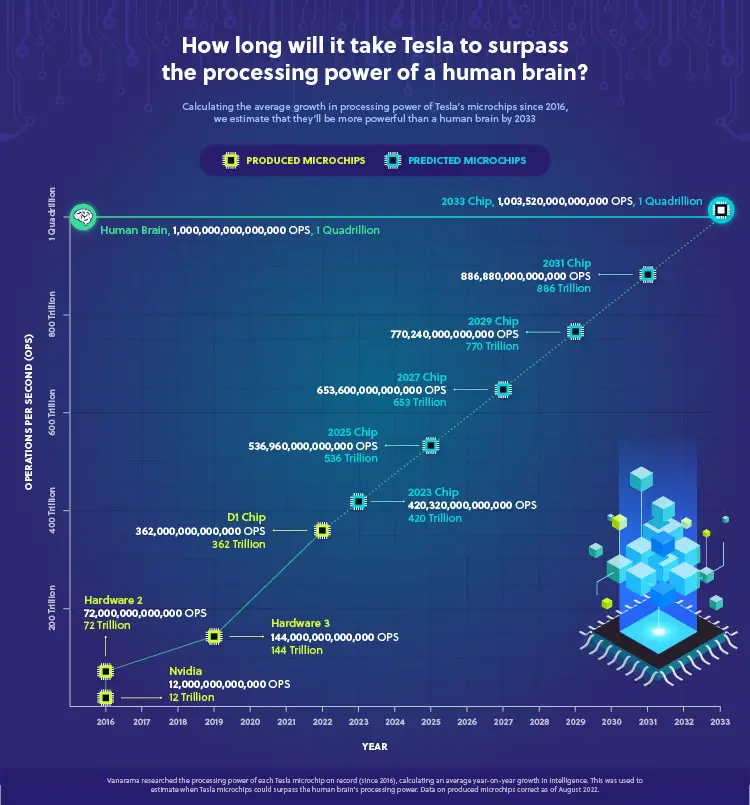

Tesla cars will be smarter than humans by 2033, according to a new study by car and van leasing company, Vanarama. Vanarama performed an analysis of the processing power of Tesla’s microchips to forecast how many years it will take to be on par with the human brain.

The study looked into the processing power of Tesla’s “own AI brain” and compared it with its predecessors and the human brain. Some of the key findings include:

- Tesla’s microchips will top the human brain (one quadrillion operations per second) in only 11 years (10.94), by 2033.

- Tesla’s microchip capability is increasing at a rate of 486% per year.

- Tesla would take 17 years to reach the level of a mature human brain – eight years quicker than we manage (25 years for human brain maturity).

- Tesla’s D1 chip is 30 times more powerful than the chip they used only six years ago.

Vanarama found that Tesla’s microchip capability is increasing at a rate of 486% per year. The first chip it looked at was a 2016 NVIDIA component that managed 12 trillion operations per second, which is the measure of a computer’s processing power. Tesla’s latest D1 chip managed 362 trillion.

“At that rate, Tesla’s self-driving AI chip will top the human brain (one quadrillion operations per second) in only 11 years (10.94), by 2033,” Vanarama noted.

The company further explained that, measured from the first NVIDIA chip it analyzed, the growth rate shows Tesla would take 17 years to reach the level of a mature human brain. This is eight years faster than humans reach brain maturity, which typically happens at 25 years of age.

Tesla D1 chip 30X more powerful than a chip they used 6 years ago

Tesla’s D1 chip was unveiled during AI Day last year and was designed for the Dojo supercomputer. Tesla recently shared a fresh look at the microarchitecture of the Dojo supercomputer when it gave a presentation in New Orleans.

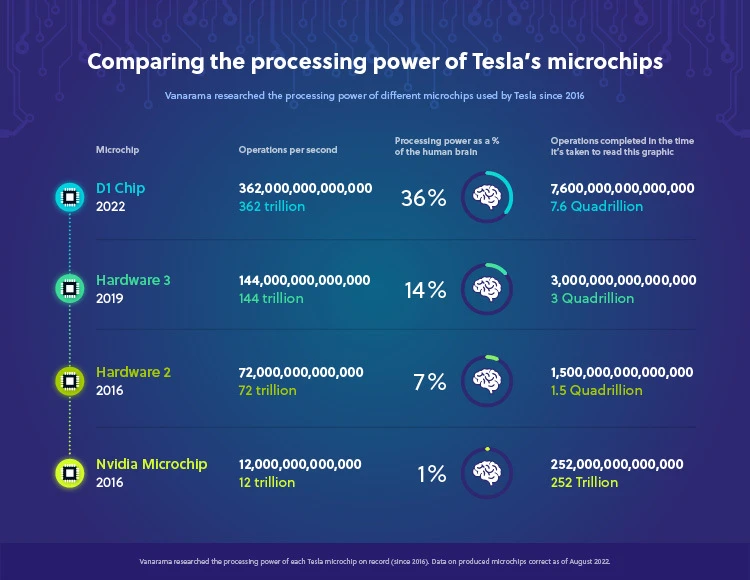

This year, Tesla will hold another AI Day event and it’s expected to release the new D1 chip and other interesting things such as a working prototype of the Optimus Bot. Vanarama took note of the D1 chip’s processing power and said that it was a “considerable increase in computing intelligence from the previous chip, Hardware 3, which performed 144 trillion operations per second in 2019. Before that, it was the Hardware 2 on 72 trillion, and the Nvidia chip on 12 trillion.”
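To put those four figures side by side, here is a minimal Python sketch that computes how much each chip improves on the one before it, plus the overall jump from the 2016 NVIDIA part to the D1. The throughput numbers are the ones quoted in this article; the dictionary labels and variable names are chosen for illustration only.

```python
# Throughput figures as quoted in the article (trillions of operations per second).
# The dictionary keys are labels chosen for this sketch, not official product names.
chips = {
    "NVIDIA (2016)": 12,
    "Hardware 2": 72,
    "Hardware 3": 144,
    "D1": 362,
}

names = list(chips)

# Multiple of each chip over the one immediately before it.
step_multiples = {
    names[i]: chips[names[i]] / chips[names[i - 1]]
    for i in range(1, len(names))
}

# Multiple of the newest chip over the oldest.
overall_multiple = chips[names[-1]] / chips[names[0]]

for name, multiple in step_multiples.items():
    print(f"{name}: {multiple:.2f}x the previous chip")
print(f"D1 vs. NVIDIA (2016): {overall_multiple:.1f}x")
```

The last line comes out at roughly 30x, which lines up with the study's "30 times more powerful" claim.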

The Dojo ExaPOD supercomputer will use a total of 3,000 D1 chips, which will make the system capable of just over one quintillion operations per second. For perspective, that number is written out as 1,086,000,000,000,000,000.
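As a quick sanity check, the ExaPOD total can be divided by the D1's per-chip figure of 362 trillion operations per second to see how many chips the two numbers imply. This is only a sketch using the figures quoted in this article, and the constant names are made up for the example.

```python
# Figures as quoted in the article; constant names are illustrative.
EXAPOD_OPS_PER_SEC = 1_086_000_000_000_000_000  # quoted system total
D1_OPS_PER_SEC = 362 * 10**12                   # 362 trillion per chip

# Integer division avoids floating-point rounding at this magnitude.
implied_chip_count, remainder = divmod(EXAPOD_OPS_PER_SEC, D1_OPS_PER_SEC)
print(implied_chip_count, remainder)  # prints: 3000 0
```

The total divides evenly, so the quoted system figure corresponds to exactly 3,000 chips at the D1's quoted throughput.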

A glimpse of the future for AI chips

Take a look at the graphic above comparing the processing power of Tesla’s microchips. Vanarama said that in the time it takes to read it, Tesla’s microchips would have completed up to 7.6 quadrillion operations each.

“It wouldn’t be crazy to believe that tech will become significantly smarter than humans in our lifetime. Microchips are currently capable of working the way brain synapses do, with researchers developing chips that are inspired by the way the brain operates.”

You can learn more about Vanarama’s research here.

Note: Johnna is a Tesla shareholder and supports its mission.

Your feedback is important. If you have any comments, or concerns, or see a typo, you can email me at johnna@teslarati.com. You can also reach me on Twitter @JohnnaCrider1

News

Tesla wins another award critics will absolutely despise

Tesla just won another award that critics will absolutely despise, as it has been recognized once again as the company with the most sustainable supply chain.

Tesla has once again proven its critics wrong, securing the number one spot on the 2026 Lead the Charge Auto Supply Chain Leaderboard for the second consecutive year, Lead the Charge rankings show.

NEWS: Tesla ranked 1st on supply chain sustainability in the 2026 Lead the Charge auto/EV supply chain scorecard.

“@Tesla remains the top performing automaker of the Leaderboard for the second year running, and increased its overall score by 6 percentage points, while Ford only… pic.twitter.com/nAgGOIrGFS

— Sawyer Merritt (@SawyerMerritt) March 4, 2026

This independent ranking, produced by a coalition of environmental, human rights, and investor groups including the Sierra Club, Transport & Environment, and others, evaluates 18 major automakers on their efforts to build equitable, sustainable, and fossil-free supply chains for electric vehicles.

Tesla earned an overall score of 49 percent, up 6 percentage points from the previous year, widening its lead over second-place Ford (45 percent, up 2 points) to a commanding 4-percentage-point gap. The company also excelled in the Fossil Free & Environment category with a 50 percent score, reflecting strong progress in reducing emissions and decarbonizing operations.

Perhaps the most impressive achievement came in the batteries subsection, where Tesla posted a massive +20-point jump to reach 51 percent, becoming the first automaker ever to surpass 50 percent in this critical area.

Tesla achieved this milestone through transparency, fully disclosing Scope 3 emissions breakdowns for battery cell production and key materials like lithium, nickel, cobalt, and graphite.

The company also requires suppliers to conduct due diligence aligned with OECD guidelines on responsible sourcing, which it has mentioned in past Impact Reports.

While Tesla leads comfortably in climate and environmental performance, it scores 48 percent in human rights and responsible sourcing, slightly behind Ford’s 49 percent.

The company made notable gains in workers’ rights remedies, but has room to improve on issues like Indigenous Peoples’ rights.

Overall, the leaderboard highlights that a core group of leaders, Tesla, Ford, Volvo, Mercedes, and Volkswagen, are advancing twice as fast as their peers, proving that cleaner, more ethical EV supply chains are not just possible but already underway.

For Tesla detractors who claim EVs aren’t truly green or that the company cuts corners, this recognition from sustainability-focused NGOs delivers a powerful rebuttal.

Tesla’s vertical integration, direct supplier contracts, low-carbon material agreements (like its North American aluminum deal with emissions under 2kg CO₂e per kg), and raw materials reporting continue to set the industry standard.

As the world races toward electrification, Tesla isn’t just building cars; it’s building a more responsible future.

News

Tesla Full Self-Driving likely to expand to yet another Asian country

Tesla Full Self-Driving is likely to expand to yet another Asian country, with Japan looking primed to receive the suite for the first time.

The launch of Full Self-Driving in yet another country this year would be a major breakthrough for Tesla as it continues to expand the driver-assistance program across the world. Bureaucratic red tape has held up a lot of its efforts, but things are looking up in some regions.

Tesla is poised to transform Japan’s roads with Full Self-Driving (FSD) technology by 2026.

Richi Hashimoto, president of Tesla’s Japanese subsidiary, announced the ambitious timeline, building on successful employee test drives that began in 2025 and earned positive media reviews. Test drives, initially limited to the Model 3 since August 2025, expanded to the Model Y on March 5.

Once regulators approve, Over-the-Air (OTA) software updates could activate FSD across roughly 40,000 Teslas already on Japanese roads. Japan’s orderly traffic and strict safety culture make it an ideal testing ground for autonomous driving.

Hashimoto said:

“We are aiming for implementation in 2026. [We are] doing everything in our power [to achieve this].”

The push aligns with Hashimoto’s leadership, which has been credited with Tesla’s sales turnaround in Japan.

In 2025, Tesla delivered a record 10,600 vehicles in Japan — a nearly 90% jump from the prior year and the first time exceeding 10,000 units annually.

BREAKING 🇯🇵 FSD IS LIKELY LAUNCHING IN JAPAN IN 2026 🚨

Richi Hashimoto, President of Tesla’s Japanese subsidiary, stated: “We are aiming for implementation in 2026” and added that they are “doing everything in our power” to achieve this 🔥

Test drives in Japan began in August… pic.twitter.com/jkkrJLszXN

— Ming (@tslaming) March 5, 2026

The strategy shifted from online-only sales to adding 29 physical showrooms in high-traffic malls, plus staff training and attractive financing offers launched in January 2026. Tesla also plans to expand its Supercharger network to over 1,000 points by 2027, boosting accessibility.

This Japanese momentum reflects Tesla’s broader international expansion. In Europe, Giga Berlin produced more than 200,000 vehicles in 2025 despite a temporary halt, supplying over 30 markets with plans for sequential production growth in 2026 and battery cell manufacturing by 2027.

While regional EV sales faced headwinds, the factory remains a cornerstone for Model Y deliveries across the continent.

In Asia, Giga Shanghai continues to be recognized as Tesla’s powerhouse. China, the company’s largest market, saw January 2026 deliveries from the plant rise 9 percent year-over-year to 69,129 units, with affordable new models expected later this year.

FSD advancements, already progressing in the U.S. and South Korea, are slated for Europe and further Asian rollout, complementing plans to expand Cybercab and Optimus to new markets as well.

With OTA-enabled autonomy on the horizon and retail strategies paying dividends, Tesla is strengthening its footprint from Tokyo showrooms to Berlin assembly lines and Shanghai exports. As Hashimoto continues to push Tesla forward in Japan, the company’s global vision for sustainable, self-driving mobility gains traction across Europe and Asia.

News

Tesla ships out update that brings massive change to two big features

Tesla has shipped out an update for its vehicles that was prompted specifically by a California lawsuit, which threatened the company’s ability to sell cars because of how it named its driver assistance suite.

Tesla shipped out Software Update 2026.2.9 starting last week; we received it already, and it only brings a few minor changes, mostly related to how things are referenced.

“This change only updates the name of certain features and text in your vehicle,” the company wrote in Release Notes for the update, “and does not change the way your features behave.”

The following changes came to Tesla vehicles in the update:

- Navigate on Autopilot has now been renamed to Navigate on Autosteer

- FSD Computer has been renamed to AI Computer

Tesla faced a 30-day sales suspension in California after the state’s Department of Motor Vehicles stated the company had to come into compliance regarding the marketing of its automated driving features.

The agency confirmed on February 18 that Tesla had taken a “corrective action” to resolve the issue. That corrective action was renaming certain parts of its ADAS.

Tesla discontinued its standalone Autopilot offering in January and ramped up the marketing of Full Self-Driving Supervised. Tesla had said on X that the issue with naming “was a ‘consumer protection’ order about the use of the term ‘Autopilot’ in a case where not one single customer came forward to say there’s a problem.”

This was a “consumer protection” order about the use of the term “Autopilot” in a case where not one single customer came forward to say there’s a problem.

Sales in California will continue uninterrupted.

— Tesla North America (@tesla_na) December 17, 2025

Tesla is now compliant with the wishes of the California DMV, and we’re all dealing with the renamed features now.

This was the first major dispute over the terminology of Full Self-Driving, but the naming has also faced scrutiny at the federal level, as some government officials have claimed the suite has “deceptive” names. Former Transportation Secretary Pete Buttigieg was one of those federal officials who had an issue with the names “Autopilot” and “Full Self-Driving.”

Tesla sued the California DMV over the ruling last week.