News

Stanford studies human impact when self-driving car returns control to driver

Researchers at Stanford University’s Dynamic Design Lab (DDL) have completed a study that examines how human drivers respond when an autonomous driving system returns control of a car to them. The Lab’s mission, according to its website, is to “study the design and control of motion, especially as it relates to cars and vehicle safety. Our research blends analytical approaches to vehicle dynamics and control together with experiments in a variety of test vehicles and a healthy appreciation for the talents and demands of human drivers.” The results of the study were published on December 6 in the inaugural issue of the journal Science Robotics.

Holly Russell, lead author of the study and a former graduate student at the Dynamic Design Lab, says, “Many people have been doing research on paying attention and situation awareness. That’s very important. But, in addition, there is this physical change and we need to acknowledge that people’s performance might not be at its peak if they haven’t actively been participating in the driving.”

The report emphasizes that the DDL’s autonomous driving program is its own proprietary system and is not intended to mimic any particular autonomous driving system currently available from any automobile manufacturer, such as Tesla’s Autopilot.

The study found that the period known as “the handoff,” when the computer returns control of a car to a human driver, can be particularly risky, especially if the vehicle’s speed has changed since the person last had direct control. The amount of steering input required to control a vehicle accurately varies with speed: greater input is needed at slower speeds, while less movement of the wheel is required at higher speeds.

People learn through experience how to steer accurately at all speeds. But when drivers have not been directly involved in steering for some time, the researchers found, they need a brief period of adjustment before they can steer accurately again. The greater the speed change while the computer was in control, the more erratic the drivers’ steering inputs were upon resuming control.

“Even knowing about the change, being able to make a plan and do some explicit motor planning for how to compensate, you still saw a very different steering behavior and compromised performance,” said Lene Harbott, co-author of the research and a research associate in the Revs Program at Stanford.

Handoff From Computer to Human

The testing was done on a closed course. Participants drove for 15 seconds on a course that included a straightaway and a lane change. They then took their hands off the wheel, and the car took over, bringing them back to the start. After the drivers had completed four familiarization laps, the researchers altered the cars’ steering ratio at the start of the next lap. The changes were designed to mimic the different steering inputs required at different speeds. The drivers then went around the course 10 more times.
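
To make the steering-ratio manipulation concrete, here is a minimal Python sketch. It assumes the textbook definition of steering ratio (handwheel angle divided by road-wheel angle) and uses hypothetical 15:1 and 20:1 ratios and a hypothetical 2-degree target; the study's actual ratios and vehicle model are not reported here. It shows why a driver who keeps applying a habitual input undershoots after the ratio changes.

```python
def road_wheel_angle(handwheel_deg: float, steering_ratio: float) -> float:
    """Road-wheel angle (degrees) produced by a given handwheel angle."""
    return handwheel_deg / steering_ratio


def required_handwheel(target_road_wheel_deg: float, steering_ratio: float) -> float:
    """Handwheel angle (degrees) needed to reach a target road-wheel angle."""
    return target_road_wheel_deg * steering_ratio


# Hypothetical values, not from the study: the driver has learned a 15:1 ratio,
# and the test switches the car to 20:1 without changing the driver's habits.
LEARNED_RATIO = 15.0
ALTERED_RATIO = 20.0
target = 2.0  # road-wheel angle (degrees) the maneuver needs

learned_input = required_handwheel(target, LEARNED_RATIO)  # habitual handwheel input
actual = road_wheel_angle(learned_input, ALTERED_RATIO)     # what the car now does
needed = required_handwheel(target, ALTERED_RATIO)          # input after adaptation

print(f"habitual input {learned_input:.1f} deg -> {actual:.2f} deg at wheels (wanted {target:.2f})")
print(f"adapted input needed: {needed:.1f} deg")
```

In this toy model the fix is a single multiplication, but the study's point is that drivers only converge on the new input through practice, and must readapt when the original ratio returns.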

Even though they were notified of the change to the steering ratio, the drivers’ steering during those 10 laps differed significantly from their steering before the modification. At the end, the steering ratio was returned to its original setting and the drivers completed 6 more laps around the course. Again, the researchers found that the drivers needed a period of adjustment to steer accurately.

The DDL experiment is very similar to a classic neuroscience experiment that assesses motor adaptation. In one version, participants use a hand control to move a cursor on a screen to specific points. The way the cursor moves in response to their control is adjusted during the experiment and they, in turn, change their movements to make the cursor go where they want it to go.

Just as in the driving test, participants in this experiment have to adjust to changes in how the controller moves the cursor, and they must adjust a second time when the original relationship is restored. People can try a version of this experiment themselves by changing the cursor speed on their personal computers.

“Even though there are really substantial differences between these classic experiments and the car trials, you can see this basic phenomena of adaptation and then after-effect of adaptation,” says Ilana Nisky, another co-author of the study and a senior lecturer at Ben-Gurion University in Israel. “What we learn in the laboratory studies of adaptation in neuroscience actually extends to real life.”

In neuroscience this is explained as a difference between explicit and implicit learning, Nisky explains. Even when a person is aware of a change, their implicit motor control is unaware of what that change means and can only figure out how to react through experience.

Federal and state regulators are currently working on guidelines that will apply to Level 5 autonomous cars. What the Stanford research shows is that until full autonomy becomes a reality, the handoff moment will represent a period of special risk, not because of any failing on the part of computers but because of limitations inherent in the brains of human drivers.

The best way to protect ourselves from that risk is to eliminate the handoff entirely by ceding total control of driving to computers as soon as possible.

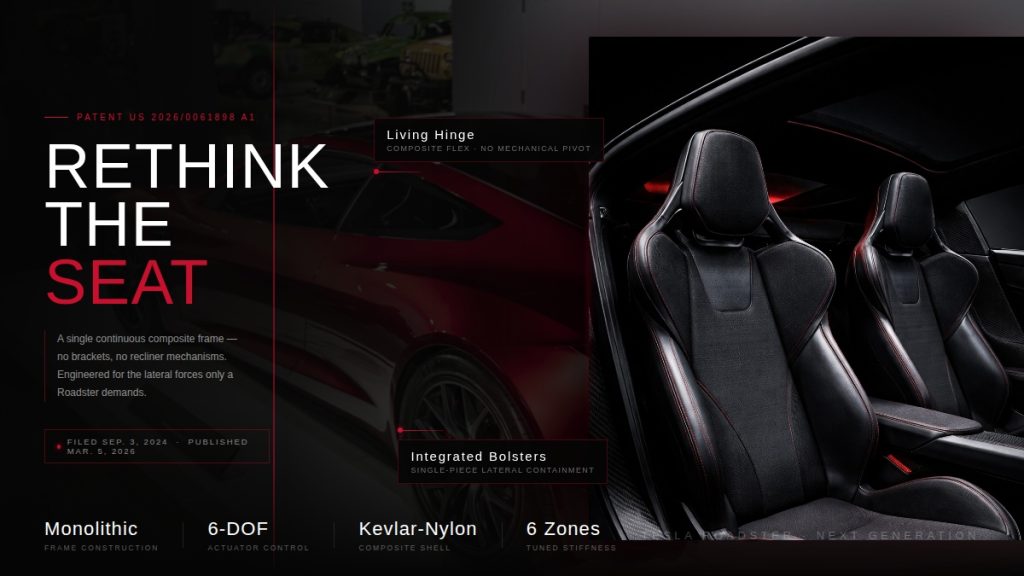

Tesla Roadster patent hints at radical seat redesign ahead of reveal

A newly published Tesla patent could offer one of the clearest signals yet that the long-awaited next-generation Roadster is nearly ready for its public debut.

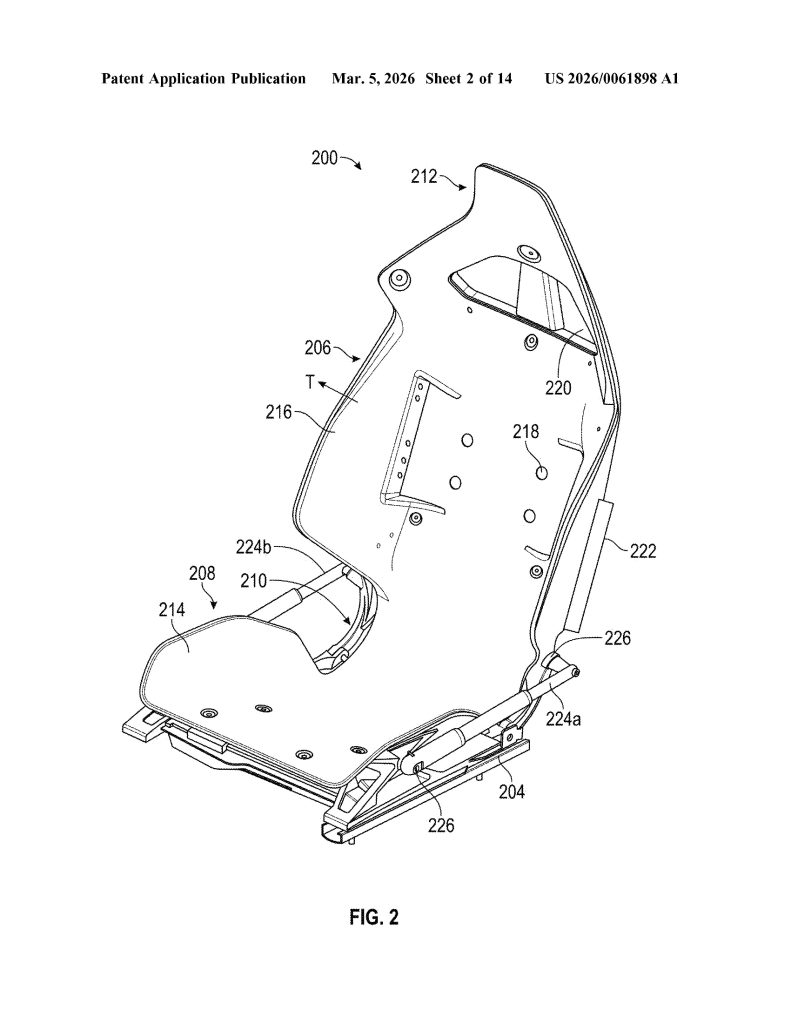

Patent No. US 20260061898 A1, published on March 5, 2026, describes a “vehicle seat system” built around a single continuous composite frame – a dramatic departure from the dozens of metal brackets, recliner mechanisms, and rivets that make up a traditional car seat. Tesla is calling it a monolithic structure, with the seat portion, backrest, headrest, and bolsters all thermoformed as one unified piece.

The approach mirrors Tesla’s broader manufacturing philosophy. The same company that pioneered massive aluminum castings to eliminate hundreds of body components is now applying that logic to the cabin. Fewer parts mean fewer potential failure points, less weight, and a cleaner assembly process overall.

The timing of the filing is difficult to ignore. Elon Musk has publicly targeted April 1, 2026 as the date for an “unforgettable” Roadster design reveal, and two new Roadster trademarks were filed just last month. Now a patent has surfaced describing a seat architecture suited to a hypercar that Tesla has promised will hit 60 mph in under two seconds.

The Roadster, originally unveiled in 2017, has been one of Tesla’s most anticipated yet most delayed products. With a target price around $200,000 and engineering ambitions to match, it is being positioned as the ultimate showcase for what Tesla’s technology can do.

The patent was first flagged by @seti_park on X.

Tesla Roadster Monolithic Seat: Feature Highlights via US Patent 20260061898 A1

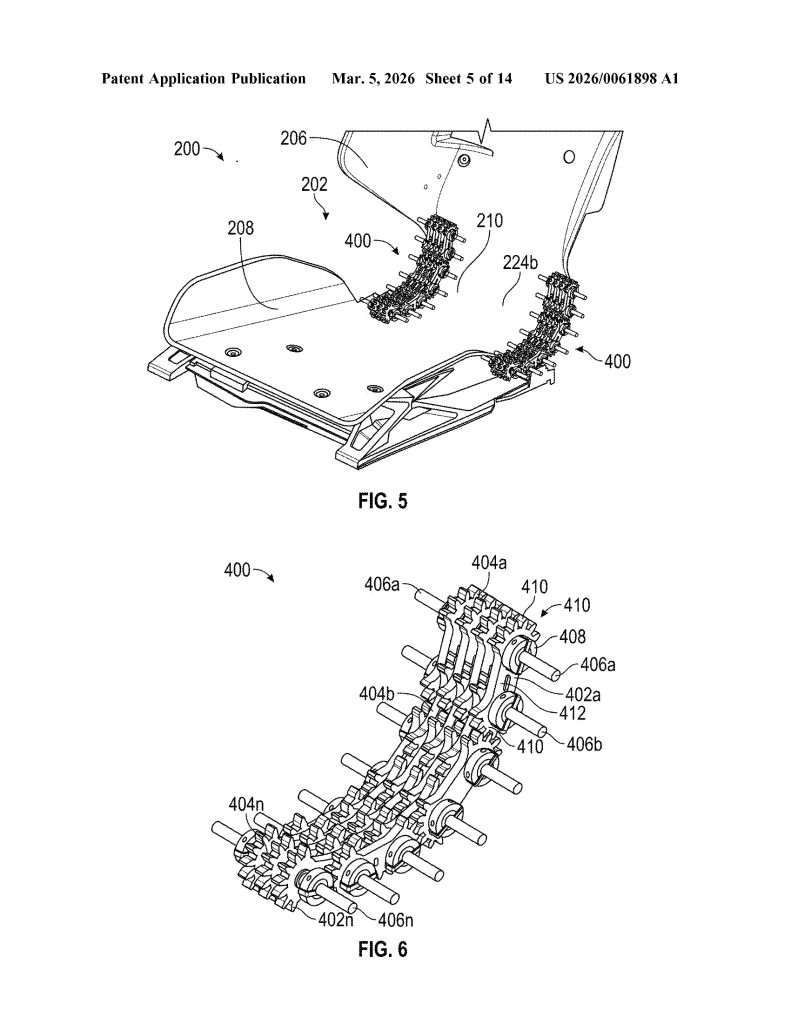

- Single Continuous Frame (Monolithic Construction). The core invention is a seat assembly built from one continuous frame that integrates the seat portion, backrest portion, and hinge into a single component — eliminating the need for separate structural parts and mechanical joints typical in conventional seats.

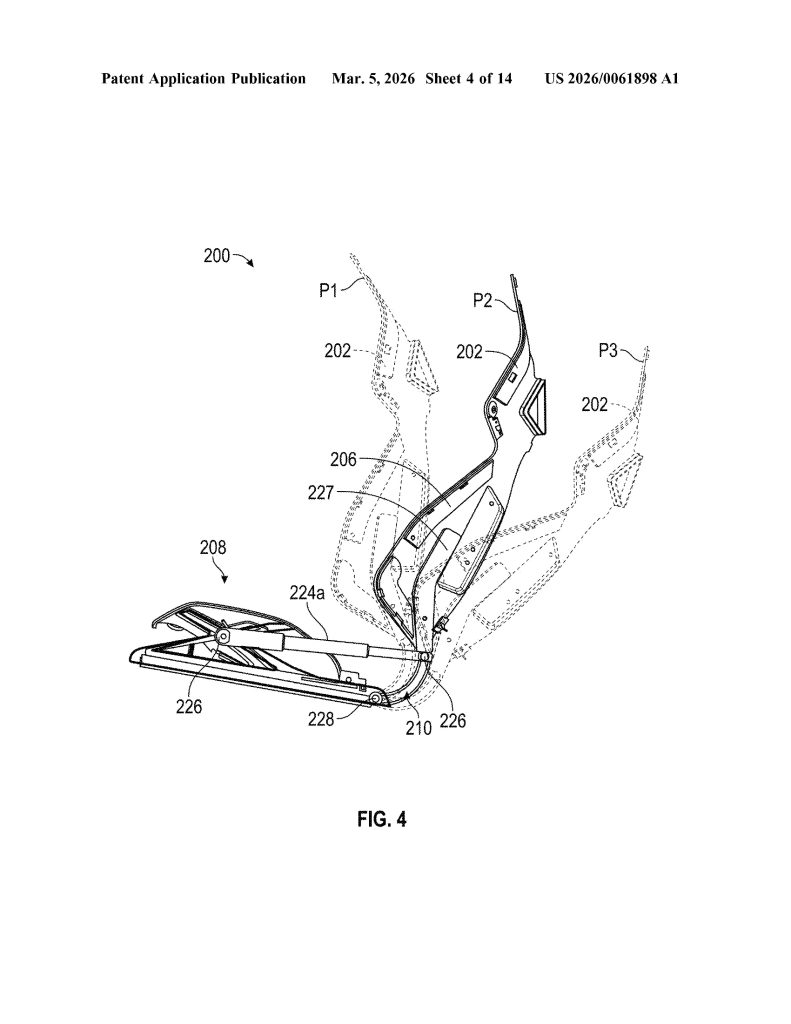

- Integrated Flexible Hinge. Rather than a traditional mechanical recliner, the hinge is built directly into the continuous frame and is designed to flex, allowing the backrest to move relative to the seat portion. The hinge can be implemented as a fiber composite leaf spring or an assembly of rigid linkages.

- Thermoformed Anisotropic Composite Material. The continuous frame is manufactured via thermoforming from anisotropic composite materials, including fiberglass-nylon, fiberglass-polymer, nylon carbon composite, Kevlar-nylon, or Kevlar-polymer composites, enabling a molded-to-shape monolithic structure.

- Regionally Tuned Stiffness Zones. The frame is engineered with up to six distinct stiffness regions (R1–R6) across the seat, backrest, hinge, headrest, and bolsters. Each zone can have a different stiffness, allowing precise ergonomic and structural tuning without adding separate components.

- Linkage Assembly Hinge Mechanism. The hinge incorporates one or more linkage assemblies consisting of multiple interlocking links with gears, connected by rods. When driven by motors or actuators, these linkages act as a flexible member to control backrest movement along a precise, ergonomically optimized trajectory.

- Multi-Actuator Six-Degree-of-Freedom Positioning System. The seat uses four distinct actuator pairs, all controlled by a central controller. These actuators work in coordinated combinations to achieve fore/aft, height, cushion tilt, and backrest rotation adjustments simultaneously.

- ECU-Based Controller Architecture. An Electronic Control Unit (ECU) and programmable controller manage all seat actuators, receive user input via a user interface (touchscreen, buttons, or switches), and incorporate sensor feedback to confirm and maintain desired seat positions, essentially making this a software-driven seat system.

- Airbag-Integrated Bolster Deployment System. The backrest bolsters (216) are geometrically shaped and sized to guide airbag deployment along a specific, pre-configured trajectory. Left and right bolsters can have different shapes so that each guides its respective airbag along a distinct trajectory, improving occupant protection.

- Ventilation Holes Formed into the Backrest. The continuous frame includes one or more ventilation holes formed directly into the backrest portion, configured to either receive airflow into or deliver airflow from the seat frame — enabling passive or active thermal comfort without requiring separate ventilation components.

- Soft Trim Recess for Tool-Free Integration. The headrest and backrest portions together define a molded recess, specifically designed to receive and secure a soft trim component (foam, fabric, or cushioning) directly into the continuous frame, eliminating the need for separate attachment hardware and simplifying final assembly.

Elon Musk’s xAI plans $659M expansion at Memphis supercomputer site

Elon Musk’s artificial intelligence company xAI has filed a permit to construct a new building at its growing data center complex outside Memphis, Tennessee.

According to a report from Data Center Dynamics, xAI plans to spend about $659 million on a new facility adjacent to its Colossus 2 data center. Permit documents submitted to the Memphis and Shelby County Division of Planning and Development show the proposed structure would be a four-story building totaling about 312,000 square feet.

The new building is planned for a 79-acre parcel located at 5414 Tulane Road, next to xAI’s Colossus 2 data center site. Permit filings indicate the structure would reach roughly 75 feet high, though the specific function of the building has not been disclosed.

The filing was first reported by the Memphis Business Journal.

xAI uses its Memphis data centers to power Grok, the company’s flagship large language model. The company entered the Memphis area in 2024, launching its Colossus supercomputer in a repurposed Electrolux factory located in the Boxtown district.

The company later acquired land for the Colossus 2 data center in March last year. That facility came online in January.

A third data center is also planned for the cluster across the Tennessee–Mississippi border. Musk has stated that the broader campus could eventually provide access to about 2 gigawatts of compute power.

The Memphis cluster is also tied to new power infrastructure commitments announced by SpaceX President Gwynne Shotwell. During a White House event with United States President Donald Trump, Shotwell stated that xAI would develop 1.2 gigawatts of power for its supercomputer facility as part of the administration’s “Ratepayer Protection Pledge.”

“As you know, xAI builds huge supercomputers and data centers and we build them fast. Currently, we’re building one on the Tennessee-Mississippi state line… xAI will therefore commit to develop 1.2 GW of power as our supercomputer’s primary power source. That will be for every additional data center as well…

“The installation will provide enough backup power to power the city of Memphis, and more than sufficient energy to power the town of Southaven, Mississippi where the data center resides. We will build new substations and invest in electrical infrastructure to provide stability to the area’s grid,” Shotwell said.

Shotwell also stated that xAI plans to support the region’s water supply through new infrastructure tied to the project. “We will build state-of-the-art water recycling plants that will protect approximately 4.7 billion gallons of water from the Memphis aquifer each year. And we will employ thousands of American workers from around the city of Memphis on both sides of the TN-MS border,” she said.

Tesla wins another award critics will absolutely despise

Tesla just won another award that critics will absolutely despise, as it has been recognized once again as the company with the most sustainable supply chain.

Tesla has once again proven its critics wrong, securing the number one spot on the 2026 Lead the Charge Auto Supply Chain Leaderboard for the second consecutive year, Lead the Charge rankings show.

NEWS: Tesla ranked 1st on supply chain sustainability in the 2026 Lead the Charge auto/EV supply chain scorecard.

“@Tesla remains the top performing automaker of the Leaderboard for the second year running, and increased its overall score by 6 percentage points, while Ford only… pic.twitter.com/nAgGOIrGFS

— Sawyer Merritt (@SawyerMerritt) March 4, 2026

This independent ranking, produced by a coalition of environmental, human rights, and investor groups including the Sierra Club, Transport & Environment, and others, evaluates 18 major automakers on their efforts to build equitable, sustainable, and fossil-free supply chains for electric vehicles.

Tesla earned an overall score of 49 percent, up 6 percentage points from the previous year, widening its lead over second-place Ford (45 percent, up 2 points) to a commanding 4-percentage-point gap. The company also excelled in the Fossil Free & Environment category with a 50 percent score, reflecting strong progress in reducing emissions and decarbonizing operations.

Perhaps the most impressive achievement came in the batteries subsection, where Tesla posted a massive +20-point jump to reach 51 percent, becoming the first automaker ever to surpass 50 percent in this critical area.

Tesla achieved this milestone through transparency, fully disclosing Scope 3 emissions breakdowns for battery cell production and key materials like lithium, nickel, cobalt, and graphite.

The company also requires suppliers to conduct due diligence aligned with OECD guidelines on responsible sourcing, which it has mentioned in past Impact Reports.

While Tesla leads comfortably in climate and environmental performance, it scores 48 percent in human rights and responsible sourcing, slightly behind Ford’s 49 percent.

The company made notable gains in workers’ rights remedies, but has room to improve on issues like Indigenous Peoples’ rights.

Overall, the leaderboard highlights that a core group of leaders (Tesla, Ford, Volvo, Mercedes, and Volkswagen) are advancing twice as fast as their peers, proving that cleaner, more ethical EV supply chains are not just possible but already underway.

For Tesla detractors who claim EVs aren’t truly green or that the company cuts corners, this recognition from sustainability-focused NGOs delivers a powerful rebuttal.

Tesla’s vertical integration, direct supplier contracts, low-carbon material agreements (like its North American aluminum deal with emissions under 2 kg CO₂e per kg of aluminum), and raw materials reporting continue to set the industry standard.

As the world races toward electrification, Tesla isn’t just building cars; it’s building a more responsible future.