News

Tesla FSD Beta 10.69.2.2 extending to 160k owners in US and Canada: Elon Musk

It appears that after several iterations and adjustments, FSD Beta 10.69 is ready to roll out to the wider FSD Beta program. Elon Musk mentioned the update on Twitter, stating that v10.69.2.2 should extend to 160,000 owners in the United States and Canada.

As with his other FSD Beta announcements, Musk posted his comments on Twitter. "FSD Beta 10.69.2.1 looks good, extending to 160k owners in US & Canada," Musk wrote, before correcting himself and clarifying that he meant FSD Beta 10.69.2.2, not v10.69.2.1.

While Elon Musk has a known tendency to be extremely optimistic in his FSD Beta-related statements, his comments about v10.69.2.2 do match observations from some of the program's longtime members. Veteran FSD Beta tester @WholeMarsBlog, who does not shy away from criticizing the system when it performs poorly, noted that his takeovers with v10.69.2.2 have been minimal. Fellow FSD Beta tester @GailAlfarATX reported similar observations.

Tesla definitely seems to be pushing to release FSD to its fleet. Recent comments from Martin Viecha, Tesla's Senior Director of Investor Relations, at an invite-only Goldman Sachs tech conference hinted that the electric vehicle maker is on track to release "supervised" FSD around the end of the year. That lines up with Elon Musk's own estimate for FSD's wide release.

It should be noted, of course, that even if Tesla manages to release "supervised" FSD to consumers by the end of the year, that version of the advanced driver-assist system would still require drivers to pay attention to the road and follow proper driving practices. With a feature-complete "supervised" FSD, however, Teslas would be able to navigate on their own, whether on the highway or on inner-city streets. That, ultimately, is a feature that will be extremely hard to beat.

Following are the release notes of FSD Beta v10.69.2.2, as retrieved by NotaTeslaApp:

– Added a new "deep lane guidance" module to the Vector Lanes neural network which fuses features extracted from the video streams with coarse map data, i.e. lane counts and lane connectivities. This architecture achieves a 44% lower error rate on lane topology compared to the previous model, enabling smoother control before lanes and their connectivities become visually apparent. This provides a way to make every Autopilot drive as good as someone driving their own commute, yet in a sufficiently general way that adapts for road changes.

– Improved overall driving smoothness, without sacrificing latency, through better modeling of system and actuation latency in trajectory planning. Trajectory planner now independently accounts for latency from steering commands to actual steering actuation, as well as acceleration and brake commands to actuation. This results in a trajectory that is a more accurate model of how the vehicle would drive. This allows better downstream controller tracking and smoothness while also allowing a more accurate response during harsh maneuvers.

– Improved unprotected left turns with more appropriate speed profile when approaching and exiting median crossover regions, in the presence of high speed cross traffic (“Chuck Cook style” unprotected left turns). This was done by allowing optimisable initial jerk, to mimic the harsh pedal press by a human, when required to go in front of high speed objects. Also improved lateral profile approaching such safety regions to allow for better pose that aligns well for exiting the region. Finally, improved interaction with objects that are entering or waiting inside the median crossover region with better modeling of their future intent.

– Added control for arbitrary low-speed moving volumes from Occupancy Network. This also enables finer control for more precise object shapes that cannot be easily represented by a cuboid primitive. This required predicting velocity at every 3D voxel. We may now control for slow-moving UFOs.

– Upgraded Occupancy Network to use video instead of images from single time step. This temporal context allows the network to be robust to temporary occlusions and enables prediction of occupancy flow. Also, improved ground truth with semantics-driven outlier rejection, hard example mining, and increasing the dataset size by 2.4x.

– Upgraded to a new two-stage architecture to produce object kinematics (e.g. velocity, acceleration, yaw rate) where network compute is allocated O(objects) instead of O(space). This improved velocity estimates for far away crossing vehicles by 20%, while using one tenth of the compute.

– Increased smoothness for protected right turns by improving the association of traffic lights with slip lanes vs yield signs with slip lanes. This reduces false slowdowns when there are no relevant objects present and also improves yielding position when they are present.

– Reduced false slowdowns near crosswalks. This was done with improved understanding of pedestrian and bicyclist intent based on their motion.

– Improved geometry error of ego-relevant lanes by 34% and crossing lanes by 21% with a full Vector Lanes neural network update. Information bottlenecks in the network architecture were eliminated by increasing the size of the per-camera feature extractors, video modules, internals of the autoregressive decoder, and by adding a hard attention mechanism which greatly improved the fine position of lanes.

– Made speed profile more comfortable when creeping for visibility, to allow for smoother stops when protecting for potentially occluded objects.

– Improved recall of animals by 34% by doubling the size of the auto-labeled training set.

– Enabled creeping for visibility at any intersection where objects might cross ego’s path, regardless of presence of traffic controls.

– Improved accuracy of stopping position in critical scenarios with crossing objects, by allowing dynamic resolution in trajectory optimization to focus more on areas where finer control is essential.

– Increased recall of forking lanes by 36% by having topological tokens participate in the attention operations of the autoregressive decoder and by increasing the loss applied to fork tokens during training.

– Improved velocity error for pedestrians and bicyclists by 17%, especially when ego is making a turn, by improving the onboard trajectory estimation used as input to the neural network.

– Improved recall of object detection, eliminating 26% of missing detections for far away crossing vehicles by tuning the loss function used during training and improving label quality.

– Improved object future path prediction in scenarios with high yaw rate by incorporating yaw rate and lateral motion into the likelihood estimation. This helps with objects turning into or away from ego’s lane, especially in intersections or cut-in scenarios.

– Improved speed when entering highway by better handling of upcoming map speed changes, which increases the confidence of merging onto the highway.

– Reduced latency when starting from a stop by accounting for lead vehicle jerk.

– Enabled faster identification of red light runners by evaluating their current kinematic state against their expected braking profile.

Press the “Video Record” button on the top bar UI to share your feedback. When pressed, your vehicle’s external cameras will share a short VIN-associated Autopilot Snapshot with the Tesla engineering team to help make improvements to FSD. You will not be able to view the clip.
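Tesla does not publish how it detects red light runners; the release note only says it evaluates "their current kinematic state against their expected braking profile." The Python sketch below is a hypothetical illustration of that idea under simple constant-deceleration kinematics. It assumes we already know a crossing vehicle's speed, its longitudinal acceleration, and its distance to its own stop line; the names `CrossTrafficState` and `is_likely_red_light_runner`, and the 6.0 m/s² and 0.5 m/s² thresholds, are illustrative assumptions, not Tesla values.

```python
from dataclasses import dataclass


@dataclass
class CrossTrafficState:
    """Hypothetical kinematic state of a vehicle approaching its own red light."""
    speed_mps: float            # current speed, m/s
    accel_mps2: float           # longitudinal acceleration, m/s^2 (negative = braking)
    dist_to_stop_line_m: float  # distance remaining to its stop line, m


def required_decel(state: CrossTrafficState) -> float:
    """Constant deceleration needed to stop exactly at the line: v^2 / (2 * d)."""
    if state.dist_to_stop_line_m <= 0.0:
        return float("inf")  # already at or past the line: stopping is impossible
    return state.speed_mps ** 2 / (2.0 * state.dist_to_stop_line_m)


def is_likely_red_light_runner(
    state: CrossTrafficState,
    max_plausible_decel: float = 6.0,  # assumed hard-braking limit, m/s^2
    braking_margin: float = 0.5,       # assumed tolerance on observed braking, m/s^2
) -> bool:
    """Flag a vehicle that cannot, or is not trying to, stop before its line."""
    if state.speed_mps <= 0.0:
        return False  # a stationary vehicle is not running the light
    needed = required_decel(state)
    if needed > max_plausible_decel:
        return True  # physically unable to stop in time
    observed_decel = -state.accel_mps2  # positive when the vehicle is braking
    # Braking noticeably weaker than the profile requires suggests it will not stop.
    return observed_decel + braking_margin < needed


# A car at 15 m/s, 40 m from its line, needs about 2.8 m/s^2 of braking.
print(is_likely_red_light_runner(CrossTrafficState(15.0, -3.0, 40.0)))  # braking enough
print(is_likely_red_light_runner(CrossTrafficState(15.0, 0.0, 40.0)))   # not braking
```

Comparing observed braking against the required profile lets a check like this flag a vehicle early, before it physically crosses the line. A real system would also have to account for reaction time, road grade, and noisy velocity estimates, none of which this sketch models.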

Don’t hesitate to contact us with news tips. Just send a message to simon@teslarati.com to give us a heads up.

News

Tesla makes latest move to remove Model S and Model X from its lineup


Tesla has made another move signaling that the Model S and Model X are leaving its lineup, following the company's confirmation earlier this quarter that the two flagship vehicles would no longer be produced.

Tesla has already begun phasing out the Model S and Model X in several ways; it recently indicated that it had sold out of a paint color for the two vehicles.

Now, the company is taking further steps that show the two vehicles are being phased out slowly but surely.

Tesla’s latest decisive step toward phasing out its flagship sedan and SUV was quietly removing the Model S and Model X from its U.S. referral program earlier this week.

The change eliminates the $1,000 referral discount previously available to new buyers of these vehicles. Existing Tesla owners purchasing a new Model S or Model X will now receive a loyalty discount of only $500, half the previous $1,000.

The updates extend beyond the two flagship vehicles. New Cybertruck buyers using a referral code on Premium AWD or Cyberbeast configurations will no longer get $1,000 off. Instead, both referrer and buyer receive three months of Full Self-Driving (Supervised).

The loyalty discount for Cybertruck purchases, excluding the new Dual Motor AWD trim level, has also been cut to $500.

NEWS: Tesla has removed the Model S and Model X from the referral program.

New owners also no longer get a $1,000 referral discount on a new Cybertruck Premium AWD or Cyberbeast. Instead, you now get 3 months of FSD (Supervised).

Additionally, Tesla has reduced the loyalty… pic.twitter.com/IgIY8Hi2WJ

— Sawyer Merritt (@SawyerMerritt) March 6, 2026

These adjustments apply only in the United States and reflect Tesla's broader strategy of optimizing margins while boosting adoption of its autonomous driving software.

The timing is no coincidence. Tesla confirmed earlier this year that Model S and Model X production will end in the second quarter of 2026, roughly June, as the company reallocates factory capacity toward its Optimus humanoid robot and next-generation vehicles.

With annual sales of the low-volume flagships already declining (just 53,900 units in 2025), incentives are no longer needed to drive demand. Production is winding down, and Tesla expects strong remaining interest without subsidies.

Industry observers see this as the clearest sign yet of an "end-of-life" phase for the vehicles that once defined Tesla's luxury segment. Community reactions on X range from nostalgia ("Rest in power S and X") to frustration among long-time owners who feel perks are eroding just as the models approach discontinuation.

Some buyers are rushing orders to lock in final discounts before they vanish entirely.


For Tesla, the move prioritizes efficiency: fewer discounts on outgoing models, a stronger push for FSD subscriptions, and a focus on high-margin Cybertruck trims amid surging orders.

Loyalists still have a narrow window to purchase a refreshed Plaid or Long Range model with remaining incentives, but the message is clear: Tesla’s lineup is evolving, and the era of the original flagships is drawing to a close.

News

Tesla Australia confirms six-seat Model Y L launch in 2026


Tesla has confirmed that the larger six-seat Model Y L will launch in Australia and New Zealand in 2026.

The confirmation was shared by techAU through a media release from Tesla Australia and New Zealand.

The Model Y L expands the Model Y lineup by offering additional seating capacity for customers seeking a larger electric SUV. Compared with the standard five-seat Model Y, the Model Y L features a longer body and extended wheelbase to accommodate an additional row of seating.

The Model Y L is already being produced at Tesla’s Gigafactory Shanghai for the Chinese market, though the vehicle will be manufactured in right-hand-drive configuration for markets such as Australia and New Zealand.

Tesla Australia and New Zealand confirmed the vehicle will feature seating for six passengers.

“As shown in pictures from its launch in China, Model Y L will have a new seating configuration providing room for 6 occupants,” Tesla Australia and New Zealand said in comments shared with techAU.

Instead of a traditional seven-seat arrangement, the Model Y L uses a 2-2-2 layout. The middle row features two individual seats, allowing easier access to the third row while providing additional space for passengers.

Tesla Australia and New Zealand also confirmed that the Model Y L will be covered by the company’s updated warranty structure beginning in 2026.

“As with all new Tesla Vehicles from the start of 2026, the Model Y L will come with a 5-year unlimited km vehicle warranty and 8 years for the battery,” the company said.

The updated policy extends Tesla's vehicle warranty from the previous four-year or 80,000-kilometer coverage to five years with unlimited kilometers.

Battery and drive unit warranties remain unchanged and vary by variant. Rear-wheel-drive models carry an eight-year or 160,000-kilometer warranty, while Long Range and Performance variants are covered for eight years or 192,000 kilometers.

Tesla has not yet announced official pricing or range figures for the Model Y L in Australia.

News

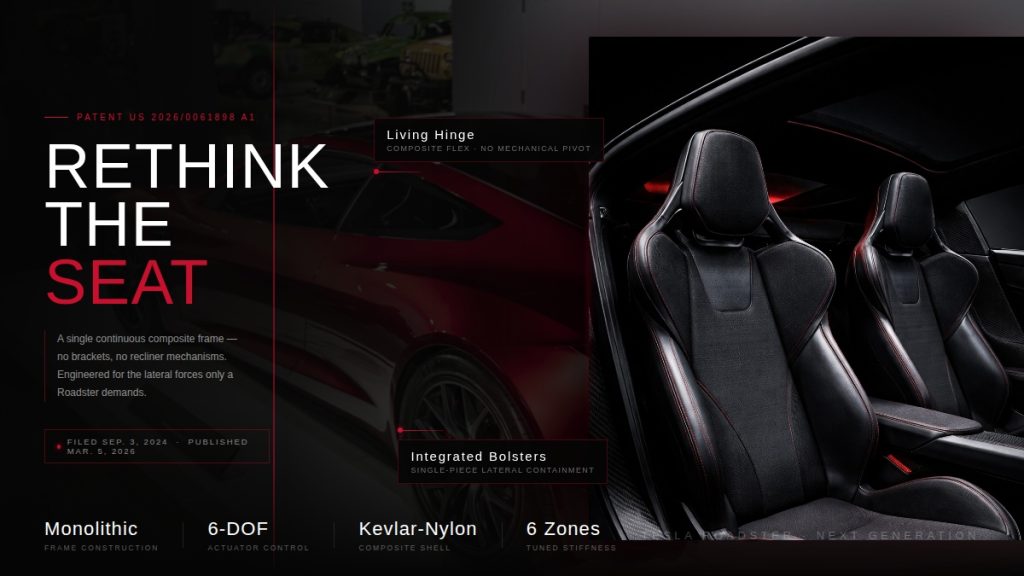

Tesla Roadster patent hints at radical seat redesign ahead of reveal

A newly published Tesla patent could offer one of the clearest signals yet that the long-awaited next-generation Roadster is nearly ready for its public debut.

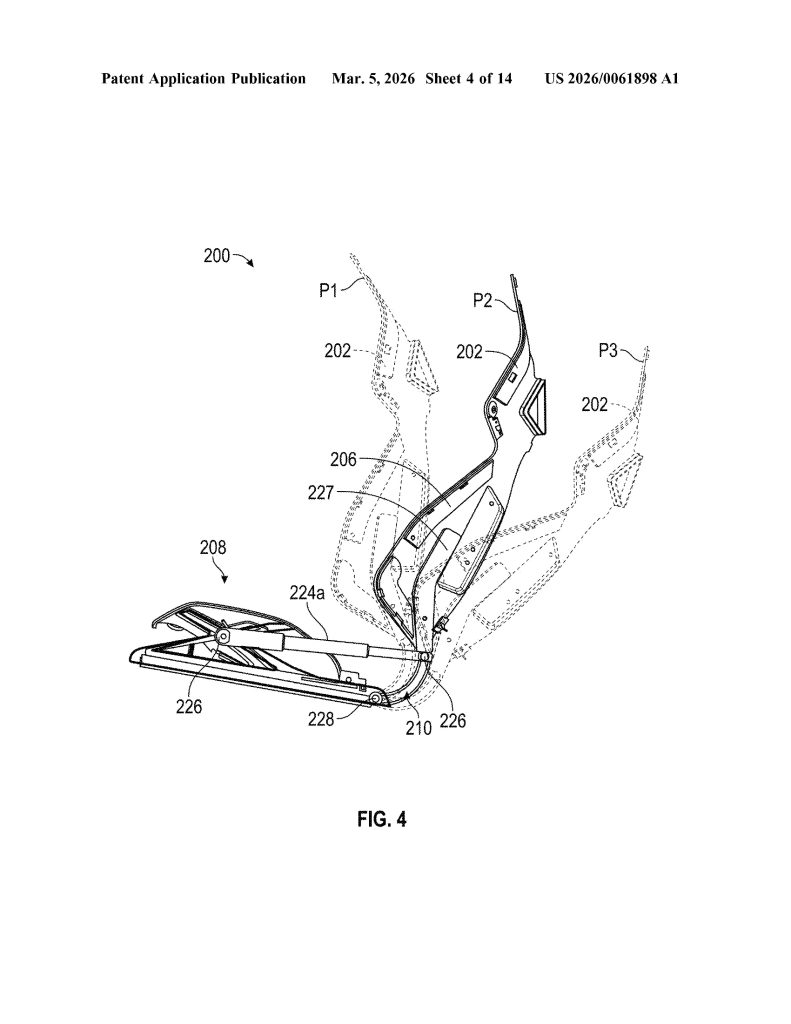

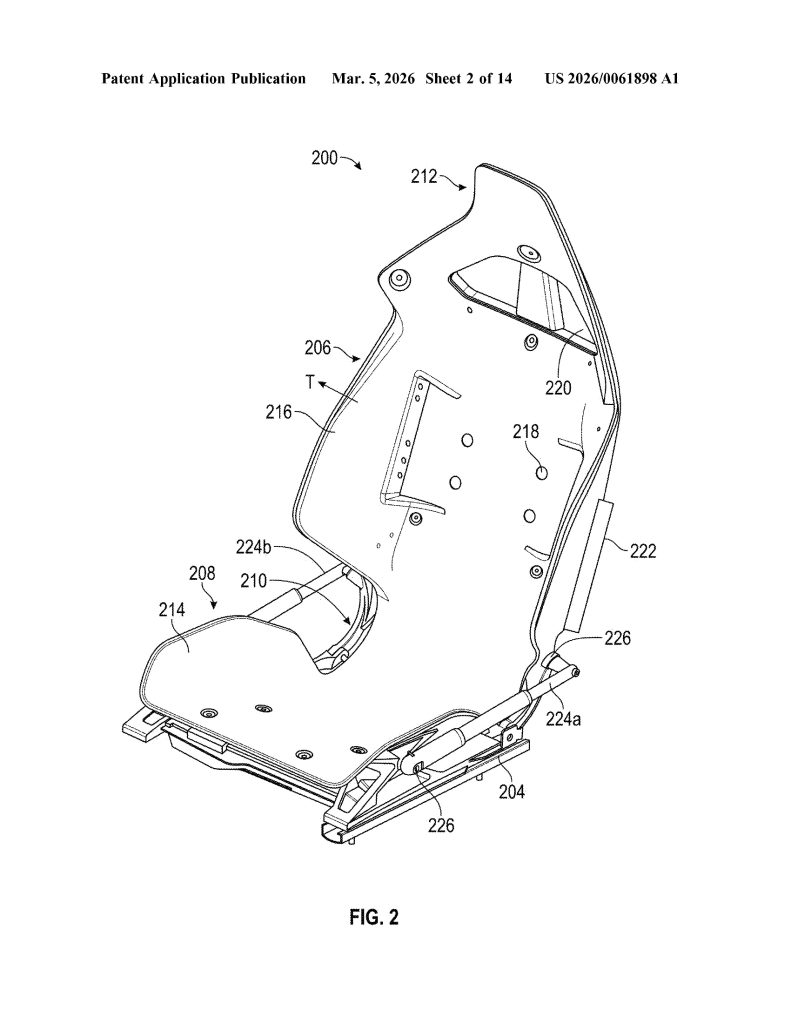

Patent No. US 20260061898 A1, published on March 5, 2026, describes a “vehicle seat system” built around a single continuous composite frame – a dramatic departure from the dozens of metal brackets, recliner mechanisms, and rivets that make up a traditional car seat. Tesla is calling it a monolithic structure, with the seat portion, backrest, headrest, and bolsters all thermoformed as one unified piece.

The approach mirrors Tesla’s broader manufacturing philosophy. The same company that pioneered massive aluminum castings to eliminate hundreds of body components is now applying that logic to the cabin. Fewer parts means fewer potential failure points, less weight, and a cleaner assembly process overall.


The timing of the filing is difficult to ignore. Elon Musk has publicly targeted April 1, 2026 as the date for an "unforgettable" Roadster design reveal, and two new Roadster trademarks were filed just last month. A patent describing a seat architecture suited to a hypercar, one that Tesla has promised will hit 60 mph in under two seconds, fits neatly into that timeline.

The Roadster, originally unveiled in 2017, has been one of Tesla’s most anticipated yet most delayed products. With a target price around $200,000 and engineering ambitions to match, it is being positioned as the ultimate showcase for what Tesla’s technology can do.

The patent was first flagged by @seti_park on X.

Tesla Roadster Monolithic Seat: Feature Highlights via US Patent 20260061898 A1

- Single Continuous Frame (Monolithic Construction). The core invention is a seat assembly built from one continuous frame that integrates the seat portion, backrest portion, and hinge into a single component — eliminating the need for separate structural parts and mechanical joints typical in conventional seats.

- Integrated Flexible Hinge. Rather than a traditional mechanical recliner, the hinge is built directly into the continuous frame and is designed to flex, allowing the backrest to move relative to the seat portion. The hinge can be implemented as a fiber composite leaf spring or an assembly of rigid linkages.

- Thermoformed Anisotropic Composite Material. The continuous frame is manufactured via thermoforming from anisotropic composite materials, including fiberglass-nylon, fiberglass-polymer, nylon carbon composite, Kevlar-nylon, or Kevlar-polymer composites, enabling a molded-to-shape monolithic structure.

- Regionally Tuned Stiffness Zones. The frame is engineered with up to six distinct stiffness regions (R1–R6) across the seat, backrest, hinge, headrest, and bolsters. Each zone can have a different stiffness, allowing precise ergonomic and structural tuning without adding separate components.

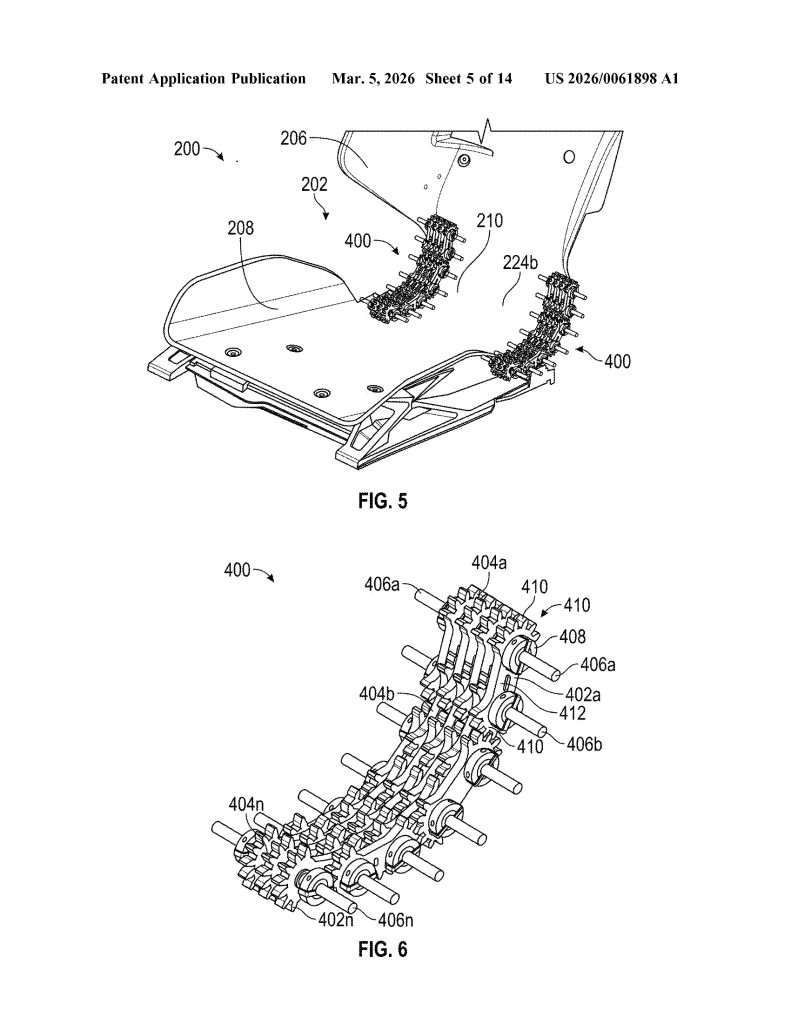

- Linkage Assembly Hinge Mechanism. The hinge incorporates one or more linkage assemblies consisting of multiple interlocking links with gears, connected by rods. When driven by motors or actuators, these linkages act as a flexible member to control backrest movement along a precise, ergonomically optimized trajectory.

- Multi-Actuator Six-Degree-of-Freedom Positioning System. The seat uses four distinct actuator pairs, all controlled by a central controller. These actuators work in coordinated combinations to achieve fore/aft, height, cushion tilt, and backrest rotation adjustments simultaneously.

- ECU-Based Controller Architecture. An Electronic Control Unit (ECU) and programmable controller manage all seat actuators, receive user input via a user interface (touchscreen, buttons, or switches), and incorporate sensor feedback to confirm and maintain desired seat positions, essentially making this a software-driven seat system.

- Airbag-Integrated Bolster Deployment System. The backrest bolsters (216) are geometrically shaped and sized to guide airbag deployment along a specific, pre-configured trajectory. Left and right bolsters can have different shapes so that each guides its respective airbag along a distinct trajectory, improving occupant protection.

- Ventilation Holes Formed into the Backrest. The continuous frame includes one or more ventilation holes formed directly into the backrest portion, configured to either receive airflow into or deliver airflow from the seat frame — enabling passive or active thermal comfort without requiring separate ventilation components.

- Soft Trim Recess for Tool-Free Integration. The headrest and backrest portions together define a molded recess, specifically designed to receive and secure a soft trim component (foam, fabric, or cushioning) directly into the continuous frame, eliminating the need for separate attachment hardware and simplifying final assembly.