Firmware

Tesla patent hints at Hardware 3’s neural network accelerator for faster processing

During the recent fourth-quarter earnings call, Elon Musk all but stated that Tesla holds a notable lead in the self-driving field. Responding to Loup Ventures analyst Gene Munster, who asked about Morgan Stanley’s estimated $175 billion valuation for Waymo and its self-driving tech, Musk noted that Tesla has an advantage over other companies developing autonomous technologies, particularly when it comes to real-world miles.

“If you add everyone else up combined, they’re probably 5% — I’m being generous — of the miles that Tesla has. And this difference is increasing. A year from now, we’ll probably go — certainly from 18 months from now, we’ll probably have 1 million vehicles on the road with — that are — and every time the customers drive the car, they’re training the systems to be better. I’m just not sure how anyone competes with that,” Musk said.

To carry its self-driving systems towards full autonomy, Tesla has been developing its own custom hardware. Designed by Apple alumnus Pete Bannon, Tesla’s Hardware 3 upgrade is expected to provide the company’s vehicles with a 1000% improvement in processing capability compared to current hardware. Tesla has released only a few hints about HW3’s capabilities over the past months. That said, a patent application from the electric car maker was recently published by the US Patent Office, describing an “Accelerated Mathematical Engine” that would most likely be used in Tesla’s Hardware 3.

Tesla notes that there is a need to develop “high-computational-throughput systems and methods that can perform matrix mathematical operations quickly and efficiently,” particularly in computationally demanding applications such as convolutional neural networks (CNNs), which are used in image recognition and processing. CNNs are a deep learning technique used for descriptive and generative tasks, most often in machine vision work such as image and video recognition. These processes, which are invaluable for the development and operation of driver-assist systems like Autopilot, require a lot of computing power.

Considering the large amount of data involved in applications such as CNNs, the computational resources and the rate of calculations become limited by the capabilities of existing hardware. This becomes particularly evident in computing devices and processors that execute matrix operations, which encounter bottlenecks during heavy operations, resulting in wasted computing time. To address these limitations, Tesla’s patent application hints at the use of a custom matrix processor architecture.

“In operation according to certain embodiments, system 200 accelerates convolution operations by reducing redundant operations within the systems and implementing hardware specific logic to perform certain mathematical operations across a large set of data and weights. This acceleration is a direct result of methods (and corresponding hardware components) that retrieve and input image data and weights to the matrix processor 240 as well as timing mathematical operations within the matrix processor 240 on a large scale.”

By adopting its custom matrix processor architecture, Tesla expects its hardware to be capable of supporting larger amounts of data. In terms of formatting alone, the electric car maker notes that its design would allow the system to reformat data on the fly, making it immediately available for execution. Tesla also notes that its architecture would result in improvements in processing speed and efficiency.

“Unlike common software implementations of formatting functions that are performed by a CPU or GPU to convert a convolution operation into a matrix-multiply by rearranging data to an alternate format that is suitable for a fast matrix multiplication, various hardware implementations of the present disclosure re-format data on the fly and make it available for execution, e.g., 96 pieces of data every cycle, in effect, allowing a very large number of elements of a matrix to be processed in parallel, thus efficiently mapping data to a matrix operation. In embodiments, for 2N fetched input data 2N² compute data may be obtained in a single clock cycle. This architecture results in a meaningful improvement in processing speeds by effectively reducing the number of read or fetch operations employed in a typical processor architecture as well as providing a paralleled, efficient and synchronized process in performing a large number of mathematical operations across a plurality of data inputs.”
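For readers unfamiliar with the software approach the patent contrasts itself with, the rearrangement of a convolution into a matrix multiplication can be sketched in a few lines of Python. This is only an illustration of the general technique (often called "im2col"), not Tesla's hardware design; the function names `im2col`, `conv2d_direct`, and `conv2d_as_matmul` are hypothetical and chosen for this example. As is conventional in CNNs, the kernel is applied without flipping.

```python
def im2col(image, k):
    """Copy every k x k patch of a 2-D image into one row of a matrix."""
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            rows.append([image[i + di][j + dj]
                         for di in range(k) for dj in range(k)])
    return rows


def conv2d_direct(image, kernel):
    """Reference sliding-window convolution (no padding, stride 1)."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(k) for dj in range(k))
             for j in range(w - k + 1)]
            for i in range(h - k + 1)]


def conv2d_as_matmul(image, kernels):
    """Apply several k x k filters at once as (patches) x (weights)."""
    k = len(kernels[0])
    patches = im2col(image, k)                      # num_patches x (k*k)
    weights = [[kern[di][dj] for di in range(k) for dj in range(k)]
               for kern in kernels]                 # num_filters x (k*k)
    out_w = len(image[0]) - k + 1
    outputs = []
    for w_row in weights:
        # One dot product per patch: a row of the matrix product.
        flat = [sum(p * q for p, q in zip(patch, w_row)) for patch in patches]
        # Fold the flat result back into a 2-D output map.
        outputs.append([flat[r:r + out_w] for r in range(0, len(flat), out_w)])
    return outputs


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
diagonal_diff = [[1, 0], [0, -1]]
box_sum = [[1, 1], [1, 1]]
maps = conv2d_as_matmul(image, [diagonal_diff, box_sum])
```

The patch matrix is built once and reused for every filter, which is what makes the matrix form attractive. The cost is the extra copying in `im2col`; that reformatting step is the overhead the patent says its hardware performs on the fly.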

It should be noted that Tesla’s patent application for its Accelerated Mathematical Engine covers just one aspect of the company’s upcoming hardware upgrade to its fleet of electric cars. The full capabilities of Tesla’s Hardware 3, at least for now, remain to be seen. While Tesla did not provide concrete updates on the development and release of Hardware 3 during the fourth-quarter earnings call, Musk stated that some full self-driving features would likely be ready towards the end of 2019.

Back in October, Musk noted that Hardware 3 would be installed in all new production cars in around 6 months, which translates to a rollout date of around April 2019. Musk stated that transitioning to the new hardware will not involve any changes to vehicle production, as the upgrade is simply a replacement of the Autopilot computer installed in all of the company’s electric cars today. In a later tweet, Musk mentioned that Tesla owners who bought Full Self-Driving would receive the Hardware 3 upgrade free of charge. Owners who have not ordered Full Self-Driving, on the other hand, would likely pay around $5,000 for the FSD suite and the new hardware.

Tesla’s patent application for its Accelerated Mathematical Engine is publicly available.


Tesla mobile app shows signs of upcoming FSD subscriptions

It appears that Tesla may be preparing to roll out some subscription-based services soon. Based on the observations of a Wales-based Model 3 owner who performed some reverse-engineering on the Tesla mobile app, it seems that the electric car maker has added a new “Subscribe” option beside the “Buy” option within the “Upgrades” tab, at least behind the scenes.

A screenshot of the new option was posted in the r/TeslaMotors subreddit, and while the Tesla owner in question, u/Callump01, admitted that the screenshot looks like something that could be easily fabricated, he did submit proof of his reverse-engineering to the community’s moderators. The moderators of the r/TeslaMotors subreddit confirmed the legitimacy of the Model 3 owner’s work, further suggesting that subscription options may indeed be coming to Tesla owners soon.


It has long been speculated that Tesla’s Full Self-Driving suite would be offered as a subscription option, similar to the company’s Premium Connectivity feature. Back in April, noted Tesla hacker @greentheonly stated that the company’s vehicles already contained source code for a pay-as-you-go subscription model. The Tesla hacker suggested then that Tesla would likely release such a feature by the end of the year, something that Elon Musk also suggested in the first-quarter earnings call. “I think we will offer Full Self-Driving as a subscription service, but it will be probably towards the end of this year,” Musk stated.

While the signs of an upcoming FSD subscription option are becoming more prominent as the year approaches its final quarter, details about the feature are still slim. Pricing for FSD subscriptions, for example, has not been teased by Elon Musk yet, though he has stated on Twitter that purchasing the suite upfront would be the better value in the long term. References to the feature in the vehicles’ source code, and now in the Tesla mobile app, also include no pricing details.

The idea of FSD subscriptions could prove quite popular among electric car owners, especially since it would allow budget-conscious customers to make the most out of the company’s driver-assist and self-driving systems without committing to the features’ full price. The current price of the Full Self-Driving suite is no joke, after all, being listed at $8,000 on top of a vehicle’s cost. By offering subscriptions to features like Navigate on Autopilot with automatic lane changes, owners could gain access to advanced functions only as they are needed.

Elon Musk, for his part, has explained that he still believes purchasing the Full Self-Driving suite outright provides the most value to customers, as it is an investment that would pay off in the future. “I should say, it will still make sense to buy FSD as an option as in our view, buying FSD is an investment in the future. And we are confident that it is an investment that will pay off to the consumer – to the benefit of the consumer,” Musk said.


Tesla rolls out speed limit sign recognition and green traffic light alert in new update

Tesla started rolling out update 2020.36 this weekend, introducing a couple of notable new features for its vehicles. While only a handful of vehicles have reportedly received the update so far, 2020.36 makes it evident that the electric car maker has made some strides in its efforts to refine its driver-assist systems for inner-city driving.

Tesla is currently hard at work developing key features for its Full Self-Driving suite, which should allow vehicles to navigate through inner-city streets without driver input. Tesla’s FSD suite is still a work in progress, though the company has released initial iterations of key features such as Traffic Light and Stop Sign Control, which was introduced last April. Similar to the first release of Navigate on Autopilot, however, the capabilities of Traffic Light and Stop Sign Control were pretty basic during their initial rollout.

2020.36 Showing Speed Limit Signs in Visualization from r/teslamotors

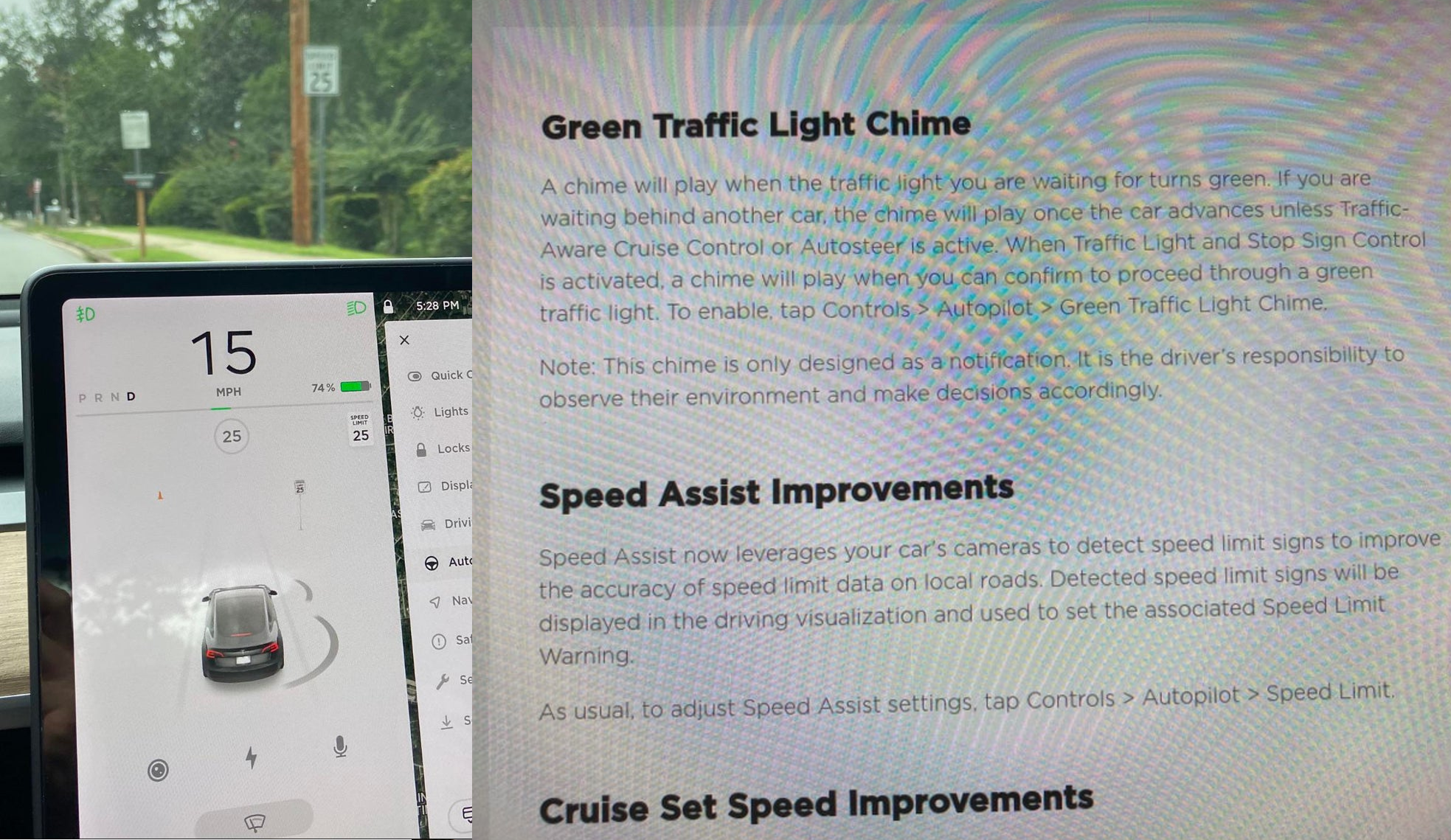

With the release of update 2020.36, Tesla has rolled out some improvements that should allow its vehicles to handle traffic lights better. What’s more, the update also includes a particularly useful feature that enables better recognition of speed limit signs, which should make Autopilot’s speed adjustments more accurate. The release notes for these two new features follow.

Green Traffic Light Chime

“A chime will play when the traffic light you are waiting for turns green. If you are waiting behind another car, the chime will play once the car advances unless Traffic-Aware Cruise Control or Autosteer is active. When Traffic Light and Stop Sign Control is activated, a chime will play when you can confirm to proceed through a green traffic light. To enable, tap Controls > Autopilot > Green Traffic Light Chime.

“Note: This chime is only designed as a notification. It is the driver’s responsibility to observe their environment and make decisions accordingly.”

Speed Assist Improvements

“Speed Assist now leverages your car’s cameras to detect speed limit signs to improve the accuracy of speed limit data on local roads. Detected speed limit signs will be displayed in the driving visualization and used to set the associated Speed Limit Warning.

“As usual, to adjust Speed Assist settings, tap Controls > Autopilot > Speed Limit.”

Footage of the new green light chime in action via @NASA8500 on Twitter ✈️ from r/teslamotors

Amidst the rollout of 2020.36’s new features, speculation abounded among Tesla community members that this update may include the first pieces of the company’s highly-anticipated Autopilot rewrite. As exciting as the idea is, however, Tesla CEO Elon Musk has stated that this was not the case. When a Tesla owner asked if the Autopilot rewrite is in “shadow mode” in 2020.36, Musk responded, “Not yet.”


Tesla rolls out Sirius XM free three-month subscription

Tesla has rolled out a free three-month trial subscription to Sirius XM, in what appears to be the company’s latest push to make its vehicles’ entertainment systems more feature-rich. The new Sirius XM offer will likely be appreciated by owners of the company’s vehicles, especially considering that the service is among the most popular satellite radio services in the country today.

Tesla announced its new offer in an email sent on Monday. An image that accompanied the email also teased Tesla’s updated and optimized Sirius XM UI for its vehicles. The text of the email follows.

“Beginning now, enjoy a free, All Access three-month trial subscription to Sirius XM, plus a completely new look and improved functionality. Our latest over-the-air software update includes significant improvements to overall Sirius XM navigation, organization, and search features, including access to more than 150 satellite channels.

“To access simply tap the Sirius XM app from the ‘Music’ section of your in-car center touchscreen—or enjoy your subscription online, on your phone, or at home on connected devices. If you can’t hear SiriusXM channels in your car, select the Sirius XM ‘Subscription’ tab for instruction on how to refresh your audio.”

Tesla has actually been working on Sirius XM improvements for some time now. Back in June, for example, Tesla rolled out its 2020.24.6.4 update, which included some optimizations to the Sirius XM interface of the Model S and Model X. As pointed out by noted Tesla owner and hacker @greentheonly, the source code of this update revealed that the Sirius XM optimizations were also intended to be released in other areas such as Canada.

Interestingly enough, Sirius XM is a popular feature that has been exclusive to the Model S and X. Tesla’s most popular vehicle to date, the Model 3, has yet to receive the feature. One can only hope that Sirius XM integration will eventually come to the Model 3. Such an update would most definitely be appreciated by the EV community, especially since some Model 3 owners have resorted to using their smartphones or third-party solutions to gain access to the satellite radio service.

The fact that Tesla is pushing Sirius XM rather assertively to its customers suggests that the company may be poised to roll out more entertainment-based apps in the coming months. Apps such as Sirius XM, Spotify, Netflix, and YouTube may seem quite minor when compared to key functions like Autopilot, after all, but they do help round out the ownership experience of Tesla owners. In a way, Sirius XM does make sense for Tesla’s next generation of vehicles, especially the Cybertruck and the Semi, both of which would likely be driven in areas that lack LTE connectivity.
