This is a preview from our weekly newsletter. Each week I go ‘Beyond the News’ and handcraft a special edition that includes my thoughts on the biggest stories, why they matter, and how they could impact the future.

Earlier this week, NTSB Chief Jennifer Homendy made some disparaging comments regarding Tesla’s use of “Full Self-Driving” to describe its semi-autonomous driving suite. The remarks from Homendy show that Tesla may not have a fair chance when it ultimately comes to proving the effectiveness of its FSD program, especially considering agency officials, who should remain impartial, are already making misdirected comments regarding the name of the suite.

In an interview with the Wall Street Journal, Homendy commented on the company’s use of the phrase “Full Self-Driving.” While Tesla’s FSD suite is admittedly not capable of Level 5 autonomy, the idea for the program is to eventually roll out a fully autonomous driving program for those who choose to invest in the company’s software. However, instead of focusing on the program’s effectiveness and commending Tesla, arguably the leader in self-driving developments, Homendy concentrates on the terminology.

Homendy said Tesla’s use of the term “Full Self-Driving” was “misleading and irresponsible,” despite the company confirming with each driver who buys the capability that the program is not yet fully autonomous. Drivers are explicitly told to remain vigilant and keep their hands on the wheel at all times. This is a requirement for using Autopilot or FSD, and failure to comply can result in being locked in “Autopilot jail” for the duration of your trip. Nobody wants that.

However, despite the way some media outlets and others describe Tesla’s FSD program, the company’s semi-autonomous driving functionalities are extraordinarily safe and among the most complex on the market. Tesla is one of the few companies attempting to solve the riddle that is self-driving, and the only one, to my knowledge, that has chosen not to use LiDAR in its efforts. Additionally, Tesla ditched radar just a few months ago in the Model Y and Model 3, meaning cameras are the only sensors the company plans to use to keep its cars moving. Several drivers have reported improvements since radar was removed.

The point regarding FSD and Autopilot is simple: the terminology is not the focus; the facts are. The truth is, Tesla Autopilot recorded one of its safest quarters, according to the most recently released statistics, which showed an accident occurring on Autopilot just once every 4.19 million miles. The national average is one accident every 484,000 miles, the NHTSA says.
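To put those two figures side by side, here is a quick back-of-the-envelope sketch. It only divides the two numbers quoted above and assumes they are measured the same way; the variable and function names are my own, for illustration.

```python
# Miles driven per reported accident, as quoted in the text above.
AUTOPILOT_MILES_PER_ACCIDENT = 4_190_000
NATIONAL_MILES_PER_ACCIDENT = 484_000


def miles_ratio(miles_a: float, miles_b: float) -> float:
    """Return how many times farther group A travels per accident than group B."""
    return miles_a / miles_b


ratio = miles_ratio(AUTOPILOT_MILES_PER_ACCIDENT, NATIONAL_MILES_PER_ACCIDENT)
print(f"Autopilot miles per accident vs. national average: {ratio:.1f}x")
```

By these numbers, a car on Autopilot covers roughly 8.7 times as many miles per accident as the national average.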

That isn’t to say that things don’t go wrong. Accidents on Autopilot and FSD do occur, and the NHTSA is currently probing twelve incidents in which Autopilot was shown to be active during an accident. While the conditions and situations vary in each accident, several have already been proven to be the result of driver negligence, including a few that had drivers operating a vehicle without a license or under the influence of alcohol. Now, remind me: When a BMW driver is drunk and crashes into someone, do we blame BMW? I’ll let that rhetorical question sink in.

Of course, Homendy has a Constitutional right to say whatever is on her mind. It is perfectly reasonable to be skeptical of self-driving systems. I’ll admit, the first time I experienced one, I was not a fan, but it wasn’t because I didn’t trust it. It was because I was familiar with controlling a vehicle and not having it manage things for me. However, just like anything else, I adjusted and got used to the idea, eventually becoming accustomed to the new feelings and sensations of having my car assist me in navigating to my destination.

To me, it is simply unfortunate for an NTSB official to claim that Tesla “has clearly misled numerous people to misuse and abuse technology.” One, because it isn’t possible, two, because it would be a massive liability for the company, and three, because Tesla has never claimed that its cars can drive themselves, nor has it ever advised a driver to attempt a fully autonomous trek to a destination.

The numerous safety features and additions to the FSD suite have only solidified Tesla’s position as one of the safest car companies out there. With in-cabin cameras to test driver attentiveness and numerous other safety thresholds that drivers must respond to with the correct behaviors, Tesla’s FSD suite and its Autopilot program are among the safest around. It isn’t helpful for NTSB head Homendy to comment in this way, especially as it seems to be detrimental not only to Tesla’s attempts to achieve Level 5 autonomy but to the self-driving effort as a whole.

A big thanks to our long-time supporters and new subscribers! Thank you.

I use this newsletter to share my thoughts on what is going on in the Tesla world. If you want to talk to me directly, you can email me or reach me on Twitter. I don’t bite, so be sure to reach out!

-Joey

News

Tesla Full Self-Driving v14.2.2.5 might be the most confusing release ever

Tesla Full Self-Driving v14.2.2.5 hit my car back on Valentine’s Day, February 14, and since I’ve had it, it has become, in my opinion, the most confusing release I’ve ever had.

With each Full Self-Driving release, I am realistic. I know some things are going to get better, and I know some things will regress slightly. Usually, the improvements are relatively mild, as are the regressions. Yet this version has shown me extremes of both.

It has been about three weeks of driving on v14.2.2.5; I’ve used it for nearly every mile traveled since it hit my car. I’ve taken short trips of 10 minutes or less, I’ve taken medium trips of an hour or less, and I’ve taken longer trips that are over 100 miles per leg and are over two hours of driving time one way.

These are my thoughts on it thus far:

Speed Profiles Are a Mixed Bag

Speed Profiles are something Tesla seems to tinker with quite frequently, and each version tends to show a drastic difference in how each one behaves compared to the previous version.

I do a vast majority of my FSD travel using Standard and Hurry modes, although in bad weather, I will scale it back to Chill, and when it’s a congested city on a weekend or during rush hour, I’ll throw it into Mad Max so it takes what it needs.

Early on, Speed Profiles really felt great. This is one of those really subjective parts of the FSD where someone might think one mode travels too quickly, whereas another person might see the identical performance as too slow or just right.

I would like to see more consistency from release to release on them, but overall, things are pretty good. I have no real complaints, unlike with previous releases.

In a past release, Mad Max traveled under the speed limit quite frequently, and I only had that experience because Hurry was acting the same way. I’ve had no instances of that with v14.2.2.5.

Strange Turn Signal Behavior

This is the first Full Self-Driving version where I’ve had so many weird things happen with the turn signals.

Two things come to mind: Using a turn signal on a sharp turn, and ignoring the navigation while putting the wrong turn signal on. I’ve encountered both things on v14.2.2.5.

On my way to the Supercharger, I take a road that has one semi-sharp right-hand turn with a driveway entrance right at the beginning of the turn.

Only recently, with the introduction of v14.2.2.5, have I had FSD put on the right turn signal when going around this turn. It’s obviously a minor issue, but it still happens, and it’s not standard practice:

How can we get Full Self-Driving to stop these turn signals?

There’s no need to use one here; the straight path is a driveway, not a public road. The right turn signal here is unnecessary pic.twitter.com/7uLDHnqCfv

— TESLARATI (@Teslarati) February 28, 2026

When sharing this on X, I had Tesla fans (the ones who refuse to acknowledge that the company can make mistakes) tell me that it’s a “valid” behavior that would be taught to anyone who has been “professionally trained” to drive.

Apparently, if you complain about this turn signal, you are also claiming you know more than Tesla engineers…okay.

Nobody in their right mind has ever gone around a sharp turn when driving their car and put on a signal when continuing on the same road. You would put a left turn signal on to indicate you were turning into that driveway if that’s what your intention was.

Like I said, it’s a totally minor issue. However, it’s not really needed, nor is it normal. If I were in the car with someone who was taking a simple turn on a road they were traveling, and they signaled because the turn was sharp, I’d be scratching my head.

I’ve also had three separate instances of the car completely ignoring the navigation and putting on a signal that is opposite to what the routing says. Really quite strange.

Parking Performance is Still Underwhelming

Parking has been a complaint of mine with FSD for a long time, so much so that it is pretty rare that I allow the vehicle to park itself. More often than not, it is because I want to pick a spot that is relatively isolated.

However, in the times I allow it to pull into a spot, it still does some pretty head-scratching things.

Recently, it tried to back into a spot that was ~60% covered in plowed snow. The snow was piled about six feet high in a Target parking lot.

A few days later, it tried backing into a spot where someone failed the universal litmus test of returning their shopping cart. Both choices were baffling and required me to manually move the car to a different portion of the lot.

I used Autopark on both occasions, and it did a great job of getting into the spot. I notice that the parking performance when I manually choose the spot is much better than when the car does the entire parking process, meaning choosing the spot and parking in it.

It’s Doing Things (For Me) It’s Never Done Before

Two things that FSD has never done before, at least for me, are slow down in School Zones and avoid deer. The first is something I usually take over manually, and the second I surprisingly have not had to deal with yet.

I had my Tesla slow down at a school zone yesterday for the first time. It traveled at 20 MPH rather than the 15 MPH the sign suggested, matching the speed of other cars in the School Zone. This was impressive and the first time I experienced it.

I would like to see this more consistently, and I think School Zones should be one of those areas where, no matter what, FSD will only travel the speed limit.

Last night, FSD v14.2.2.5 recognized a deer in a roadside field and slowed down for it:

🚨 Cruising home on a rainy, foggy evening and my Tesla on Full Self-Driving begins to slow down suddenly

FSD just wanted Mr. Deer to make it home to his deer family ❤️ pic.twitter.com/cAeqVDgXo5

— TESLARATI (@Teslarati) March 4, 2026

Navigation Still SUCKS

Navigation will be a complaint until Tesla proves it can fix it. For now, it’s just terrible.

It still has not figured out how to leave my neighborhood. I give it the opportunity to prove me wrong each time I leave my house, and it just can’t do it.

It always tries to go out of the primary entrance/exit of the neighborhood when the route needs to take me left, even though that exit is a right turn only. I always leave a voice prompt for Tesla about it.

It still picks incredibly baffling routes for simple navigation. It’s the one thing I still really want Tesla to fix.

Investor's Corner

Tesla gets tip of the hat from major Wall Street firm on self-driving prowess

Tesla received a tip of the hat from major Wall Street firm Bank of America (BofA) on Wednesday, as the bank reinitiated coverage on Tesla shares with a bullish stance that comes with a ‘Buy’ rating and a $460 price target.

In a new note that marks a sharp reversal from its neutral position earlier in 2025, the bank declared Tesla’s Full Self-Driving (FSD) technology the “leading consumer autonomy solution.”

Analysts highlighted Tesla’s camera-only architecture, known as Tesla Vision, as a strategic masterstroke. While technically more challenging than the multi-sensor setups favored by rivals, the vision-based approach is dramatically cheaper to produce and maintain.

This cost edge, combined with Tesla’s rapidly expanding real-world data engine, positions the company to scale robotaxis far more profitably than competitors, BofA argues in the new note:

“Tesla is at the forefront of autonomous driving, supported by a camera-only approach that is technically harder but much cheaper than the multi-sensor systems widely used in the industry. This strategy should allow Tesla to scale more profitably compared to Robotaxi competitors, helped by a growing data engine from its existing fleet.”

The bank now attributes roughly 52% of Tesla’s total valuation to its Robotaxi ambitions. It also flagged meaningful upside from the Optimus humanoid robot program and the fast-growing energy storage business, suggesting the auto segment’s recent headwinds, including expired incentives, are being eclipsed by these higher-margin opportunities.

Tesla’s own data underscores exactly why Wall Street is waking up to FSD’s potential. According to Tesla’s official safety reporting page, the FSD Supervised fleet has now surpassed 8.4 billion cumulative miles driven.

Yearly mileage ballooned from just 6 million miles in 2021 to 80 million in 2022, 670 million in 2023, 2.25 billion in 2024, and a staggering 4.25 billion in 2025 alone. In the first 50 days of 2026, owners added another 1 billion miles, averaging more than 20 million miles per day.

This avalanche of real-world, camera-captured footage, much of it on complex city streets, gives Tesla an unmatched training dataset. Every mile feeds its neural networks, accelerating improvement cycles that lidar-dependent rivals simply cannot match at scale.

Tesla owners themselves will tell you the suite gets better with every release, with each one bringing new features and improvements to the self-driving project.

The $460 target implies roughly 15 percent upside from recent trading levels around $400. While regulatory and safety hurdles remain, BofA’s endorsement signals growing institutional conviction that Tesla’s data advantage is not hype; it’s a tangible moat already delivering billions of miles of proof.
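For anyone who wants to check the upside math, here is a minimal sketch. It assumes a round $400 share price, per the "around $400" figure above; the function name is hypothetical and just for illustration.

```python
def implied_upside(price_target: float, current_price: float) -> float:
    """Return the fractional gain needed to move from current_price to price_target."""
    return (price_target - current_price) / current_price


# $460 target from the BofA note; $400 is the approximate recent trading level.
upside = implied_upside(460.0, 400.0)
print(f"Implied upside: {upside:.0%}")
```

A lower or higher share price on the day changes the result, which is why the figure is only "roughly" 15 percent.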

News

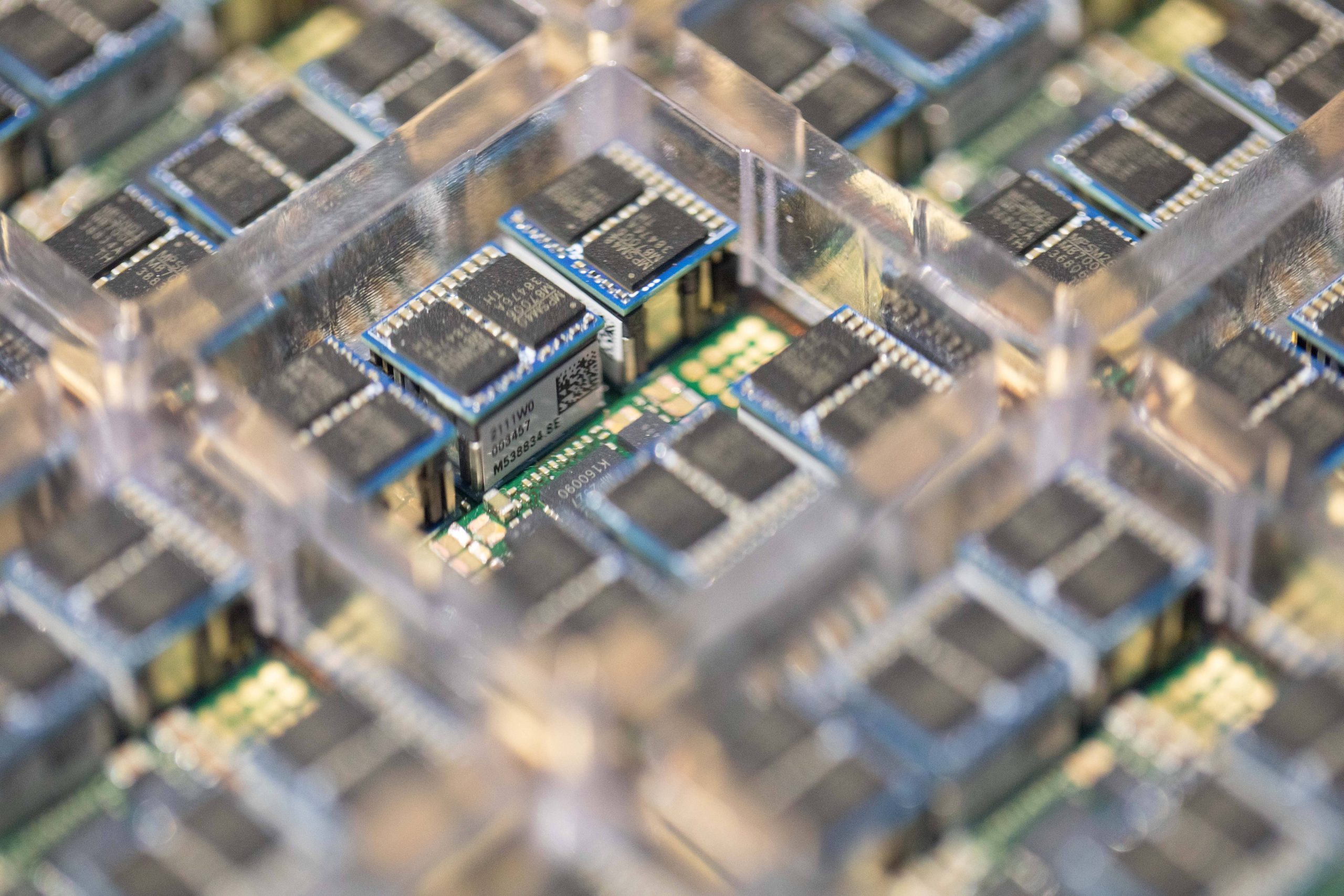

Tesla to discuss expansion of Samsung AI6 production plans: report

Tesla is reportedly discussing an expansion of its next-generation AI chip supply deal with Samsung Electronics.

According to a report from Korean industry outlet The Elec, Tesla purchasing executives are scheduled to meet Samsung officials this week to negotiate additional production volume for the company’s upcoming AI6 chip.

Industry sources cited in the report stated that Tesla is pushing to increase the production volume of its AI6 chip, which will be manufactured using Samsung’s 2-nanometer process.

Tesla previously signed a long-term foundry agreement with Samsung covering AI6 production through December 31, 2033. The deal was reportedly valued at about 22.8 trillion won (roughly $16–17 billion).

Under the existing agreement, Tesla secured approximately 16,000 wafers per month from Samsung. The company has reportedly requested an additional 24,000 wafers per month, which would bring total production capacity to around 40,000 wafers per month if finalized.

Tesla purchasing executives are expected to discuss detailed supply terms during their visit to Samsung this week.

The AI6 chip is expected to support several Tesla technologies. Industry sources stated that the chip could be used for the company’s Full Self-Driving system, the Optimus humanoid robot, and Tesla’s internal AI data centers.

The report also indicated that AI6 clusters could replace the role previously planned for Tesla’s Dojo AI supercomputer. Instead of a single system, multiple AI6 chips would be combined into server-level clusters.

Tesla’s semiconductor collaboration with Samsung dates back several years. Samsung participated in the design of Tesla’s HW3 (AI3) chip and manufactured it using a 14-nanometer process. The HW4 chip currently used in Tesla vehicles was also produced by Samsung using a 5-nanometer node.

Tesla previously planned to split production of its AI5 chip between Samsung and TSMC. However, the company reportedly chose Samsung as the primary partner for the newer AI6 chip.