News

Tesla’s Neural Network adaptability to hardware highlighted in new patent application

Tesla’s developments in the artificial intelligence arena are one of the most important aspects of its current and future technology, and this includes adapting neural networks to various hardware platforms. A recent patent publication titled “System and Method for Adapting a Neural Network Model On a Hardware Platform” provides a bit of insight into how the electric car maker is taking on the challenge.

In general, a neural network is a set of algorithms designed to recognize patterns in data. The data it works with depends on the platform involved and the kind of information that platform can supply to the network, e.g., image data from cameras. Differences between platforms mean differences in how the neural network must be implemented, and adapting it to each one is time-consuming for developers. Just as apps have to be programmed for the operating system or hardware of a particular phone or tablet, so too do neural networks. Tesla’s answer to the adaptation issue is (of course) automation.

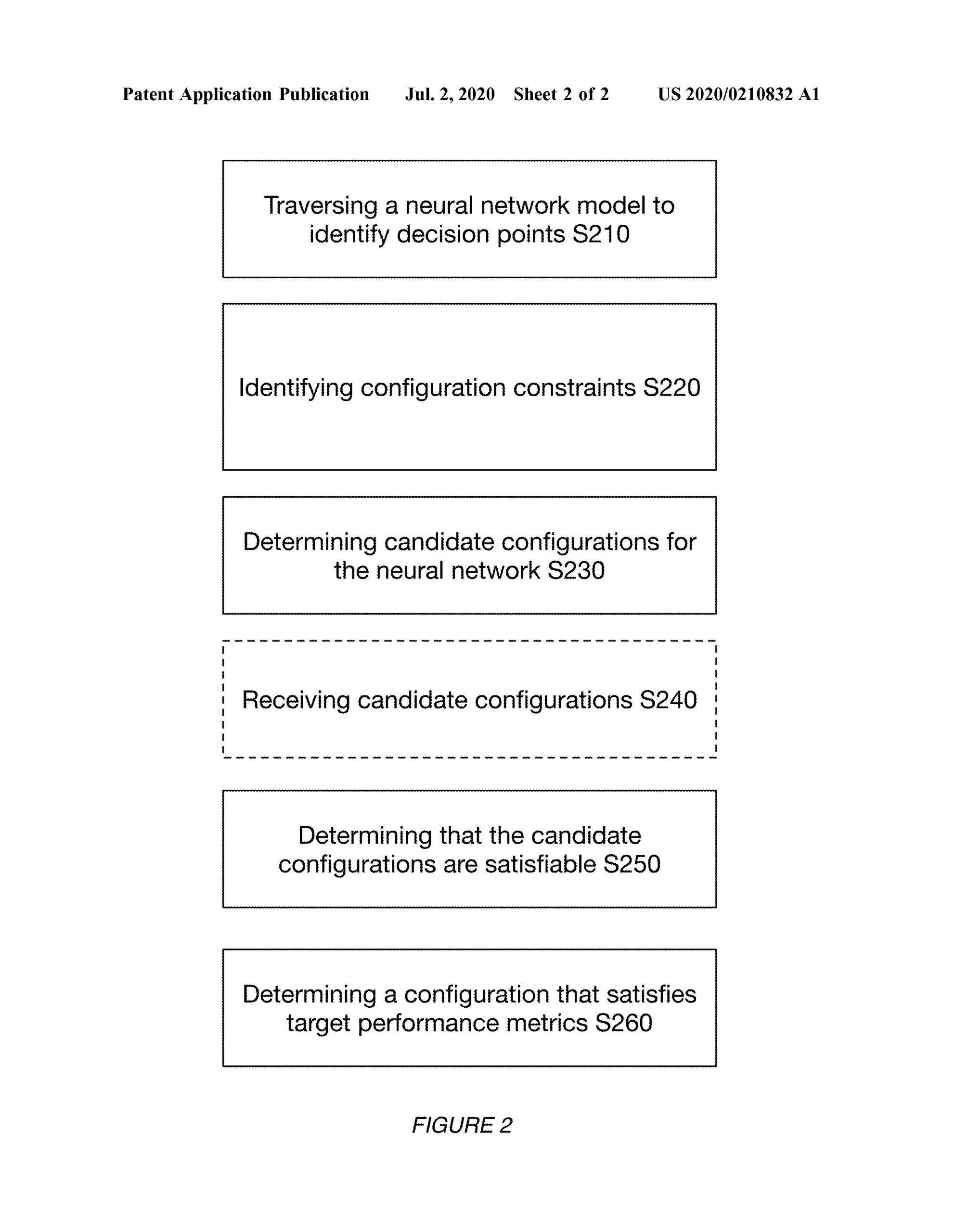

When adapting a neural network to specific hardware, a software developer must make decisions based on the options the hardware supports. Each option usually requires research, a review of hardware documentation, and impact analysis, and the full set of choices eventually adds up to a configuration for the neural network to use. Tesla’s application calls these choices “decision points,” and they are central to how the invention works.

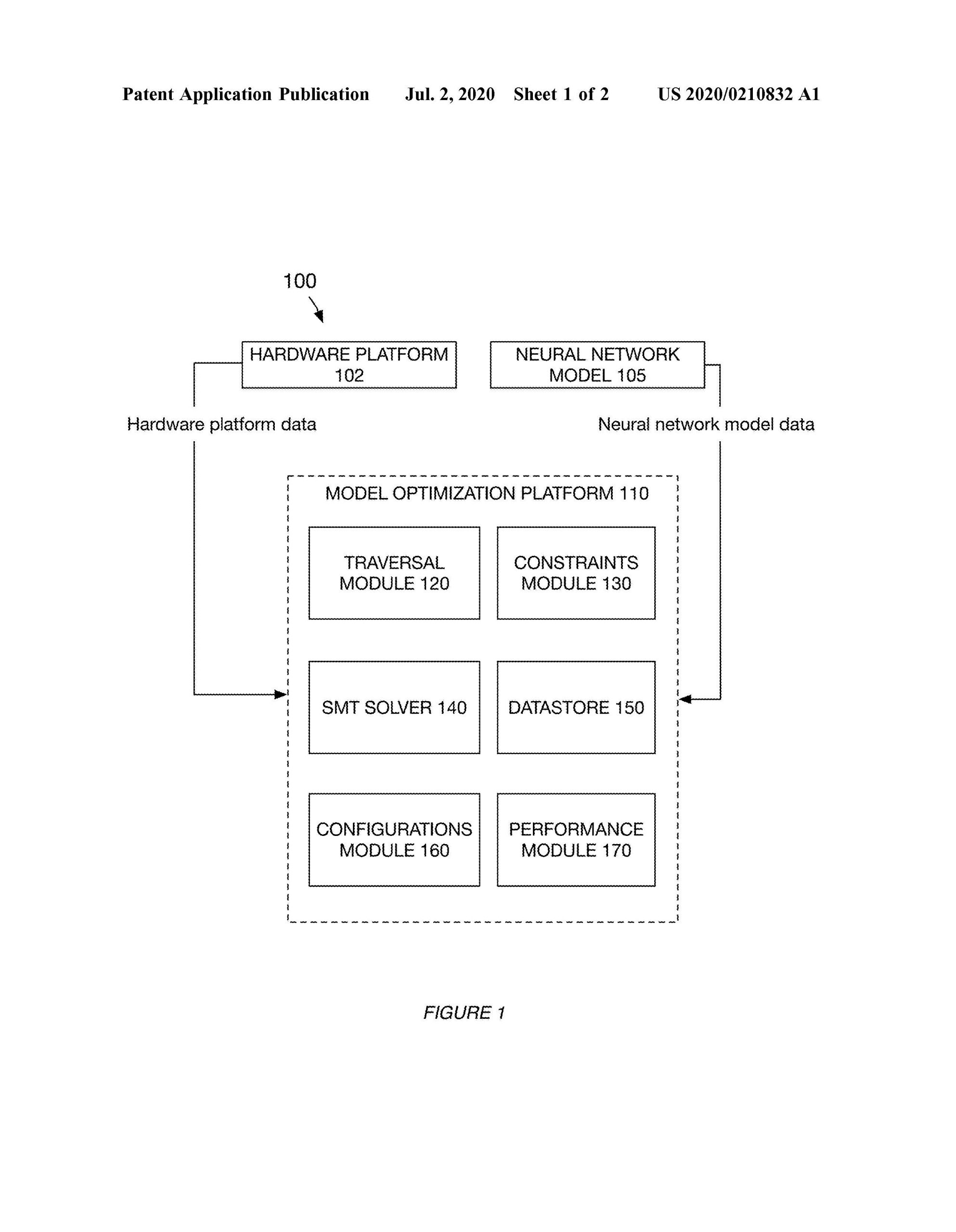

According to the application, once a neural network model and information about the target hardware platform are supplied, software traverses the network to find the decision points, then checks the hardware parameters against those points to produce the available configurations. More specifically, the method considers hardware constraints (such as processing resources and performance metrics) and generates neural network configurations that satisfy the requirements for correct operation. From the application:

“In order to produce a concrete implementation of an abstract neural network, a number of implementation decisions about one or more of system’s data layout, numerical precision, algorithm selection, data padding, accelerator use, stride, and more may be made. These decisions may be made on a per-layer or per-tensor basis, so there can potentially be hundreds of decisions, or more, to make for a particular network. Embodiments of the invention take many factors into account before implementing the neural network because many configurations are not supported by underlying software or hardware platforms, and such configurations will result in an inoperable implementation.”
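The traverse-and-filter idea described in the application can be illustrated with a toy sketch. It is not Tesla’s implementation: the layer names, the two decision points per layer (numerical precision and data layout), and the table of hardware-supported combinations are all hypothetical. It also simplifies by checking each layer independently, whereas a real system may have constraints that span layers.

```python
from itertools import product

# Hypothetical per-layer decision points (illustrative names only).
LAYER_OPTIONS = {
    "conv1": {"precision": ["fp32", "fp16", "int8"], "layout": ["NCHW", "NHWC"]},
    "fc1": {"precision": ["fp32", "fp16"], "layout": ["NCHW"]},
}

# Hypothetical hardware description: the (precision, layout) pairs it supports.
HW_SUPPORTED = {("fp32", "NCHW"), ("fp16", "NHWC"), ("int8", "NHWC")}


def layer_choices(options):
    """Return every (precision, layout) pair for one layer that the hardware supports."""
    return [
        (p, l)
        for p, l in product(options["precision"], options["layout"])
        if (p, l) in HW_SUPPORTED
    ]


def enumerate_configs(layer_options):
    """Traverse the layers and build every whole-network configuration
    in which each layer's decisions are supported by the hardware."""
    names = list(layer_options)
    per_layer = [layer_choices(layer_options[n]) for n in names]
    # The Cartesian product grows quickly with the number of layers,
    # which is why hundreds of decisions make manual adaptation slow.
    return [dict(zip(names, combo)) for combo in product(*per_layer)]


configs = enumerate_configs(LAYER_OPTIONS)
for cfg in configs:
    print(cfg)
```

Unsupported combinations (for example `int8` with `NCHW` here) are discarded before any full configuration is built, mirroring the application’s point that such configurations “will result in an inoperable implementation.”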

Tesla’s invention can also display the neural network configuration information in a graphical interface, making assessment and selection more user-friendly. For instance, different configurations could have different evaluation times, power consumption, or memory consumption. As an analogy, it is a bit like choosing between Track Mode and Range Mode, except the choice is about how you want your AI to run on your hardware.
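One common way to compare configurations across several metrics is to keep only the Pareto-optimal ones, i.e., those that no other configuration beats on every metric at once. The application does not say it uses this technique; the sketch below is an assumption for illustration, and the `Config` fields and all metric values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    name: str
    latency_ms: float  # evaluation time
    power_w: float     # power consumption
    memory_mb: float   # memory consumption


def dominates(a: Config, b: Config) -> bool:
    """True if a is no worse than b on every metric and strictly better on at least one."""
    a_m = (a.latency_ms, a.power_w, a.memory_mb)
    b_m = (b.latency_ms, b.power_w, b.memory_mb)
    return all(x <= y for x, y in zip(a_m, b_m)) and any(x < y for x, y in zip(a_m, b_m))


def pareto_front(configs):
    """Keep only the configurations that no other configuration dominates."""
    return [c for c in configs if not any(dominates(o, c) for o in configs if o is not c)]


# Made-up numbers, for illustration only.
candidates = [
    Config("A", latency_ms=12.0, power_w=9.0, memory_mb=300),
    Config("B", latency_ms=8.0, power_w=11.0, memory_mb=350),
    Config("C", latency_ms=13.0, power_w=10.0, memory_mb=320),  # worse than A on every metric
]

front = pareto_front(candidates)
print([c.name for c in front])                       # ['A', 'B']
print(min(front, key=lambda c: c.power_w).name)      # "Range Mode" pick: A
print(min(front, key=lambda c: c.latency_ms).name)   # "Track Mode" pick: B
```

Filtering first and then picking by a single priority (lowest power or lowest latency) loosely mirrors the Track Mode versus Range Mode analogy above.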

This patent application appears to be one of the products of Tesla’s reported acquisition of DeepScale, an AI startup focused on autonomous driving and on designing neural networks for small devices. The listed inventor, Dr. Michael Driscoll, was a Senior Staff Engineer at DeepScale before becoming a Senior Software Engineer at Tesla. Former DeepScale CEO Dr. Forrest Iandola also moved to Tesla as a Senior Staff Machine Learning Scientist before leaving for independent research this year.

Elon Musk

Why Tesla’s Q3 could be one of its biggest quarters in history

Tesla could stand to benefit from the removal of the $7,500 EV tax credit at the end of Q3.

Tesla has gotten off to a slow start in 2025, as the first half of the year has not been one to remember from a delivery perspective.

However, Q3 could turn out to be one of the best quarters in the company’s history, with the United States potentially driving a reversal of the slow start to the year.

Earlier today, the U.S. House of Representatives officially passed President Trump’s “Big Beautiful Bill,” after it cleared the Senate earlier this week. The bill now heads to President Trump, who aims to sign it by his July 4 deadline.

The bill ends the $7,500 EV tax credit on September 30, 2025. Over the next three months, U.S. buyers looking for an EV will have their last chance to take advantage of the credit; after that, EVs will effectively cost $7,500 more for most buyers.

The tax credit is available to single filers earning under $150,000 per year, heads of household earning under $225,000, and couples filing jointly earning under $300,000.

Ending the tax credit was expected under the Trump administration, whose policies have leaned heavily toward fossil fuels and toward ending what Trump calls an “EV mandate.” He has used the phrase several times in disagreements with Tesla CEO Elon Musk.

Nevertheless, those who have been on the fence about buying a Tesla, or any EV for that matter, will have some decisions to make in the next three months. While every EV maker stands to benefit from this time crunch, Tesla could be the biggest winner because of its sheer volume.

If Tesla plays this correctly, pairing the deadline with incentives such as 0% APR, special lease or financing pricing, or other perks (like free Red, White, or Blue paint for a limited time in celebration of Independence Day), it could see substantial sales volume this quarter.

You can now buy a Tesla in Red, White, and Blue for free until July 14 https://t.co/iAwhaRFOH0

— TESLARATI (@Teslarati) July 3, 2025

Tesla is just a shade under 721,000 deliveries for the year, so it’s on pace for roughly 1.4 million for 2025. That would be a decrease from the roughly 1.8 million cars it delivered in each of the last two years. Traditionally, the second half of the year has produced Tesla’s strongest quarters. Its two best quarters by deliveries were Q4 2024 (495,570 vehicles) and Q4 2023 (484,507), followed by Q2 2023 (466,140) and Q3 2024 (462,890).
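The pace figures above are simple arithmetic, sketched below using only the article’s approximate numbers. Doubling the first half is a naive run-rate that ignores the stronger second halves noted above; the sketch also shows how large the second half would need to be to match the prior ~1.8 million.

```python
# Approximate figures as stated in the article.
h1_2025 = 721_000             # "just a shade under 721,000" deliveries in H1 2025
prior_year_total = 1_800_000  # roughly 1.8 million in each of 2023 and 2024
best_quarter = 495_570        # Q4 2024, Tesla's best quarter

run_rate_2025 = 2 * h1_2025                       # naive full-year pace
decline = 1 - run_rate_2025 / prior_year_total    # fractional drop vs. ~1.8M
needed_h2 = prior_year_total - h1_2025            # H2 deliveries needed to match ~1.8M
needed_per_quarter = needed_h2 / 2

print(f"2025 run-rate: {run_rate_2025:,}")
print(f"Decline vs. ~1.8M: {decline:.1%}")
print(f"H2 needed to match ~1.8M: {needed_h2:,} ({needed_per_quarter:,.0f} per quarter)")
print("Above best quarter ever:", needed_per_quarter > best_quarter)
```

The run-rate works out to about 1.44 million, roughly 20% below the prior two years, and matching ~1.8 million would require about 539,500 deliveries in each of Q3 and Q4, more than Tesla has delivered in any single quarter to date.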

Elon Musk

Tesla Full Self-Driving testing continues European expansion: here’s where

Tesla has launched Full Self-Driving testing in a fifth European country ahead of the suite’s official release there.

Tesla Full Self-Driving is being tested in several countries across Europe as the company prepares to launch its driver assistance suite on the continent.

The company is still working through the regulatory hurdles with the European Union. They are plentiful and difficult to navigate, but Tesla is still making progress as its testing of FSD continues to expand.

Today, it officially began testing in a new country, as more regions open their doors to Tesla. Many owners and potential customers in Europe are awaiting its launch.

On Thursday, Tesla officially confirmed that Full Self-Driving testing is underway in Spain, as the company shared an extensive video of a trip through the streets of Madrid:

Like a fish in water …

FSD Supervised testing in Madrid, Spain

Pending regulatory approval pic.twitter.com/txTgoWseuA

— Tesla Europe & Middle East (@teslaeurope) July 3, 2025

The launch of Full Self-Driving testing in Spain marks the fifth country in which Tesla has started assessing the suite’s performance in the European market.

Over the past several months, Tesla has expanded the list of countries where Full Self-Driving is being tested, which already included Italy, France, the Netherlands, and Germany.

Tesla has already filed applications to launch Full Self-Driving (Supervised) across the European Union, but CEO Elon Musk has indicated that this approval step is what has delayed the suite’s official launch so far.

In mid-June, Musk revealed the frustrations Tesla has felt during its efforts to launch its Full Self-Driving (Supervised) suite in Europe, stating that the holdup can be attributed to authorities in various countries, as well as the EU as a whole:

Waiting for Dutch authorities and then the EU to approve.

Very frustrating and hurts the safety of people in Europe, as driving with advanced Autopilot on results in four times fewer injuries!

Please ask your governing authorities to accelerate making Tesla safer in Europe. https://t.co/QIYCXhhaQp

— Elon Musk (@elonmusk) June 11, 2025

Tesla said last year that it planned to launch Full Self-Driving in Europe in 2025.

Elon Musk

xAI’s Memphis data center receives air permit despite community criticism

xAI welcomed the development in a post on its official xAI Memphis account on X.

Elon Musk’s artificial intelligence startup xAI has secured an air permit from Memphis health officials for its data center project, despite critics’ opposition and pending legal action. The Shelby County Health Department approved the permit this week, allowing xAI to operate 15 mobile gas turbines at its facility.

Air permit granted

The air permit comes after months of protests from Memphis residents and environmental justice advocates, who alleged that xAI violated the Clean Air Act by operating gas turbines without prior approval, according to a report from WIRED.

The Southern Environmental Law Center (SELC) and the NAACP have claimed that xAI installed dozens of gas turbines at its new data center campus without obtaining the Prevention of Significant Deterioration (PSD) permit required for large emission sources.

Local officials previously stated the turbines were considered “temporary” and thus not subject to stricter permitting. xAI applied for an air permit in January 2025, and in June, Memphis Mayor Paul Young acknowledged that the company was operating 21 turbines. SELC, however, has claimed that aerial footage shows the number may be as high as 35.

Critics are not giving up

Civil rights groups have stated that they intend to move forward with legal action. “xAI’s decision to install and operate dozens of polluting gas turbines without any permits or public oversight is a clear violation of the Clean Air Act,” said Patrick Anderson, senior attorney at SELC.

“Over the last year, these turbines have pumped out pollution that threatens the health of Memphis families. This notice paves the way for a lawsuit that can hold xAI accountable for its unlawful refusal to get permits for its gas turbines,” he added.

Sharon Wilson, a certified optical gas imaging thermographer, also described the emissions cloud in Memphis as notable. “I expected to see the typical power plant type of pollution that I see. What I saw was way worse than what I expected,” she said.
