News

Elon Musk’s Neuralink unveils sleek v0.9 device, uses sassy pigs for live brain-machine demo

After another year of successfully staying in the shadows, Elon Musk’s Neuralink has revealed what’s been going on behind the scenes in terms of technological progress. In a livestreamed event on Friday afternoon, the brain-machine interface company gave a demonstration, took questions, and left audiences with even more to mull over than ever.

“The primary purpose of this demo is recruiting,” Musk stated at the very beginning of the presentation. He emphasized that everyone will face a brain or spine problem at some point in their life, and that because such problems are inherently electrical, it takes electrical solutions to solve them. Neuralink’s goal is to solve these problems for anyone who wants them solved, with a procedure that is simple, reversible, and free of negative effects.

Two pigs were used for the ‘real-time’ demonstration promised in the days leading up to the event. The first, Gertrude, had been living with a Neuralink implant for two months and was shown to be healthy and happy. The second, Dorothy, had previously had an implant installed and then removed, with no side effects afterward.

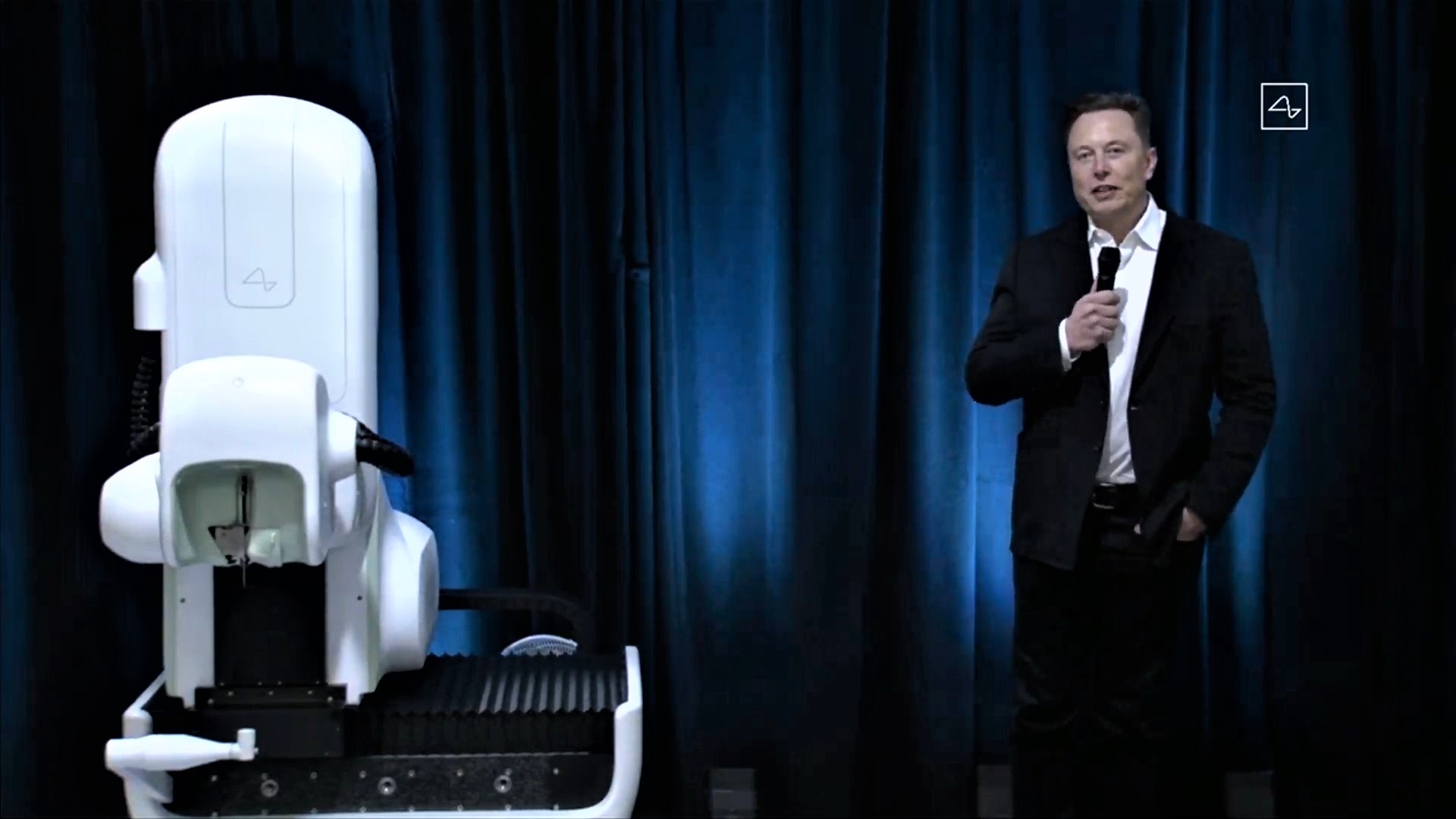

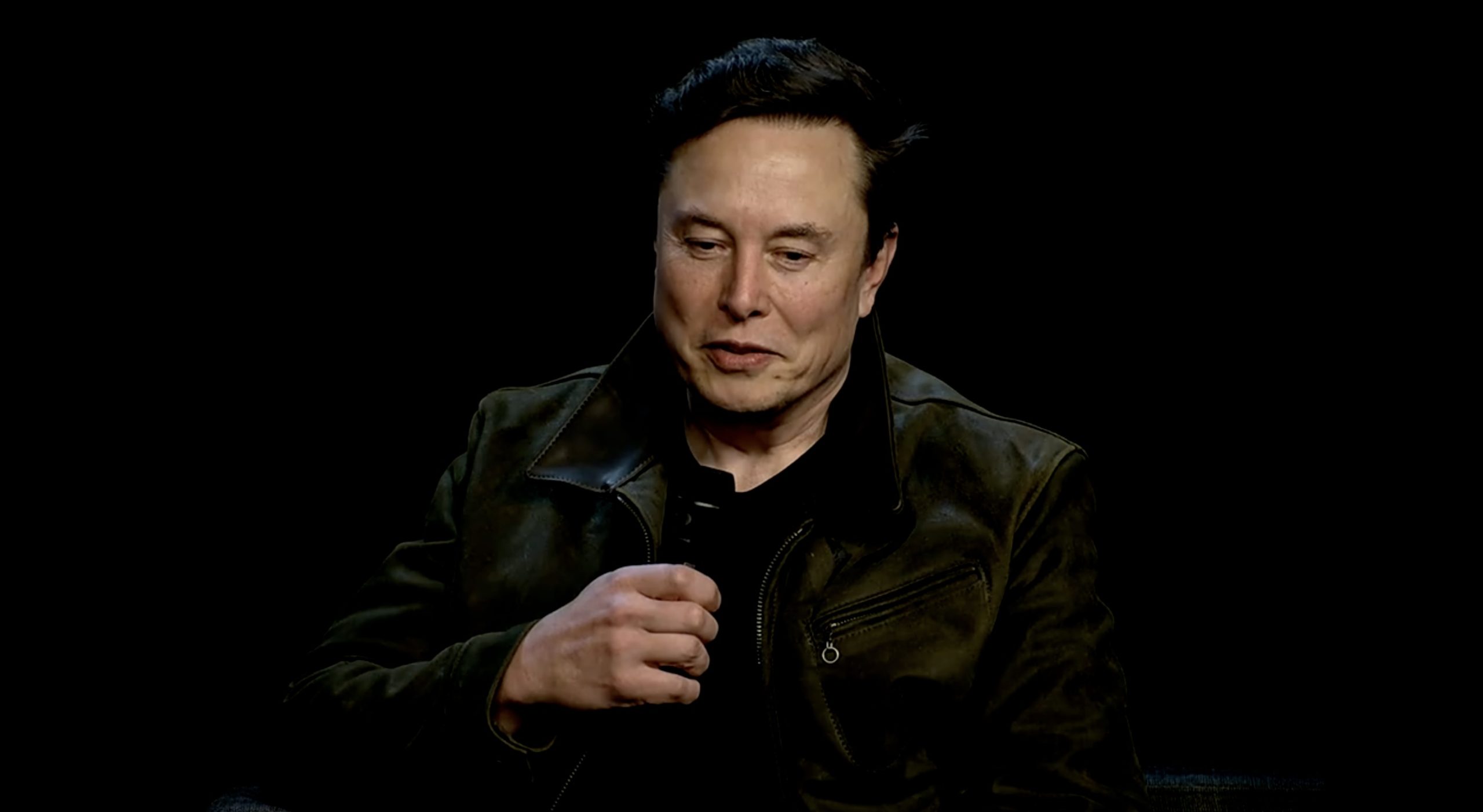

- Elon Musk shows off the Neuralink v0.9 device (Credit: Neuralink)

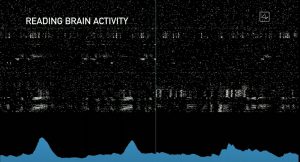

After a bit of a delay caused by the amusingly sassy Neuralink-implanted pigs, livestream viewers and the in-house audience saw Gertrude’s device in action. Notably, the implants could predict the pigs’ limb movements from the neural activity being read. Each reading was displayed on a screen, with musical notes playing as the data was processed.

Overall, here are some of the main takeaways from the presentation.

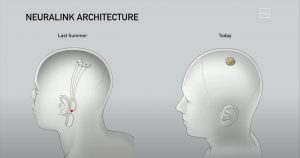

- The Neuralink implant device has been dramatically simplified since summer 2019. Its design will be very low-profile and nearly invisible from the outside, leaving only a small scar that could be covered by hair. “It’s like a Fitbit in your skull with tiny wires,” Musk half-joked. “I could have it right now and you wouldn’t even know. Maybe I do!”

- The implant device is inductively charged, in the same way many smartphones are charged wirelessly. It will also offer functions similar to those of today’s smartwatches.

- A “smart” robot installs the device. Building that robot requires engineering talent, hence the recruiting focus of the Neuralink event. The “V2” robot featured in this year’s presentation looks like a step up from last year’s machine.

- The electrodes are installed without general anesthesia, without bleeding, and without noticeable damage. The current robot has performed every implant installation to date.

- The implant can be installed and removed without any side effects.

- You can have multiple Neuralink devices implanted and they will work seamlessly.

- The implant would pair with an app on the user’s phone.

- Neuralink received a ‘breakthrough device’ designation from the FDA in July, and the company is working with the agency to make the technology as safe as possible.

- The device will eventually be implantable deeper within the brain, giving it access to a greater range of functions beyond the upper cortex, such as those involved in motor function, depression, and addiction.

- Getting a Neuralink should take less than an hour, without the need for general anesthesia. Users could have the surgery done in the morning and go home later the same day.

The idea for Musk’s AI-focused brain venture first seemed to really take off after his appearance at Vox Media’s Recode Code Conference in 2016. The CEO had discussed the concept of a neural lace device on several occasions up to that point and suggested at the conference that he might be willing to tackle the challenge himself. A few months later, he revealed that he was in fact working on the idea, which was detailed at great length by Tim Urban on his website Wait But Why.

“He started Neuralink to accelerate our pace into the Wizard Era—into a world where he says that ‘everyone who wants to have this AI extension of themselves could have one, so there would be billions of individual human-AI symbiotes who, collectively, make decisions about the future.’ A world where AI really could be of the people, by the people, for the people,” Urban summarized. Given that bigger-picture perspective, the 2020 Neuralink event seems even more impactful.

Neuralink’s official Twitter account opened the virtual floor to questions using the #askneuralink hashtag the night before the event, and several of those questions were addressed during the presentation. Musk himself fanned the growing curiosity in the hours beforehand. “Giant gap between experimental medical device for use only in patients with extreme medical problems & widespread consumer use. This is way harder than making a small number of prototypes,” Musk responded to one question about the mass-market viability of a future Neuralink product line.

https://twitter.com/flcnhvy/status/1299422178329362437

Also in the days before the Neuralink event, Musk teased a few more details about what to expect. “Live webcast of working @Neuralink device,” he said. Just before confirming the device demonstration, he revealed that version two of the robot first shown in the 2019 progress update was not yet at the level of a LASIK eye surgery machine, though it was only a few years away from it.

Tesla Australia confirms six-seat Model Y L launch in 2026

Tesla has confirmed that the larger six-seat Model Y L will launch in Australia and New Zealand in 2026.

The confirmation was shared by techAU through a media release from Tesla Australia and New Zealand.

The Model Y L expands the Model Y lineup by offering additional seating capacity for customers seeking a larger electric SUV. Compared with the standard five-seat Model Y, the Model Y L features a longer body and extended wheelbase to accommodate an additional row of seating.

The Model Y L is already being produced at Tesla’s Gigafactory Shanghai for the Chinese market, and it will be manufactured in right-hand-drive configuration for markets such as Australia and New Zealand.

Tesla Australia and New Zealand confirmed the vehicle will feature seating for six passengers.

“As shown in pictures from its launch in China, Model Y L will have a new seating configuration providing room for 6 occupants,” Tesla Australia and New Zealand said in comments shared with techAU.

Instead of a traditional seven-seat arrangement, the Model Y L uses a 2-2-2 layout. The middle row features two individual seats, allowing easier access to the third row while providing additional space for passengers.

Tesla Australia and New Zealand also confirmed that the Model Y L will be covered by the company’s updated warranty structure beginning in 2026.

“As with all new Tesla Vehicles from the start of 2026, the Model Y L will come with a 5-year unlimited km vehicle warranty and 8 years for the battery,” the company said.

The updated policy increases Tesla’s vehicle warranty from the previous four-year or 80,000-kilometer coverage.

Battery and drive unit warranties are unchanged and vary by variant. Rear-wheel-drive models carry an eight-year or 160,000-kilometer warranty, while Long Range and Performance variants are covered for eight years or 192,000 kilometers.

Tesla has not yet announced official pricing or range figures for the Model Y L in Australia.

Tesla Roadster patent hints at radical seat redesign ahead of reveal

A newly published Tesla patent could offer one of the clearest signals yet that the long-awaited next-generation Roadster is nearly ready for its public debut.

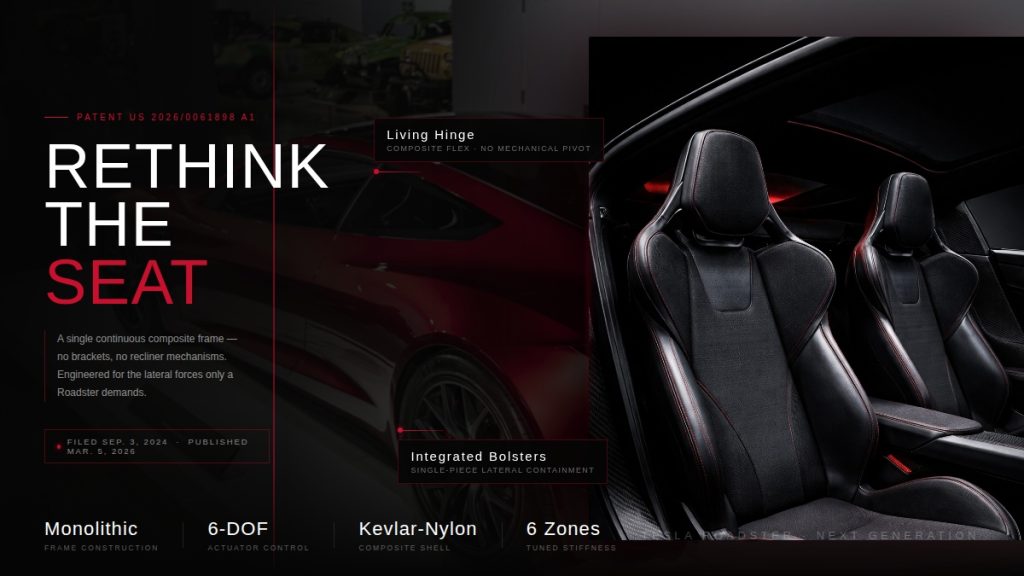

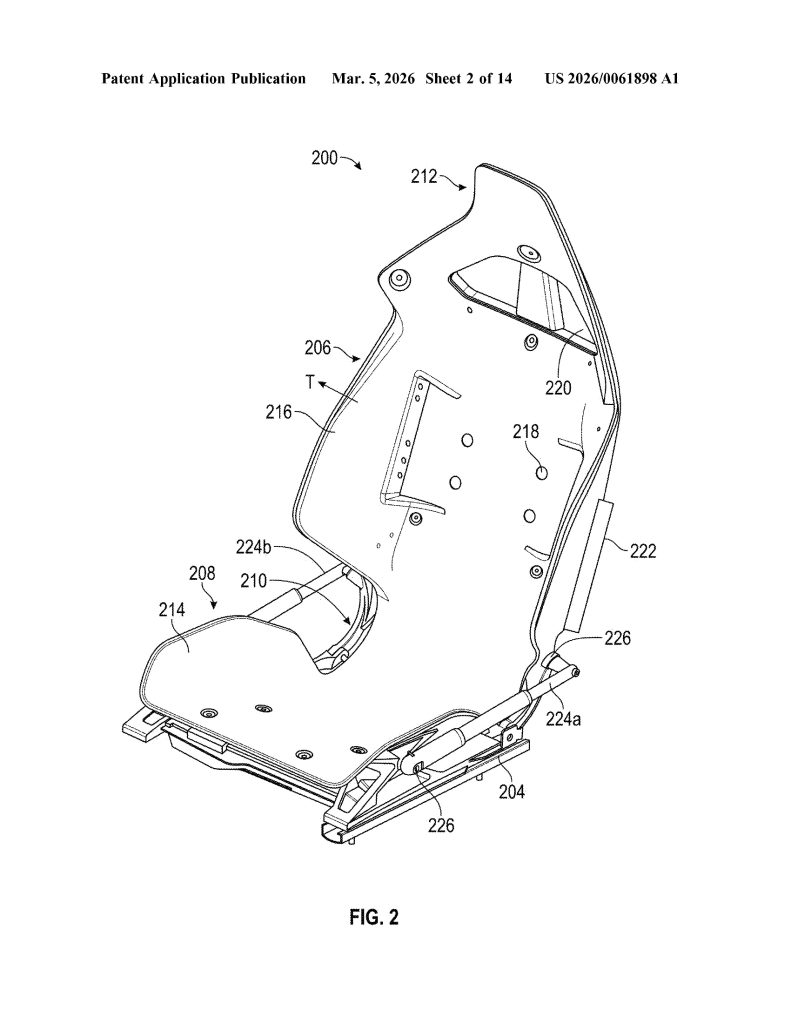

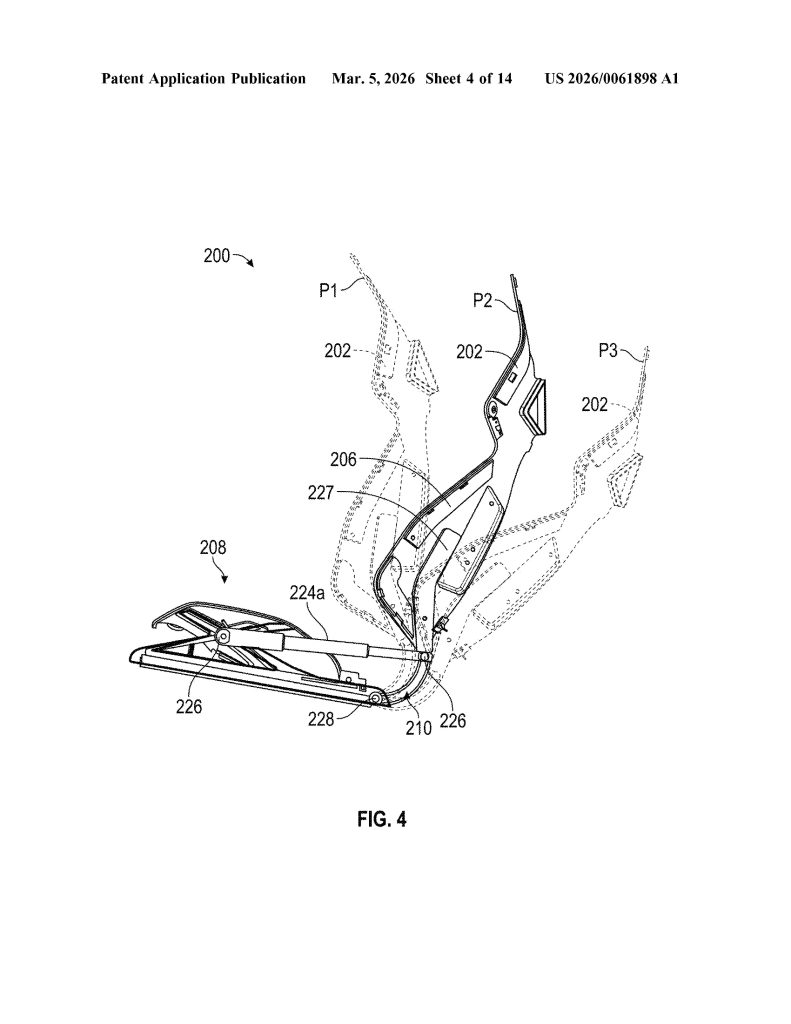

Patent No. US 20260061898 A1, published on March 5, 2026, describes a “vehicle seat system” built around a single continuous composite frame – a dramatic departure from the dozens of metal brackets, recliner mechanisms, and rivets that make up a traditional car seat. Tesla is calling it a monolithic structure, with the seat portion, backrest, headrest, and bolsters all thermoformed as one unified piece.

The approach mirrors Tesla’s broader manufacturing philosophy. The same company that pioneered massive aluminum castings to eliminate hundreds of body components is now applying that logic to the cabin. Fewer parts mean fewer potential failure points, less weight, and a cleaner assembly process overall.

The timing of the filing is difficult to ignore. Elon Musk has publicly targeted April 1, 2026 as the date for an “unforgettable” Roadster design reveal, and two new Roadster trademarks were filed just last month. Now comes a patent describing a seat architecture suited to a hypercar, one that Tesla has promised will hit 60 mph in under two seconds.

The Roadster, originally unveiled in 2017, has been one of Tesla’s most anticipated yet most delayed products. With a target price around $200,000 and engineering ambitions to match, it is being positioned as the ultimate showcase for what Tesla’s technology can do.

The patent was first flagged by @seti_park on X.

Tesla Roadster Monolithic Seat: Feature Highlights via US Patent 20260061898 A1

- Single Continuous Frame (Monolithic Construction). The core invention is a seat assembly built from one continuous frame that integrates the seat portion, backrest portion, and hinge into a single component — eliminating the need for separate structural parts and mechanical joints typical in conventional seats.

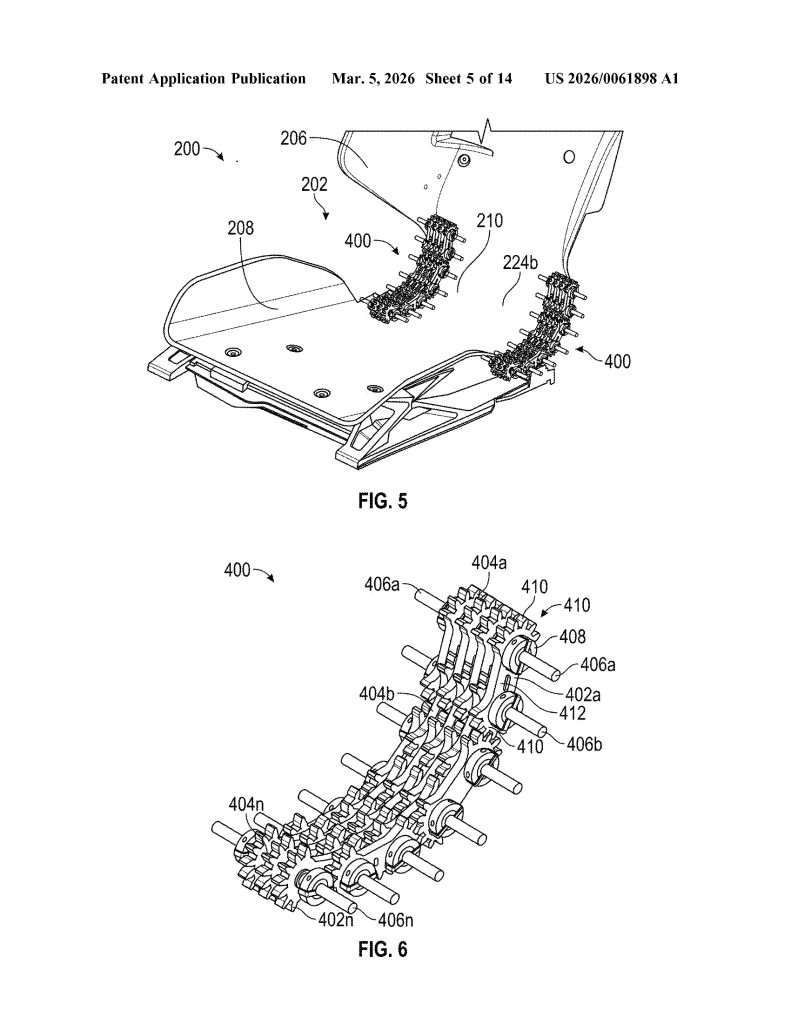

- Integrated Flexible Hinge. Rather than a traditional mechanical recliner, the hinge is built directly into the continuous frame and is designed to flex, allowing the backrest to move relative to the seat portion. The hinge can be implemented as a fiber composite leaf spring or an assembly of rigid linkages.

- Thermoformed Anisotropic Composite Material. The continuous frame is manufactured via thermoforming from anisotropic composite materials, including fiberglass-nylon, fiberglass-polymer, nylon carbon composite, Kevlar-nylon, or Kevlar-polymer composites, enabling a molded-to-shape monolithic structure.

- Regionally Tuned Stiffness Zones. The frame is engineered with up to six distinct stiffness regions (R1–R6) across the seat, backrest, hinge, headrest, and bolsters. Each zone can have a different stiffness, allowing precise ergonomic and structural tuning without adding separate components.

- Linkage Assembly Hinge Mechanism. The hinge incorporates one or more linkage assemblies consisting of multiple interlocking links with gears, connected by rods. When driven by motors or actuators, these linkages act as a flexible member to control backrest movement along a precise, ergonomically optimized trajectory.

- Multi-Actuator Six-Degree-of-Freedom Positioning System. The seat uses four distinct actuator pairs, all controlled by a central controller. These actuators work in coordinated combinations to achieve fore/aft, height, cushion tilt, and backrest rotation adjustments simultaneously.

- ECU-Based Controller Architecture. An Electronic Control Unit (ECU) and programmable controller manage all seat actuators, receive user input via a user interface (touchscreen, buttons, or switches), and incorporate sensor feedback to confirm and maintain desired seat positions, essentially making this a software-driven seat system.

- Airbag-Integrated Bolster Deployment System. The backrest bolsters (216) are geometrically shaped and sized to guide airbag deployment along a specific, pre-configured trajectory. Left and right bolsters can have different shapes so that each guides its respective airbag along a distinct trajectory, improving occupant protection.

- Ventilation Holes Formed into the Backrest. The continuous frame includes one or more ventilation holes formed directly into the backrest portion, configured to either receive airflow into or deliver airflow from the seat frame — enabling passive or active thermal comfort without requiring separate ventilation components.

- Soft Trim Recess for Tool-Free Integration. The headrest and backrest portions together define a molded recess, specifically designed to receive and secure a soft trim component (foam, fabric, or cushioning) directly into the continuous frame, eliminating the need for separate attachment hardware and simplifying final assembly.
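The controller highlight describes a closed feedback loop: the ECU commands the actuators, reads sensor feedback, and keeps adjusting until the seat holds the requested position. The patent summary does not spell out a control law, so the following Python sketch is only an illustration under stated assumptions. It assumes a simple proportional controller, hypothetical axis names based on the four adjustments listed above (fore/aft, height, cushion tilt, backrest rotation), and a simulated sensor standing in for real hardware. None of the names, gains, or units come from Tesla's filing.

```python
from dataclasses import dataclass, field

# Hypothetical axis names, based on the four adjustments in the patent summary.
AXES = ("fore_aft", "height", "cushion_tilt", "backrest_angle")


@dataclass
class SeatController:
    """Toy closed-loop seat controller (an assumption, not Tesla's ECU logic)."""

    gain: float = 0.5        # hypothetical proportional gain
    tolerance: float = 0.01  # hypothetical acceptable error per axis
    position: dict = field(default_factory=lambda: {a: 0.0 for a in AXES})

    def read_sensors(self) -> dict:
        # Stand-in for real position sensors: report the simulated state.
        return dict(self.position)

    def step(self, target: dict) -> bool:
        """Move every axis toward its target in the same cycle.

        Returns True once all measured errors are within tolerance.
        """
        measured = self.read_sensors()
        done = True
        for axis in AXES:
            error = target[axis] - measured[axis]
            if abs(error) > self.tolerance:
                done = False
                # Simulated actuator command proportional to the remaining error.
                self.position[axis] += self.gain * error
        return done

    def move_to(self, target: dict, max_steps: int = 100) -> int:
        """Run the feedback loop and return how many cycles it took."""
        for i in range(max_steps):
            if self.step(target):
                return i
        raise RuntimeError("seat did not reach the target position")
```

For example, `SeatController().move_to({"fore_aft": 1.0, "height": 0.5, "cushion_tilt": 0.2, "backrest_angle": -0.3})` adjusts all four axes together and returns the number of cycles needed before each one is within tolerance. A proportional loop is used here only because it is the simplest way to show the sensor-feedback idea; a real seat controller would likely also need travel limits, rate limiting, and fault handling.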

Elon Musk’s xAI plans $659M expansion at Memphis supercomputer site

Elon Musk’s artificial intelligence company xAI has filed a permit to construct a new building at its growing data center complex outside Memphis, Tennessee.

According to a report from Data Center Dynamics, xAI plans to spend about $659 million on a new facility adjacent to its Colossus 2 data center. Permit documents submitted to the Memphis and Shelby County Division of Planning and Development show the proposed structure would be a four-story building totaling about 312,000 square feet.

The new building is planned for a 79-acre parcel located at 5414 Tulane Road, next to xAI’s Colossus 2 data center site. Permit filings indicate the structure would reach roughly 75 feet high, though the specific function of the building has not been disclosed.

The filing was first reported by the Memphis Business Journal.

xAI uses its Memphis data centers to power Grok, the company’s flagship large language model. The company entered the Memphis area in 2024, launching its Colossus supercomputer in a repurposed Electrolux factory located in the Boxtown district.

The company later acquired land for the Colossus 2 data center in March last year. That facility came online in January.

A third data center is also planned for the cluster across the Tennessee–Mississippi border. Musk has stated that the broader campus could eventually provide access to about 2 gigawatts of compute power.

The Memphis cluster is also tied to new power infrastructure commitments announced by SpaceX President Gwynne Shotwell. During a White House event with United States President Donald Trump, Shotwell stated that xAI would develop 1.2 gigawatts of power for its supercomputer facility as part of the administration’s “Ratepayer Protection Pledge.”

“As you know, xAI builds huge supercomputers and data centers and we build them fast. Currently, we’re building one on the Tennessee-Mississippi state line… xAI will therefore commit to develop 1.2 GW of power as our supercomputer’s primary power source. That will be for every additional data center as well…

“The installation will provide enough backup power to power the city of Memphis, and more than sufficient energy to power the town of Southaven, Mississippi where the data center resides. We will build new substations and invest in electrical infrastructure to provide stability to the area’s grid,” Shotwell said.

Shotwell also stated that xAI plans to support the region’s water supply through new infrastructure tied to the project. “We will build state-of-the-art water recycling plants that will protect approximately 4.7 billion gallons of water from the Memphis aquifer each year. And we will employ thousands of American workers from around the city of Memphis on both sides of the TN-MS border,” she said.