News

Driver of Model X crash in Montana pens open letter to Musk, calls Tesla drivers “lab rats” [Updated]

Pang, the driver of the Model X that crashed in Montana earlier this month, has posted an open letter to Elon Musk and Tesla asking the company to “take responsibility for the mistakes of Tesla products.” He accuses Tesla of using drivers as “lab rats” to test its Autopilot system.

In an email sent to us and also uploaded to the Tesla Motors Club forum, Pang provides a detailed account of what happened the day of the crash. He says he and a friend drove about 600 miles on Interstate 90 on the way to Yellowstone National Park. When he exited the highway to get on Montana route 2, he drove for about a mile, saw conditions were clear, and turned on Autopilot again. Pang describes what happened next as follows:

“After we drove about another mile on state route 2, the car suddenly veered right and crashed into the safety barrier post. It happened so fast, and we did not hear any warning beep. Autopilot did not slow down at all after the crash, but kept going in the original speed setting and continued to crash into more barrier posts in high speed. I managed to step on the break, turn the car left and stopped the car after it crashed 12 barrier posts.

“After we stopped, we heard the car making abnormal loud sound. Afraid that the battery was broken or short circuited, we got out and ran away as fast as we could. After we ran about 50 feet, we found the sound was the engine were still running in high speed. I returned to the car and put it in parking, that is when the loud sound disappeared.”

Pang goes on to explain how his Tesla Model X driving on Autopilot continued to travel on its own even after veering off the road and crashing into a roadside stake. “I was horrified by the fact that the Tesla autopilot did not slow down the car at all after the intial crash. After we crashed on the first barrier post, autopilot continued to drive the car with the speed of 55 to 60 mph, and crashed another 11 posts. Even after I stopped the car, it was still trying to accelerate and spinning the engine in high speed. What if it is not barrier posts on the right side, but a crowd?”

Photo credit: Steven Xu

After the accident, Tesla reviewed the driving logs from the Model X and reported that the car was operating for more than two miles with no hands on the steering wheel, despite numerous alarms and warnings issued by the car. Pang says he never heard any audible warnings. Comments on TMC range from the incredulous to the acerbic. Most feel Teslas simply don’t operate the way Pang said his car did. Among other discrepancies, the cars are designed to put themselves in Park if the driver’s door is opened with no one in the driver’s seat.

But that hasn’t stopped Pang from voicing his strong opinions on Tesla’s Autopilot system. “It is clear that Tesla is selling a beta product with bugs to consumers, and ask the consumers to be responsible for the liability of the bugging autopilot system. Tesla is using all Tesla drivers as lab rats.”

A car that crashes but continues to accelerate is certainly a scary thought. There is no way to resolve the discrepancy between what Pang says happened and Tesla’s account of what occurred. According to an updated email sent to us by a friend who serves as English translator for the Mandarin-speaking Pang, Tesla has since reached out to Pang to address the matter.

The original open letter from Pang reads as follows:

A Public Letter to Mr. Musk and Tesla For The Sake Of All Tesla Driver’s Safety

From the survivor of the Montana Tesla autopilot crash

My name is Pang. On July 8, 2016, I drove my Tesla Model X from Seattle heading to Yellowstone Nation Park, with a friend, Mr. Huang, in the passenger seat. When we were on highway I90, I turned on autopilot, and drove for about 600 miles. I switched autopilot off while we exited I90 in Montana to state route 2. After about 1 mile, we saw that road condition was good, and turned on autopilot again. The speed setting was between 55 and 60 mph. After we drove about another mile on state route 2, the car suddenly veered right and crashed into the safety barrier post. It happened so fast, and we did not hear any warning beep. Autopilot did not slow down at all after the crash, but kept going in the original speed setting and continued to crash into more barrier posts in high speed. I managed to step on the break, turn the car left and stopped the car after it crashed 12 barrier posts. After we stopped, we heard the car making abnormal loud sound. Afraid that the battery was broken or short circuited, we got out and ran away as fast as we could. After we ran about 50 feet, we found the sound was the engine were still running in high speed. I returned to the car and put it in parking, that is when the loud sound disappeared. Our cellphone did not have coverage, and asked a lady passing by to call 911 on her cellphone. After the police arrived, we found the right side of the car was totally damaged. The right front wheel, suspension, and head light flied off far, and the right rear wheel was crashed out of shape. We noticed that the barrier posts is about 2 feet from the white line. The other side of the barrier is a 50 feet drop, with a railroad at the bottom, and a river next. If the car rolled down the steep slope, it would be really bad.

Concerning this crash accident, we want to make several things clear:

1. We know that while Tesla autopilot is on but the driver’s hand is not on the steering wheel, the system will issue warning beep sound after a while. If the driver’s hands continue to be off the steering wheel, autopilot will slow down, until the driver takes over both the steering wheel and gas pedal. But we did not hear any warning beep before the crash, and the car did not slow down either. It just veered right in a sudden and crashed into the barrier posts. Apparently the autopilot system malfunctioned and caused the crash. The car was running between 55 and 60 mph, and the barrier posts are just 3 or 4 feet away. It happened in less than 1/10 of a second from the drift to crash. A normal driver is impossible to avoid that in such a short time.

2. I was horrified by the fact that the Tesla autopilot did not slow down the car at all after the intial crash. After we crashed on the first barrier post, autopilot continued to drive the car with the speed of 55 to 60 mph, and crashed another 11 posts. Even after I stopped the car, it was still trying to accelerate and spinning the engine in high speed. What if it is not barrier posts on the right side, but a crowd?

3. Tesla never contacted me after the accident. Tesla just issued conclusion without thorough investigation, but blaming me for the crash. Tesla were trying to cover up the lack of dependability of the autopilot system, but blaming everything on my hands not on the steering wheel. Tesla were not interested in why the car veered right suddenly, nor why the car did not slow down during the crash. It is clear that Tesla is selling a beta product with bugs to consumers, and ask the consumers to be responsible for the liability of the bugging autopilot system. Tesla is using all Tesla drivers as lab rats. We are willing to talk to Tesla concerning the accident anytime, anywhere, in front of the public.

4. CNN’s article later about the accident was quoting out of context of our interview. I did not say that I do not know either Tesla or me should be responsible for the accident. I might consider buying another Tesla only if they can iron out the instability problems of their system.

As a survivor of such a bad accident, a past fan of the Tesla technology, I now realized that life is the most precious fortune in this world. Any advance in technology should be based on the prerequisite of protecting life to the maximum extend. In front of life and death, any technology has no right to ignore life, any pursue and dream on technology should first show the respect to life. For the sake of the safety of all Tesla drivers and passengers, and all other people sharing the road, Mr. Musk should stand up as a man, face up the challenge to thoroughly investigate the cause of the accident, and take responsibility for the mistakes of Tesla product. We are willing to publicly talk to you face to face anytime to give you all the details of what happened. Mr. Musk, you should immediately stop trying to cover up the problems of the Tesla autopilot system and blame the consumers.

Tesla’s Response on TMC

TM Ownership, Saturday at 12:11 PM

Dear Mr. Pang,

We were sorry to hear about your accident, but we were very pleased to learn both you and your friend were ok when we spoke through your translator on the morning of the crash (July 9). On Monday immediately following the crash (July 11), we found a member of the Tesla team fluent in Mandarin and called to follow up. When we were able to make contact with your wife the following day, we expressed our concern and gathered more information regarding the incident. We have since made multiple attempts (one Wednesday, one Thursday, and one Friday) to reach you to discuss the incident, review detailed logs, and address any further concerns and have not received a call back.

As is our standard procedure with all incidents experienced in our vehicles, we have conducted a thorough investigation of the diagnostic log data transmitted by the vehicle. Given your stated preference to air your concerns in a public forum, we are happy to provide a brief analysis here and welcome a return call from you. From this data, we learned that after you engaged Autosteer, your hands were not detected on the steering wheel for over two minutes. This is contrary to the terms of use when first enabling the feature and the visual alert presented you every time Autosteer is activated. As road conditions became increasingly uncertain, the vehicle again alerted you to put your hands on the wheel. No steering torque was then detected until Autosteer was disabled with an abrupt steering action. Immediately following detection of the first impact, adaptive cruise control was also disabled, the vehicle began to slow, and you applied the brake pedal.

Following the crash, and once the vehicle had come to rest, the passenger door was opened but the driver door remained closed and the key remained in the vehicle. Since the vehicle had been left in Drive with Creep Mode enabled, the motor continued to rotate. The diagnostic data shows that the driver door was later opened from the outside and the vehicle was shifted to park. We understand that at night following a collision the rotating motors may have been disconcerting, even though they were only powered by minimal levels of creep torque. We always seek to learn from customer concerns, and we are looking into this behavior to see if it can be improved. We are also continually studying means of better encouraging drivers to adhere to the terms of use for our driver assistance features.

We are still seeking to speak with you. Please contact Tesla service so that we can answer any further questions you may have.

Sincerely,

The Tesla team

News

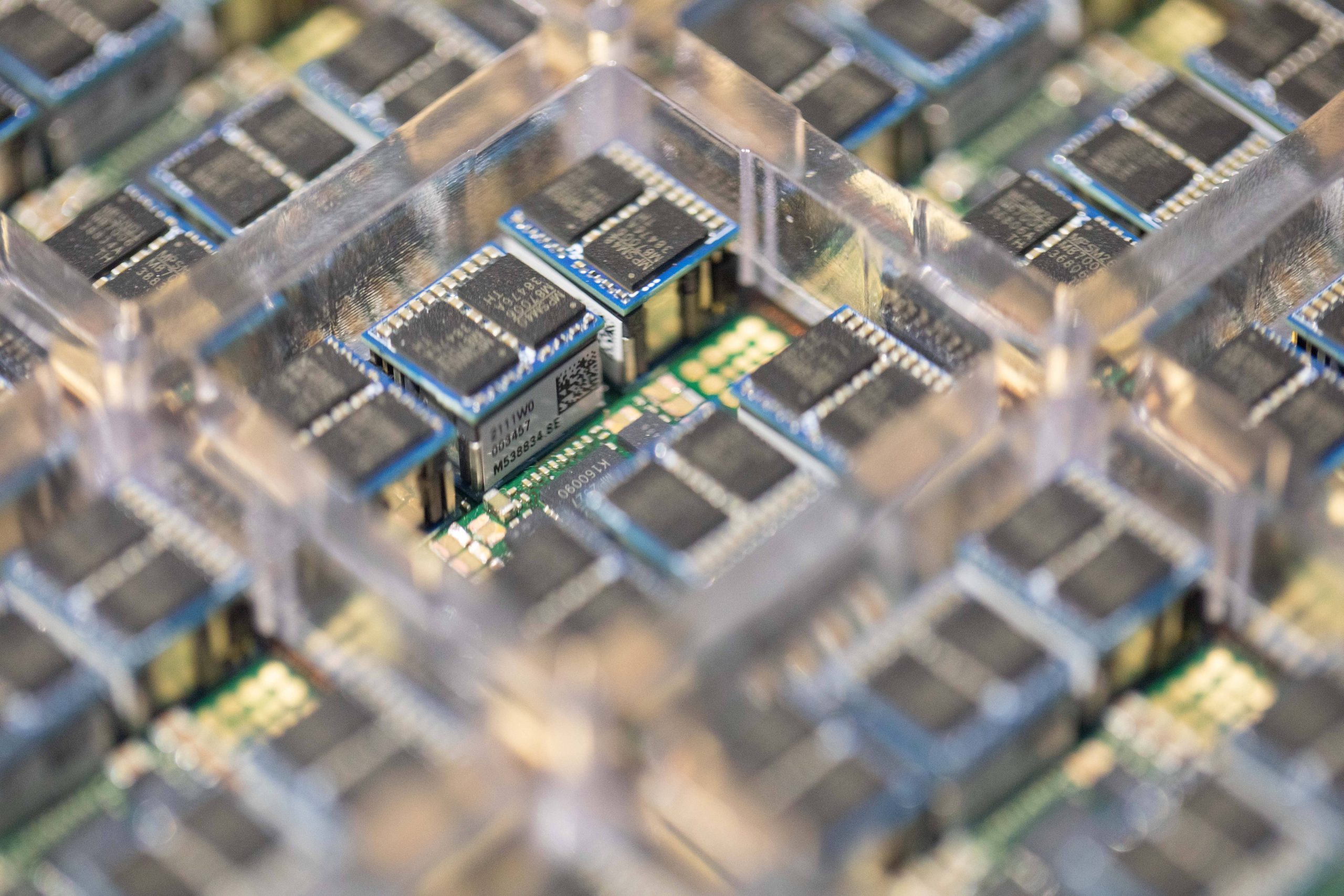

Tesla to discuss expansion of Samsung AI6 production plans: report

Tesla is reportedly discussing an expansion of its next-generation AI chip supply deal with Samsung Electronics.

According to a report from Korean industry outlet The Elec, Tesla purchasing executives are scheduled to meet Samsung officials this week to negotiate additional production volume for the company’s upcoming AI6 chip.

Industry sources cited in the report stated that Tesla is pushing to increase the production volume of its AI6 chip, which will be manufactured using Samsung’s 2-nanometer process.

Tesla previously signed a long-term foundry agreement with Samsung covering AI6 production through December 31, 2033. The deal was reportedly valued at about 22.8 trillion won (roughly $16–17 billion).

Under the existing agreement, Tesla secured approximately 16,000 wafers per month from the facility. The company has reportedly requested an additional 24,000 wafers per month, which would bring total production capacity to around 40,000 wafers per month if finalized.

Tesla purchasing executives are expected to discuss detailed supply terms during their visit to Samsung this week.

The AI6 chip is expected to support several Tesla technologies. Industry sources stated that the chip could be used for the company’s Full Self-Driving system, the Optimus humanoid robot, and Tesla’s internal AI data centers.

The report also indicated that AI6 clusters could replace the role previously planned for Tesla’s Dojo AI supercomputer. Instead of a single system, multiple AI6 chips would be combined into server-level clusters.

Tesla’s semiconductor collaboration with Samsung dates back several years. Samsung participated in the design of Tesla’s HW3 (AI3) chip and manufactured it using a 14-nanometer process. The HW4 chip currently used in Tesla vehicles was also produced by Samsung using a 5-nanometer node.

Tesla previously planned to split production of its AI5 chip between Samsung and TSMC. However, the company reportedly chose Samsung as the primary partner for the newer AI6 chip.

Elon Musk

Elon Musk: Tesla could be first to build AGI in humanoid form

Elon Musk predicted that Tesla could become one of the developers of Artificial General Intelligence (AGI) in humanoid form. Musk’s statement was shared in a post on social media platform X.

In his post, Musk stated that “Tesla will be one of the companies to make AGI and probably the first to make it in humanoid/atom-shaping form.”

The comment comes as Tesla expands development of its Optimus humanoid robot.

During Tesla’s Q4 earnings report, Elon Musk stated that production of the Model S and Model X would be phased out at its Fremont, California, facility. The vehicles’ production line will then be converted to a pilot line for Optimus. Tesla is looking to produce 1 million units of the humanoid robots annually to start.

Musk has previously stated that Optimus could eventually function as a von Neumann probe. The concept, proposed by mathematician John von Neumann, describes a machine capable of replicating itself using planetary resources and sending those replicas to other worlds.

Optimus would likely only be able to achieve this potential if it manages to achieve Artificial General Intelligence.

Other leaders in the AI sector have also expressed strong expectations about AGI’s potential. Demis Hassabis, CEO of Google DeepMind, recently spoke about the technology at the India AI Impact Summit 2026, as noted in a Benzinga report.

“It’s going to be something like ten times the impact of the Industrial Revolution, but happening at ten times the speed,” Hassabis said.

Elon Musk’s recent comments about Tesla producing a product with AGI could hint at further collaboration among his companies. So far, Tesla is actively pursuing autonomous driving, but it is xAI that is pursuing AGI with its Grok program.

Considering that Elon Musk mentioned a Tesla humanoid product with AGI, it appears that an Optimus robot running xAI’s AI models could become a reality.

xAI recently merged with SpaceX, though reports suggest that Elon Musk is also considering an even bigger merger of all his companies, including Tesla.

News

Tesla influencers argue over company’s polarizing Full Self-Driving transfer decision

Tesla’s decision to tighten its Full Self-Driving (FSD) transfer promotion has ignited fierce debate among owners and enthusiasts.

The company quietly updated its terms in late February 2026, changing the eligibility from “order by March 31, 2026” to “take delivery by March 31, 2026.”

What began as a flexible incentive to boost sales, allowing buyers to transfer their paid FSD (Supervised) to a new vehicle, now excludes many, particularly Cybertruck owners facing delivery delays into summer or later.

Tesla maintains it will honor transfers for orders with initial delivery windows before the deadline and offers full deposit refunds otherwise, citing longstanding fine print that the program is “subject to change at any time.”

The reversal has polarized the Tesla community, with accusations of a “bait-and-switch” clashing against defenses of corporate pragmatism. Many owners who placed orders under the original wording feel betrayed, especially as production backlogs and the new unsupervised FSD rollout complicate timelines.

However, Tesla has allowed them to cancel their orders and receive a refund.

Critics of the decision argue that the change disadvantages loyal customers who helped fund FSD development, calling it poor communication and a revenue grab as Tesla pivots toward subscriptions.

Popular influencers have amplified the divide. Whole Mars Catalog struck a measured but firm tone, acknowledging the original “order by” language but emphasizing Tesla’s right to adjust terms. He has continued to defend Tesla on this particular issue:

Sad to see so many fans trashing Tesla with such extreme language.

LIARS!!! PATHETIC!!! And if you aren’t as furious and angry as they are they are you’re “worshipping” and saying “they can do no wrong”.

Let’s get real here. They’re not liars. They offered FSD transfer to us… https://t.co/3Ay7vGaVR6

— Whole Mars Catalog (@wholemars) March 3, 2026

He criticized the extreme backlash, using terms such as “dramatization” and “spoiled kids,” and noted that the unsupervised FSD era and broader sales challenges make blanket transfers financially risky. Whole Mars advocated for polite outreach to CEO Elon Musk over the issue.

Rather than “calling them out”, I would simply say “Hey Elon, really hoped to be able to do FSD transfer on my cybertruck but the terms changed. Would really appreciate if Tesla could extend this to everyone who ordered before the terms changes”

that would probably work

— Whole Mars Catalog (@wholemars) March 3, 2026

In a contrasting perspective, Dirty TesLA voiced sharper frustration, posting that blocking transfers feels “crazy” and distancing himself from “people that want to worship a corporation and say they can do no wrong.” His stance resonated with owners who view the policy flip as disrespectful to early adopters.

Popular Tesla influencer Sawyer Merritt captured the frustration felt by thousands. In a widely shared thread viewed over 700,000 times, Merritt detailed how pre-change Cybertruck orders now risk losing FSD eligibility unless their initial delivery window falls before March 31.

It’s not a contradiction, it’s a change in policy that Tesla just made an hour ago. I am trying to check if the change is retroactive to all existing orders, including Cybertruck AWD orders, because if it is, that sucks big time.

— Sawyer Merritt (@SawyerMerritt) February 28, 2026

The controversy underscores deeper tensions between Tesla’s need for revenue discipline and owners’ expectations of goodwill. As FSD evolves toward unsupervised capability, the community remains split: some see the change as necessary business, others as a broken promise. Whether Tesla reconsiders under pressure or holds firm remains to be seen, but the company does not appear to be planning to budge.