News

Tesla’s camera-based driver monitoring system exists; pretending it doesn’t makes roads less safe

Tesla’s FSD Beta program has begun its expansion to more users. And while the system is only being distributed today to drivers with a perfect Safety Score, the advanced driver-assist system is expected to be released to users with a rating of 99 and below in the near future. True to form, with the expansion of FSD Beta also came the predictable wave of complaints and pearl-clutching from critics, some of whom still refuse to acknowledge that Tesla is now utilizing its vehicles’ in-cabin camera to bolster its driver-monitoring systems.

Just recently, the NHTSA sent a letter to Tesla asking the company to explain why it rolled out some improvements to Autopilot without issuing a safety recall. According to the NHTSA, Tesla should have filed a recall notice if the company found a “safety defect” in its vehicles. What the NHTSA missed was that the Autopilot update, which enabled the company’s vehicles to slow down and alert their drivers when an emergency vehicle is detected, was done as a proactive measure, not as a response to a defect.

Consumer Reports Weighs In

Weighing in on the issue, Consumer Reports argued that ultimately, over-the-air software updates do not really address the main weakness of Teslas, which is driver monitoring. The magazine admitted that the object detection and response of Tesla’s driver-assist system is better than that of comparable systems, but Kelly Funkhouser, head of connected and automated vehicle testing for Consumer Reports, argued that this is the very reason the magazine has safety concerns about Tesla’s cars.

“In our tests, Tesla continues to perform well at object detection and response compared to other vehicles. It’s actually because the driver assistance system performs so well that we are concerned about overreliance on it. The most important change Tesla needs to make is to add safeguards—such as an effective direct driver monitoring system—to ensure the driver is aware of their surroundings and able to take over in these types of scenarios,” Funkhouser said.

Jake Fisher, senior director of Consumer Reports’ Auto Test Center, also shared his own take on the issue, particularly around some Autopilot crashes involving stationary emergency vehicles on the side of the road. “CR’s position is that crashes like these can be avoided if there is an effective driver monitoring system, and that’s the underlying problem here,” Fisher said, adding that over-the-air software updates are typically not sent to address defects.

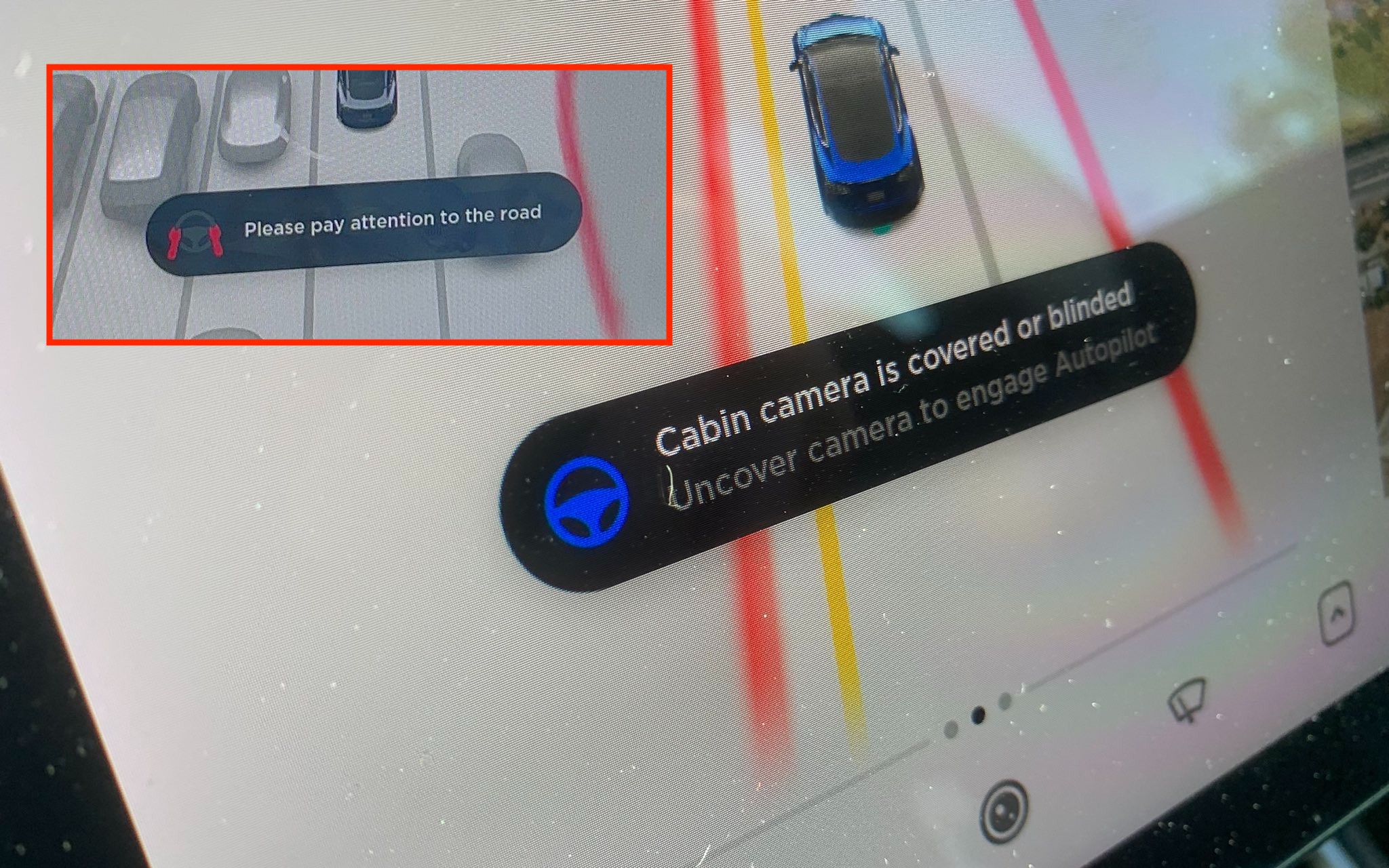

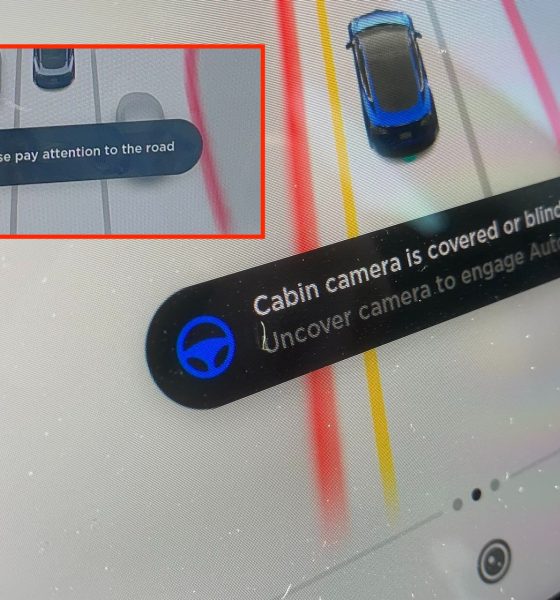

Tesla’s camera-based DMS

Funkhouser and Fisher’s reference to direct driver monitoring systems is interesting because the exact feature has been steadily rolling out to Tesla’s vehicles over the past months. It is quite strange that Consumer Reports seems unaware of this, considering that the magazine has Teslas in its fleet. Tesla, after all, has been rolling out its camera-based driver monitoring system to its fleet since late May 2021. The camera-based system was rolled out to radar-equipped vehicles in the previous quarter.

Tesla’s Release Notes for its camera-based driver monitoring function describe how the function works. “The cabin camera above your rearview mirror can now detect and alert driver inattentiveness while Autopilot is engaged. Camera data does not leave the car itself, which means the system cannot save or transmit information unless data sharing is enabled,” Tesla noted in its Release Notes.

What is interesting is that Consumer Reports’ Jake Fisher was made aware of the function when it launched last May. In a tweet, Fisher even noted that the camera-based system was not “just about preventing abuse;” it also “has the potential to save lives by preventing distraction.” This shows that Consumer Reports, or at least the head of its Auto Test Center, has been fully aware that Tesla’s in-cabin cameras are now steadily being used for driver monitoring purposes. This makes his recent comments about Tesla’s lack of driver monitoring quite strange.

Legacy or Bust?

That being said, Consumer Reports appears to have a prepared narrative once it acknowledges the existence of Tesla’s camera-based driver-monitoring system. Back in March, the magazine posted an article criticizing Tesla for its in-cabin cameras, titled “Tesla’s In-Car Cameras Raise Privacy Concerns.” In the article, the magazine noted that the EV maker could simply be using its in-cabin cameras for its own benefit.

“We have already seen Tesla blaming the driver for not paying attention immediately after news reports of a crash while a driver is using Autopilot. Now, Tesla can use video footage to prove that a driver is distracted rather than addressing the reasons why the driver wasn’t paying attention in the first place,” Funkhouser said.

Considering that Consumer Reports seems to be critical of Tesla’s use (or non-use for that matter) of its vehicles’ in-cabin cameras, it appears that the magazine is arguing that the only effective and safe driver monitoring systems are those utilized by veteran automakers like General Motors for its Super Cruise system. However, even the advanced eye-tracking technology used by GM for Super Cruise, which Consumer Reports overtly praises, has been proven to be susceptible to driver abuse.

This was proven by Car and Driver, when the motoring publication fooled Super Cruise into operating without a driver using a pair of gag glasses with eyes painted on them. One could easily criticize Car and Driver for publicly showcasing a vulnerability in Super Cruise’s driver monitoring systems, but one has to remember that Consumer Reports also published an extensive guide on how to fool Tesla’s Autopilot into operating without a driver using a series of tricks and a defeat device.

Salivating for the first FSD Beta accident

What is quite unfortunate amidst the criticism surrounding the expansion of FSD Beta is the fact that skeptics seem to be salivating for the first accident involving the advanced driver-assist system. Fortunately, Tesla seems to be aware of this, which may be the reason why the Beta is only being released to the safest drivers in the fleet. Tesla does plan on releasing the system to drivers with lower safety scores, but it would not be a surprise if the company ends up adopting an even more cautious approach when it does so.

That being said, incidents on the road are inevitable, and one can only hope that when something does happen, it would not be too easy for an organization such as Consumer Reports to run away with a narrative that echoes falsehoods while ignoring what its own executives have recognized publicly, such as the potential benefits of Tesla’s camera-based driver monitoring system. Tesla’s FSD suite and Autopilot are designed as safety features, after all, and so far, they are already making the company’s fleet of vehicles less susceptible to accidents on the road. Over time, and as more people participate in the FSD Beta program, Autopilot and Full Self-Driving should only get safer.

Tesla is not above criticism, of course. There are several aspects of the company that deserve to be called out. Service and quality control, as well as the treatment of longtime Tesla customers who purchased FSD cars with MCU1 units, are but a few of them. However, it’s difficult to defend the notion that FSD and Autopilot are making the roads less safe. Autopilot and FSD have already saved numerous lives, and they have the potential to save countless more once they are fully developed. So why block their development and rollout?


Elon Musk

SpaceX secures FAA approval for 44 annual Starship launches in Florida

The FAA’s environmental review covers up to 44 launches annually, along with 44 Super Heavy booster landings and 44 upper-stage landings.

SpaceX has received environmental approval from the Federal Aviation Administration (FAA) to conduct up to 44 Starship-Super Heavy launches per year from Kennedy Space Center Launch Complex 39A in Florida.

The decision allows the company to proceed with plans tied to its next-generation launch system and future satellite deployments.

The FAA’s environmental review covers up to 44 launches annually, along with 44 Super Heavy booster landings and 44 upper-stage landings. The approval concludes the agency’s public comment period and outlines required mitigation measures related to noise, emissions, wildlife, and airspace management.

Construction of Starship infrastructure at Launch Complex 39A is nearing completion. The site, previously used for Apollo and space shuttle missions, is transitioning to support Starship operations, as noted in a Florida Today report.

If fully deployed across Kennedy Space Center and nearby Cape Canaveral Space Force Station, Starship activity on the Space Coast could exceed 120 launches annually, excluding tests. Separately, the U.S. Air Force has authorized repurposing Space Launch Complex 37 for potential additional Starship activity, pending further FAA airspace analysis.

The approval supports SpaceX’s long-term strategy, which includes deploying a large constellation of satellites intended to power space-based artificial intelligence data infrastructure. The company has previously indicated that expanded Starship capacity will be central to that effort.

The FAA review identified likely impacts from increased noise, nitrogen oxide emissions, and temporary airspace closures. Commercial flights may experience periodic delays during launch windows. The agency, however, determined these effects would be intermittent and manageable through scheduling, public notification, and worker safety protocols.

Wildlife protections are required under the approval, Florida Today noted. These include lighting controls to protect sea turtles, seasonal monitoring of scrub jays and beach mice, and restrictions on offshore landings to avoid coral reefs and right whale critical habitat. Recovery vessels must also carry trained observers to prevent collisions with protected marine species.

Elon Musk

Texas township wants The Boring Company to build it a Loop system

The township’s board unanimously approved an application to The Boring Company’s “Tunnel Vision Challenge.”

The Woodlands Township, Texas, has formally entered The Boring Company’s tunneling sweepstakes.

The township’s board unanimously approved an application to The Boring Company’s “Tunnel Vision Challenge,” which offers up to one mile of tunnel construction at no cost to a selected community.

The Woodlands’ proposal, dubbed “The Current,” features two parallel 12-foot-diameter tunnels beneath the Town Center corridor near The Waterway. Teslas would shuttle passengers between Waterway Square, Cynthia Woods Mitchell Pavilion, Town Green Park and nearby hotels during concerts and large-scale events, as noted in a Chron report.

Township officials framed the tunnel as a solution for the township’s traffic congestion issues. The Pavilion alone hosts more than 60 shows each year and can accommodate crowds of up to 16,500, often straining Lake Robbins Drive and surrounding intersections.

“We know we have traffic impacts and pedestrian movement challenges, especially in the Town Center area,” Chris Nunes, chief operating officer of The Woodlands Township, stated during the meeting.

“The Current” mirrors the Loop system operating beneath the Las Vegas Convention Center, where Tesla vehicles transport passengers through underground tunnels between venues and resorts.

The Boring Company issued its request for proposals (RFP) in mid-January, inviting cities and districts to pitch local uses for its tunneling technology. The Woodlands must submit its application by Feb. 23, though no timeline has been provided for when a winning community will be announced.

Nunes confirmed that the board has authorized the submission of “The Current” proposal, though he emphasized that the project is still in its preliminary stages.

“The Woodlands Township Board of Directors has authorized staff to submit an application to The Boring Company, which has issued an RFP for communities interested in leveraging their technology to address community challenges,” he said in a statement.

“The Board believes that an underground tunnel would provide a safe and efficient means to transport people to and from various high-use community amenities in our Town Center.”

News

Tesla Model Y wins 2026 Drive Car of the Year award in Australia

The Model Y is already Australia’s best-selling EV in 2025 and the tenth best-selling vehicle overall.

The Tesla Model Y has been named 2026 Drive Car of the Year overall winner, taking the top honor after being judged as the vehicle that “moves the game forward the most for Australian new car buyers.”

The Model Y is already Australia’s best-selling EV in 2025 and the tenth best-selling vehicle overall, but the vehicle’s Juniper update strengthened its case with new ownership benefits and expanded software capability.

Drive’s overall award compares category winners and looks at which model most significantly advances the local new car market. In 2026, judges pointed to the Model Y’s five-year warranty and the availability of Full Self-Driving (Supervised) as a monthly subscription as key differentiators.

Priced from AU$58,900 before on-road costs, the all-electric crossover SUV offers a lot of value compared to similarly sized petrol and hybrid rivals. The ability to access Tesla’s Supercharger network across Australia also reduces friction for buyers moving to EV ownership.

Owners can add FSD (Supervised) for AU$149 per month. While it still requires driver oversight, the system expands the vehicle’s advanced driver-assistance capabilities and reflects Tesla’s software-first approach.

“The default choice for a reason. The Tesla Model Y makes the transition to electric both effortless and rewarding,” Drive wrote.

The 2025 Model Y facelift also sharpened the vehicle’s exterior, highlighted by a distinctive rear light bar that gives the crossover SUV a more modern road presence.

Drive described the Model Y as a benchmark for combining practicality, efficiency and technology at an accessible price point. With eligibility for federal Fringe Benefit Tax exemptions through novated leasing, its value proposition has improved for numerous buyers.

For 2026, the Model Y’s combination of range efficiency, charging access and software capability proved decisive. Ultimately, the award all but cements the Model Y’s position as one of the most influential vehicles in Australia’s evolving new-car market today.