News

Mars travelers could get ‘Star Trek’ Tricorder-like features from smartphone biotech: study

Plans to take humans to the Moon and Mars come with numerous challenges, and the health of space travelers is no exception. One way to prevent or mitigate ill effects is to detect relevant changes in the body and its surroundings, which is exactly what biosensor technology is designed to do on Earth. However, the strict size and weight limits on technology used in astronauts’ cramped habitats have impeded its development to date.

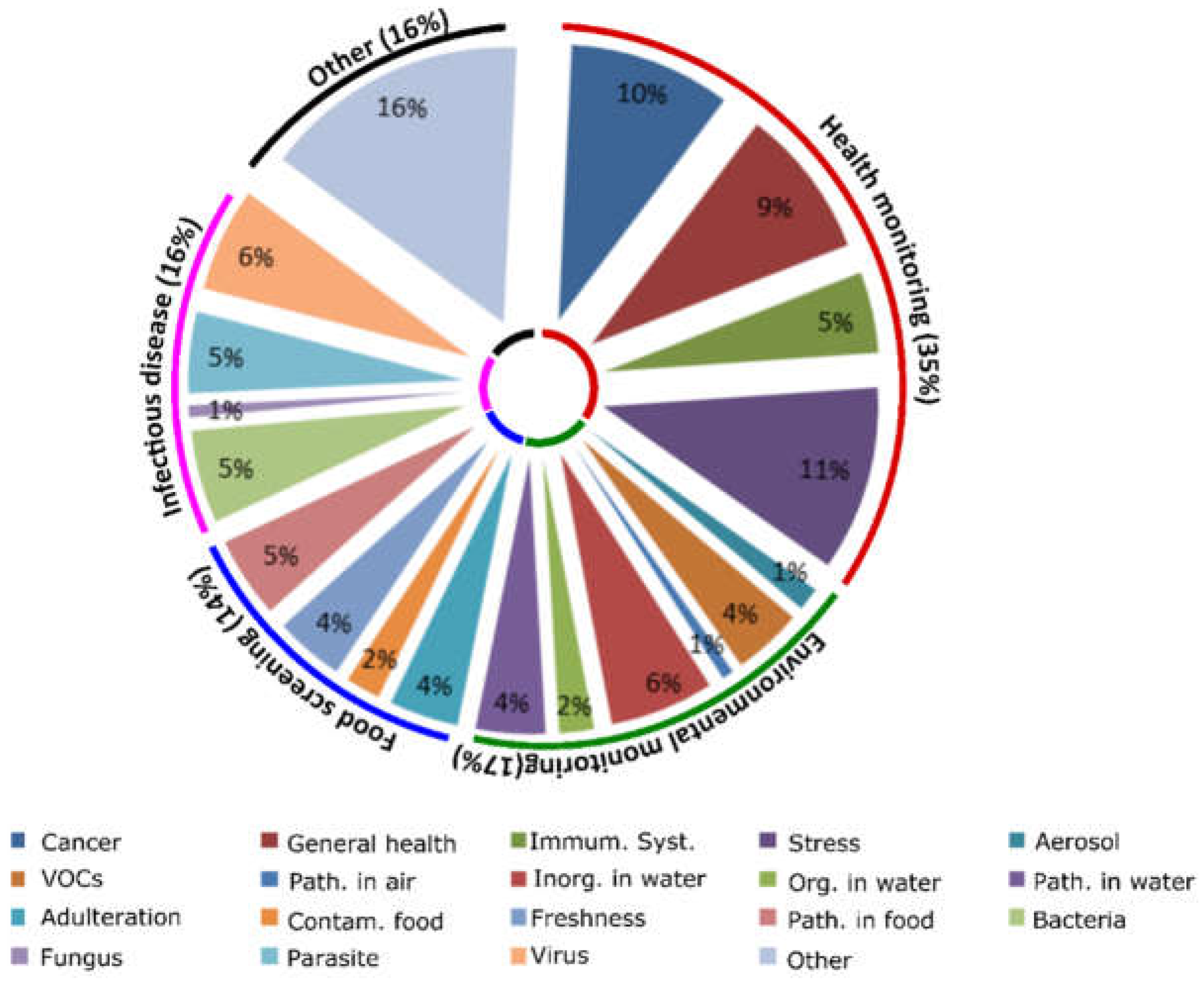

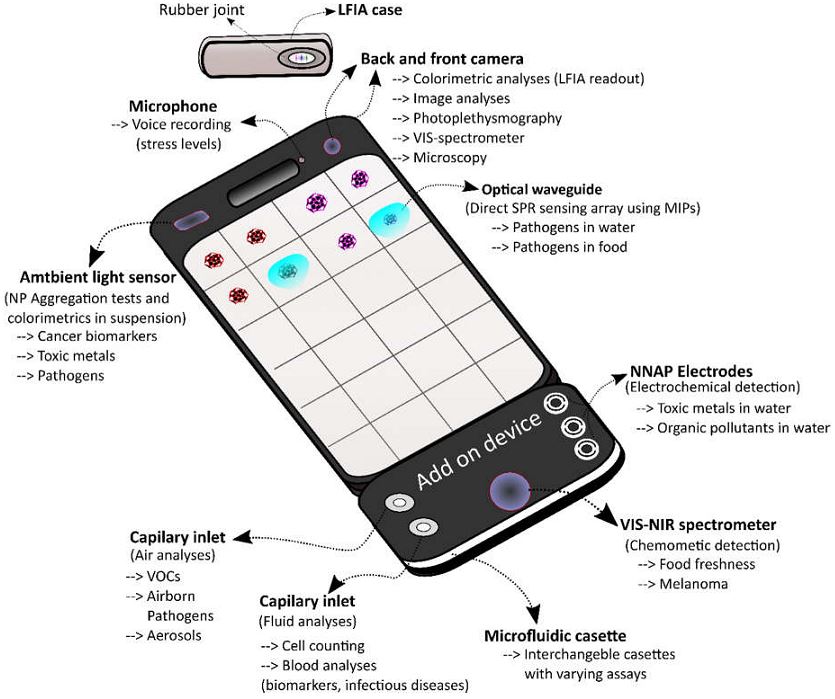

A recent study of existing smartphone-based biosensors by scientists from Queen’s University Belfast (QUB) in the UK identified several candidates, currently in use or in development, that could also be used in space or in a Martian environment. Combined, these technologies could provide functionality reminiscent of the “Tricorder” devices used for medical assessments in the Star Trek television and movie franchises, providing on-site information about the health of human space travelers and the biological risks present in their habitats.

Biosensors focus on studying biomarkers, i.e., measurable indicators of the body’s response to environmental conditions. For example, changes in blood composition, elevated levels of certain molecules in urine, and increases or decreases in heart rate are all considered biomarkers. Health and fitness apps that track general health biomarkers have become common in the marketplace, with brands like Fitbit leading the charge in overall wellness sensing by using wearable biosensors to track sleep patterns, heart rate, and activity levels. Astronauts and other future space travelers could likely use this kind of tech for basic health monitoring, but there are other challenges that need to be addressed in a compact way.

NASA has detailed the projected human health needs during spaceflight on its Human Research Program website, specifically in its web-based Human Research Roadmap (HRR), where the agency publishes its scientific data for public review. The roadmap identifies several hazards of human spaceflight, such as environmental and mental health concerns, and the QUB scientists used that information to organize their study. Their research produced a 20-page document reviewing the inner workings of the relevant devices found in their searches, complete with tables summarizing each device’s methods and suitability for use in space missions. Here are some of the highlights.

Risks in the Spacecraft Environment

During spaceflight, the environment is a closed system, which has a two-fold effect: first, the immune system has been shown to lose functionality during long-duration missions, specifically through lower white blood cell counts; and second, the weightless and non-competitive environment makes it easier for microbes to transfer between humans and increases their growth rates. In one space shuttle-era study, the number of microbial cells in the vehicle able to reproduce increased by 300% within 12 days in orbit. Also, certain herpes viruses, such as those responsible for chickenpox and mononucleosis, have been reactivated under microgravity, although the astronauts typically didn’t show symptoms despite active viral shedding (meaning the virus had surfaced and was able to spread).

Frequent monitoring of the spacecraft environment and the crew’s biomarkers is the best way to mitigate these challenges. NASA addresses these issues to an extent with traditional instruments and equipment for collecting data, although the data often cannot be processed until the experiments are returned to Earth. There has also been an attempt to rapidly quantify microorganisms aboard the International Space Station (ISS) with a handheld device called the Lab-on-a-Chip Application Development-Portable Test System (LOCAD-PTS). However, this device cannot yet distinguish between microbial species, meaning it can’t tell pathogens apart from harmless species. The QUB study found several existing smartphone-based technologies, generally developed for use in remote medical care facilities, that could achieve better identification results.

One of the devices described was a spectrometer (an instrument that identifies substances based on the frequencies of light they emit or absorb) that used the smartphone’s flashlight and camera to generate data at least as accurate as that of traditional instruments. Another was able to measure concentrations of recombinant bovine somatotropin (rBST), an artificial growth hormone injected into cows, in test samples, and other systems were able to accurately detect syphilis and HIV as well as the Zika, chikungunya, and dengue viruses. All of the devices used smartphone attachments, some of them with 3D-printed parts. Of course, the pathogens detected are not likely to be common in a closed space habitat, but the underlying technology could be modified to meet specific detection needs.

The Stress of Spaceflight

A group of people crammed together in a small space for long periods will be affected by the isolation and confinement, no matter how carefully they were selected or trained. Declines in mood, cognition, morale, or interpersonal interaction can impair team functioning or develop into sleep disorders. On Earth, these stress responses may seem common, or perhaps an expected part of being human, but missions in deep space and on Mars will be demanding and will need fully alert, well-communicating teams to succeed. NASA already uses devices to monitor these risks and addresses the stress factor by managing habitat lighting, crew movement, and sleep amounts, and by recommending that astronauts keep journals to vent as needed. However, an all-encompassing tool may be needed for longer-duration space travel.

As the QUB study recognized, several “mindfulness” and self-help apps already on the market could be used to address the stress factor in future astronauts when combined with general health monitors. For example, the popular Fitbit app and similar products collect data on sleep patterns, activity levels, and heart rates, which could potentially be linked to other mental health apps that use algorithms to recommend self-help programs. The more recent “BeWell” app monitors physical activity, sleep patterns, and social interactions to analyze stress levels and recommend self-help treatments. Other apps, such as “StressSense” and “MoodSense”, use voice patterns and general phone communication data to assess stress levels.

Advances in smartphone technology such as high-resolution cameras, microphones, fast processors, wireless connectivity, and the ability to attach external devices provide tools for an expanding number of “portable lab” functions. Unfortunately, despite what these biosensors could offer for human spaceflight, some of the devices have notable limitations that would need to be overcome. In particular, any device that uses antibodies or enzymes in its testing would risk the stability of those biological components due to radiation from galactic cosmic rays and solar particle events. This radiation could also damage biosensor electronics. New types of shielding may be necessary to ensure the devices function beyond Earth and Earth orbit; alternatively, synthetic biology could provide testing elements genetically engineered to withstand the space and Martian environments.

Smartphone-based solutions for space travelers have been garnering more attention over the years as tech-centric societies have moved in the “app” direction overall. NASA itself has hosted a “Space Apps Challenge” for the last 8 years, drawing thousands of participants who submit programs that interpret and visualize data to improve understanding of designated space and science topics. Some of the challenges could be directly relevant to the biosensor field. For example, in the 2018 event, contestants were asked to develop a sensor that humans on Mars could use to observe and measure variables in their environment; in 2017, contestants created visualizations of potential radiation exposure during polar or near-polar flights.

While the QUB study implied that a combination of existing biosensor technologies could be equivalent to a Tricorder, the direct development of such a device has been the subject of its own dedicated challenge. In 2012, the Qualcomm Tricorder XPRIZE competition was launched, asking competitors to develop a user-friendly device that could accurately diagnose 13 health conditions and capture 5 real-time vital signs. The prize, awarded in 2017, went to a Pennsylvania-based family team called Final Frontier Medical Devices, now Basil Leaf Technologies, for its DxtER device. According to the company’s website, the sensors inside DxtER can be used independently, and one of them is in a Phase 1 clinical trial. The second-place team used a smartphone app to connect its health testing modules and generate a diagnosis from the data acquired from the user.

Work continues on the technology humans will need to safely explore regions beyond Earth orbit. Space is hard, but it was hard before we went there the first time, and it was hard before we put humans on the Moon. There may be plenty of challenges to overcome, but as the Queen’s University Belfast study demonstrates, we may already be solving them. It’s just a matter of realizing it and expanding on it.


Tesla Full Self-Driving v14.2.2.5 might be the most confusing release ever

With each Full Self-Driving release, I am realistic. I know some things are going to get better, and I know some things will regress slightly. However, these instances of improvements are relatively mild, as are the regressions. Yet, this version has shown me that it contains extremes of both.

Tesla Full Self-Driving v14.2.2.5 hit my car back on Valentine’s Day, February 14, and since I’ve had it, it has become, in my opinion, the most confusing release I’ve ever had.


It has been about three weeks of driving on v14.2.2.5; I’ve used it for nearly every mile traveled since it hit my car. I’ve taken short trips of 10 minutes or less, I’ve taken medium trips of an hour or less, and I’ve taken longer trips that are over 100 miles per leg and are over two hours of driving time one way.

These are my thoughts on it thus far:

Speed Profiles Are a Mixed Bag

Speed Profiles are something Tesla seems to tinker with quite frequently, and with each new version the profiles tend to behave drastically differently than they did in the previous one.

I do a vast majority of my FSD travel using Standard and Hurry modes, although in bad weather, I will scale it back to Chill, and when it’s a congested city on a weekend or during rush hour, I’ll throw it into Mad Max so it takes what it needs.

Early on, Speed Profiles really felt great. This is one of those really subjective parts of the FSD where someone might think one mode travels too quickly, whereas another person might see the identical performance as too slow or just right.

Personally, I would like to see more consistency from release to release, but overall, things are pretty good. Unlike with previous releases, I have no real complaints on my end.

In a past release, Mad Max traveled under the speed limit quite frequently, and I only had that experience because Hurry was acting the same way. I’ve had no instances of that with v14.2.2.5.

Strange Turn Signal Behavior

This is the first Full Self-Driving version where I’ve had so many weird things happen with the turn signals.

Two things come to mind: using a turn signal on a sharp turn, and ignoring the navigation while putting on the wrong turn signal. I’ve encountered both on v14.2.2.5.

On my way to the Supercharger, I take a road that has one semi-sharp right-hand turn with a driveway entrance right at the beginning of the turn.

Only recently, with the introduction of v14.2.2.5, have I had FSD put on the right turn signal when going around this turn. It’s obviously a minor issue, but it still happens, and it’s not standard practice:

How can we get Full Self-Driving to stop these turn signals?

There’s no need to use one here; the straight path is a driveway, not a public road. The right turn signal here is unnecessary pic.twitter.com/7uLDHnqCfv

— TESLARATI (@Teslarati) February 28, 2026

When sharing this on X, I had Tesla fans (the ones who refuse to acknowledge that the company can make mistakes) tell me that it’s a “valid” behavior that would be taught to anyone who has been “professionally trained” to drive.

Apparently, if you complain about this turn signal, you are also claiming you know more than Tesla engineers…okay.

Nobody in their right mind has ever gone around a sharp turn when driving their car and put on a signal when continuing on the same road. You would put a left turn signal on to indicate you were turning into that driveway if that’s what your intention was.

Like I said, it’s a totally minor issue. However, it’s not needed, nor is it normal. If I were in the car with someone who was taking a simple turn on a road they were traveling, and they signaled because the turn was sharp, I’d be scratching my head.

I’ve also had three separate instances of the car completely ignoring the navigation and putting on a signal that is opposite to what the routing says. Really quite strange.

Parking Performance is Still Underwhelming

Parking has been a complaint of mine with FSD for a long time, so much so that it is pretty rare that I allow the vehicle to park itself. More often than not, it is because I want to pick a spot that is relatively isolated.

However, in the times I allow it to pull into a spot, it still does some pretty head-scratching things.

Recently, it tried to back into a spot that was ~60% covered in plowed snow. The snow was piled about six feet high in a Target parking lot.

A few days later, it tried backing into a spot where someone failed the universal litmus test of returning their shopping cart. Both choices were baffling and required me to manually move the car to a different portion of the lot.

I used Autopark on both occasions, and it did a great job of getting into the spot. I’ve noticed that parking performance is much better when I manually choose the spot than when the car handles the entire process, meaning both choosing the spot and parking in it.

It’s Doing Things (For Me) It’s Never Done Before

Two things that FSD has never done before, at least for me, are slowing down in School Zones and avoiding deer. The first is something I usually take over manually, and the second I surprisingly have not had to deal with yet.

Yesterday, my Tesla slowed down in a School Zone for the first time. It traveled at 20 MPH rather than the 15 MPH the sign indicated, matching the speed of the other cars in the School Zone. This was impressive.

I would like to see this more consistently, and I think School Zones should be one of those areas where, no matter what, FSD travels only at the posted speed limit.

Last night, FSD v14.2.2.5 recognized a deer in a roadside field and slowed down for it:

🚨 Cruising home on a rainy, foggy evening and my Tesla on Full Self-Driving begins to slow down suddenly

FSD just wanted Mr. Deer to make it home to his deer family ❤️ pic.twitter.com/cAeqVDgXo5

— TESLARATI (@Teslarati) March 4, 2026

Navigation Still SUCKS

Navigation will be a complaint until Tesla proves it can fix it. For now, it’s just terrible.

It still has not figured out how to leave my neighborhood. I give it the opportunity to prove me wrong each time I leave my house, and it just can’t do it.

It always tries to go out of the primary entrance/exit of the neighborhood when the route needs to take me left, even though that exit is a right turn only. I always leave a voice prompt for Tesla about it.

It still picks incredibly baffling routes for simple navigation. It’s the one thing I still really want Tesla to fix.


Tesla gets tip of the hat from major Wall Street firm on self-driving prowess


Tesla received a tip of the hat from major Wall Street firm Bank of America on Wednesday, as it reinitiated coverage on Tesla shares with a bullish stance that comes with a ‘Buy’ rating and a $460 price target.

In a new note that marks a sharp reversal from its neutral position earlier in 2025, the bank declared Tesla’s Full Self-Driving (FSD) technology the “leading consumer autonomy solution.”

Analysts highlighted Tesla’s camera-only architecture, known as Tesla Vision, as a strategic masterstroke. While technically more challenging than the multi-sensor setups favored by rivals, the vision-based approach is dramatically cheaper to produce and maintain.

This cost edge, combined with Tesla’s rapidly expanding real-world data engine, positions the company to scale robotaxis far more profitably than competitors, BofA argues in the new note:

“Tesla is at the forefront of autonomous driving, supported by a camera-only approach that is technically harder but much cheaper than the multi-sensor systems widely used in the industry. This strategy should allow Tesla to scale more profitably compared to Robotaxi competitors, helped by a growing data engine from its existing fleet.”

The bank now attributes roughly 52% of Tesla’s total valuation to its Robotaxi ambitions. It also flagged meaningful upside from the Optimus humanoid robot program and the fast-growing energy storage business, suggesting the auto segment’s recent headwinds, including expired incentives, are being eclipsed by these higher-margin opportunities.

Tesla’s own data underscores exactly why Wall Street is waking up to FSD’s potential. According to Tesla’s official safety reporting page, the FSD Supervised fleet has now surpassed 8.4 billion cumulative miles driven.

Tesla FSD (Supervised) fleet passes 8.4 billion cumulative miles

That total ballooned from just 6 million miles in 2021 to 80 million in 2022, 670 million in 2023, 2.25 billion in 2024, and a staggering 4.25 billion in 2025 alone. In the first 50 days of 2026, owners added another 1 billion miles — averaging more than 20 million miles per day.
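The per-day figure is just the added mileage divided by the number of days. A minimal sketch using the rounded numbers reported above; since “1 billion” is itself rounded, the quotient lands at exactly 20 million, and the “more than” presumably reflects an unrounded total somewhat above 1 billion:

```python
# Rounded figures as reported; variable names are illustrative only.
miles_added = 1_000_000_000  # "another 1 billion miles"
days = 50                    # first 50 days of 2026

# Average miles driven on FSD (Supervised) per day over the period.
per_day = miles_added / days
print(f"{per_day:,.0f} miles per day")
```

With these inputs the script prints `20,000,000 miles per day`.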

This avalanche of real-world, camera-captured footage, much of it on complex city streets, gives Tesla an unmatched training dataset. Every mile feeds its neural networks, accelerating improvement cycles that lidar-dependent rivals simply cannot match at scale.

Tesla owners themselves will tell you the suite gets better with every release, bringing new features and improvements to its self-driving project.

The $460 target implies roughly 15 percent upside from recent trading levels around $400. While regulatory and safety hurdles remain, BofA’s endorsement signals growing institutional conviction that Tesla’s data advantage is not hype; it’s a tangible moat already delivering billions of miles of proof.
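The upside figure is the gap between the price target and the reference price, divided by the reference price. A minimal sketch, assuming the roughly $400 trading level cited above as the reference (actual prices move daily, so the real upside at any moment will differ); the function name `implied_upside` is hypothetical:

```python
def implied_upside(target: float, price: float) -> float:
    """Percentage gain if the share price rose from `price` to `target`."""
    return (target - price) / price * 100

# Figures as reported: BofA's $460 target vs. a ~$400 trading level.
upside = implied_upside(460.0, 400.0)
print(f"{upside:.1f}% upside")
```

With these inputs it prints `15.0% upside`, matching the roughly 15 percent in the note.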


Tesla to discuss expansion of Samsung AI6 production plans: report

Tesla has reportedly requested an additional 24,000 wafers per month, which would bring total production capacity to around 40,000 wafers per month if finalized.

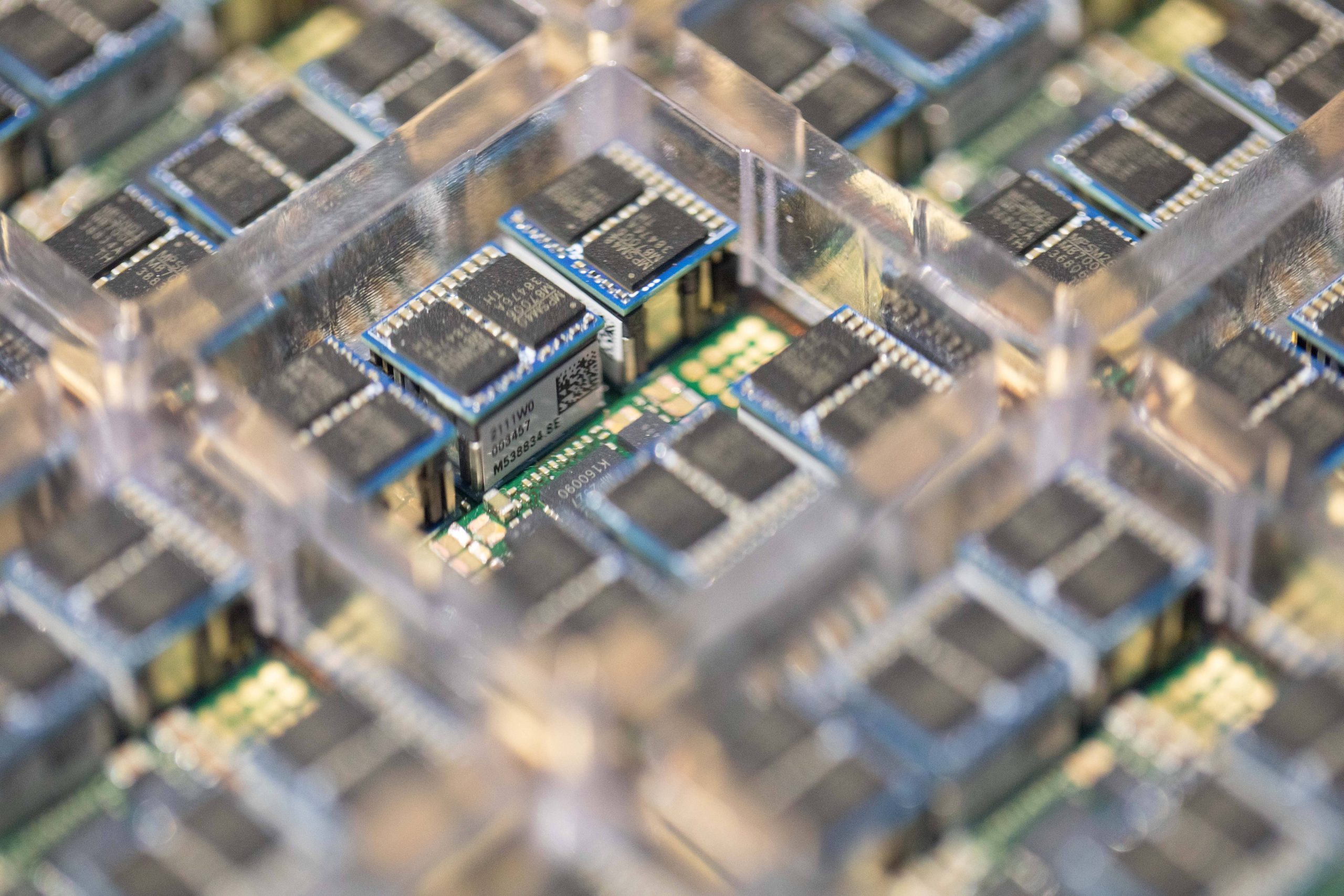

Tesla is reportedly discussing an expansion of its next-generation AI chip supply deal with Samsung Electronics.

According to a report from Korean industry outlet The Elec, Tesla purchasing executives are scheduled to meet Samsung officials this week to negotiate additional production volume for the company’s upcoming AI6 chip.

Industry sources cited in the report stated that Tesla is pushing to increase the production volume of its AI6 chip, which will be manufactured using Samsung’s 2-nanometer process.

Tesla previously signed a long-term foundry agreement with Samsung covering AI6 production through December 31, 2033. The deal was reportedly valued at about 22.8 trillion won (roughly $16–17 billion).

Under the existing agreement, Tesla secured approximately 16,000 wafers per month from the facility. The company has reportedly requested an additional 24,000 wafers per month, which would bring total production capacity to around 40,000 wafers per month if finalized.
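The capacity figures are additive: the roughly 16,000 wafers per month secured under the existing agreement plus the 24,000 reportedly requested. A minimal sketch of that arithmetic using the reported (approximate) figures; the variable names are illustrative, and the multiple is simply derived from those two numbers:

```python
# Reported, approximate figures; all values are wafers per month.
existing_wafers_per_month = 16_000  # secured under the existing agreement
requested_increase = 24_000         # additional volume reportedly requested

total = existing_wafers_per_month + requested_increase
multiple = total / existing_wafers_per_month  # scale-up vs. current allocation

print(f"Total if finalized: {total:,} wafers per month")
print(f"Multiple of current allocation: {multiple:.1f}x")
```

This prints a total of 40,000 wafers per month, or 2.5 times the current allocation.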

Tesla purchasing executives are expected to discuss detailed supply terms during their visit to Samsung this week.

The AI6 chip is expected to support several Tesla technologies. Industry sources stated that the chip could be used for the company’s Full Self-Driving system, the Optimus humanoid robot, and Tesla’s internal AI data centers.

The report also indicated that AI6 clusters could replace the role previously planned for Tesla’s Dojo AI supercomputer. Instead of a single system, multiple AI6 chips would be combined into server-level clusters.

Tesla’s semiconductor collaboration with Samsung dates back several years. Samsung participated in the design of Tesla’s HW3 (AI3) chip and manufactured it using a 14-nanometer process. The HW4 chip currently used in Tesla vehicles was also produced by Samsung using a 5-nanometer node.

Tesla previously planned to split production of its AI5 chip between Samsung and TSMC. However, the company reportedly chose Samsung as the primary partner for the newer AI6 chip.