News

Tesla crash leads NTSB to begin probe of autonomous driving technology

The echoes of the Autopilot crash that killed Joshua Brown on May 7 are still reverberating. Not only is the National Highway Traffic Safety Administration (NHTSA) conducting an investigation, but now the National Transportation Safety Board (NTSB) is getting into the act. NHTSA chief Mark Rosekind and Transportation Secretary Anthony Foxx have expressed approval of autonomous driving technology. With more than 80% of the 34,000 highway deaths in the US every year attributed to human error, they recognize the power of new safety systems to reduce the carnage on America’s roadways.

The NTSB, on the other hand, has warned that such systems can lead to danger because they lull drivers into complacency behind the wheel. Missy Cummings, an engineering professor and human factors expert at Duke University, says humans tend to show “automation bias,” a trust that automated systems can handle all situations even when they work only 80% of the time.

According to Automotive News, the NTSB will send a team of five investigators to Florida to probe the death of Joshua Brown, whose Tesla Model S crashed into a tractor trailer while driving on Autopilot. “It’s worth taking a look and seeing what we can learn from that event, so that as that automation is more widely introduced we can do it in the safest way possible,” says Christopher O’Neil, an NTSB spokesman.

“It’s very significant,” says Clarence Ditlow, executive director of the Center for Auto Safety, an advocacy group in Washington, DC. “The NTSB only investigates crashes with broader implications.” He adds, “They’re not looking at just this crash. They’re looking at the broader aspects. Are these driverless vehicles safe? Are there enough regulations in place to ensure their safety?” Ditlow continues, “And one thing in this crash I’m certain they’re going to look at is using the American public as test drivers for beta systems in vehicles. That is simply unheard of in auto safety.”

That is the crux of the situation. Tesla obviously has the right to conduct a beta test of its Autopilot system if only Tesla drivers are involved. The question is whether it has the same right to do so on public roads with other drivers who are not part of the beta test and who are unaware that an experiment is taking place around them as they drive.

For its part, Tesla steadfastly maintains that “Autopilot is by far the most advanced driver-assistance system on the road, but it does not turn a Tesla into an autonomous vehicle and does not allow the driver to abdicate responsibility. Since the release of Autopilot, we’ve continuously educated customers on the use of the feature, reminding them that they’re responsible for remaining alert and present when using Autopilot and must be prepared to take control at all times.”

That’s all well and good, but are such pronouncements from the company enough to overcome the “automation bias” Professor Cummings refers to? Clearly, Ditlow thinks not. What the NTSB decides to do, if anything, after it completes its investigation could have a dramatic impact on self-driving technology both in the US and around the world. Regulators in other countries will place a lot of weight on what the Board decides.

From Tesla’s perspective, the question is whether the death of one person is reason enough to delay implementation of technology that could save 100,000 or more lives each year worldwide. The company is racing ahead with improvements to its Autopilot system. Just the other week, a Tesla Model S was spotted testing near the company’s Silicon Valley headquarters with a LIDAR unit mounted on its roof.

NTSB has a lot of clout when it comes to promulgating safety regulations. It will be hard pressed to fairly balance all of the competing interests involved in the aftermath of the Joshua Brown fatality.

Elon Musk

Tesla begins expanding Robotaxi access: here’s how you can ride

You can ride in a Tesla Robotaxi by heading to its website and filling out the interest form. The company is hand-picking some of those who have done this to gain access to the fleet.

Tesla has begun expanding Robotaxi access beyond the initial small group it offered rides to in late June, as it launched the driverless platform in Austin, Texas.

The small group of people enjoying the Robotaxi ride-hailing service is now growing, as several Austin-area residents are receiving invitations to test out the platform for themselves.

The first rides took place on June 22, and despite a small number of manageable and expected hiccups, the Tesla Robotaxi launch was largely successful.


Now, Tesla is expanding the availability of the ride-hailing service to those living in Austin and its surrounding areas, hoping to gather more data and provide access to those who will use it on a daily basis.

Many of the people Tesla initially invited, including us, are not local to the Austin area.

There are a handful of people who are, but Tesla was evidently looking for more stable data collection, as many of those early invitees headed back to where they live.

The first handful of invitations in the second round of the Robotaxi platform’s Early Access Program are heading out to Austin locals:

I just got a @robotaxi invite! Super excited to go try the service out! pic.twitter.com/n9mN35KKFU

— Ethan McKanna (@ethanmckanna) July 1, 2025

Tesla likely saw an influx of data during the first week, as many traveled far and wide to say they were among the first to test the Robotaxi platform.

Now that the first week and a half of testing is over, Tesla is expanding invites to others. Many of those who have been chosen to gain access to the Robotaxi app and the ride-hailing service state that they simply filled out the interest form on the Robotaxi page of Tesla’s website.

That’s the easiest way to gain access, so be sure to fill out that form if you have any interest in riding in a Robotaxi.

Tesla will continue to utilize data accumulated from these rides to enable more progress, and eventually, it will lead to even more people being able to hail rides from the driverless platform.

With more success, Tesla will start to phase out some of the Safety Monitors and Supervisors it is using to ensure things run smoothly. CEO Elon Musk said Tesla could start increasing the ratio of Robotaxis to monitors within the next couple of months.

Elon Musk

Tesla analyst issues stern warning to investors: forget Trump-Musk feud

A Tesla analyst today said that investors should not lose sight of what is truly important in the grand scheme of being a shareholder, and that any near-term drama between CEO Elon Musk and U.S. President Donald Trump should not outshine the progress made by the company.

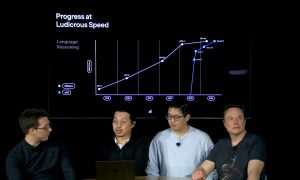

Gene Munster of Deepwater Asset Management said that Tesla’s progress in autonomy is a much larger influence and a significantly bigger part of the company’s story than any disagreement over political policies.

Munster appeared on CNBC’s “Closing Bell” yesterday to reiterate this point:

“One thing that is critical for Tesla investors to remember is that what’s going on with the business, with autonomy, the progress that they’re making, albeit early, is much bigger than any feud that is going to happen week-to-week between the President and Elon. So, I understand the reaction, but ultimately, I think that cooler heads will prevail. If they don’t, autonomy is still coming, one way or the other.”

BREAKING: GENE MUNSTER SAYS — $TSLA AUTONOMY IS “MUCH BIGGER” THAN ANY FEUD 👀

He says robotaxis are coming regardless ! pic.twitter.com/ytpPcwUTFy

— TheSonOfWalkley (@TheSonOfWalkley) July 2, 2025

This is a point that other analysts like Dan Ives of Wedbush and Cathie Wood of ARK Invest also made yesterday.

On two occasions over the past month, Musk and President Trump have gotten involved in a very public disagreement over the “Big Beautiful Bill,” which officially passed through the Senate yesterday and is making its way to the House of Representatives.

Musk is upset with the spending in the bill, while President Trump continues to reiterate that the Tesla CEO is only frustrated with the removal of an “EV mandate,” which does not exist federally, nor is it something Musk has expressed any frustration with.

In fact, Musk has said he supports ending federal subsidies for EVs, as long as gas and oil subsidies are also removed.

Nevertheless, Ives and Wood both said yesterday that they believe the political friction between Musk and President Trump will pass because both realize the world is a better place with them on the same team.

Munster’s perspective is that, even though Musk’s feud with President Trump could apply near-term pressure to the stock, the company’s progress in autonomy is an indication that, in the long term, Tesla is set up to succeed.

Tesla launched its Robotaxi platform in Austin on June 22 and is expanding access to more members of the public. Austin residents are now reporting that they have been invited to join the program.

Elon Musk

Tesla surges following better-than-expected delivery report

Tesla saw some positive momentum during trading hours as it reported its deliveries for Q2.

Tesla (NASDAQ: TSLA) surged over four percent on Wednesday morning after the company reported better-than-expected deliveries. The figure was nearly right on consensus estimates, as Wall Street predicted the company would deliver 385,000 cars in Q2.

Tesla reported that it delivered 384,122 vehicles in Q2. Many, including those inside the Tesla community, were anticipating deliveries in the 340,000 to 360,000 range, while Wall Street seemed to get it just right.


Despite Tesla meeting consensus estimates, there were real concerns about what the company would report for Q2.

Reported brief pauses in production at Gigafactory Texas and the global ramp of the new Model Y configuration were expected to create headwinds for the EV maker during the quarter.

At noon on the East Coast, Tesla shares were up about 4.5 percent.

Tesla is expected to roughly match the number of deliveries it completed in each of the past two years.

Annual deliveries have hovered around the 1.8 million mark since 2023, and the company appears to be on pace to match that once again. Early last year, Tesla said that annual growth would be “notably lower” than expected due to its development of a new vehicle platform, which will enable more affordable models to be offered to the public.

These cars are expected to be unveiled at some point this year, as Tesla said they were “on track” to be produced in the first half of the year. Tesla has yet to unveil these vehicle designs to the public.

Dan Ives of Wedbush said in a note to investors this morning that the company’s rebound in China in June reflects good things to come, especially given the Model Y and its ramp across the world.

He also said that Musk’s commitment to the company and return from politics played a major role in the company’s performance in Q2:

“If Musk continues to lead and remain in the driver’s seat, we believe Tesla is on a path to an accelerated growth path over the coming years with deliveries expected to ramp in the back-half of 2025 following the Model Y refresh cycle.”

Ives maintained his $500 price target and the ‘Outperform’ rating he held on the stock:

“Tesla’s future is in many ways the brightest it’s ever been in our view given autonomous, FSD, robotics, and many other technology innovations now on the horizon with 90% of the valuation being driven by autonomous and robotics over the coming years but Musk needs to focus on driving Tesla and not putting his political views first. We maintain our OUTPERFORM and $500 PT.”

Moving forward, investors will look to see some gradual growth over the next few quarters. At worst, Tesla should look to match 2023 and 2024 full-year delivery figures, which could be beaten if the automaker can offer those affordable models by the end of the year.
