Tesla’s AI Day is here. In a few minutes, Tesla watchers will see executives like Elon Musk provide an in-depth discussion of the company’s AI efforts, not just in its automotive business but in its energy business and beyond. AI Day promises to be yet another tour de force of technical information from the electric car manufacturer. Thus, it is no surprise that there is a lot of excitement from the EV community heading into the event.

Tesla has kept the details of AI Day behind closed doors, so the specifics of the actual event are scarce. That being said, an AI Day agenda sent to attendees indicated that they could expect to hear Elon Musk speak during a live keynote, speak with Andrej Karpathy and the rest of Tesla’s AI engineers, and participate in breakout sessions with the teams behind Tesla’s AI development.

Similar to Autonomy Day and Battery Day, Teslarati will be following along with AI Day’s discussions to provide you with an updated account of the highly anticipated event. Please refresh this page from time to time, as notes, details, and quotes from Elon Musk’s keynote and the discussions that follow will be posted here.

Simon 19:40 PT – A question about the use cases for the Tesla Bot is asked. Musk notes that the Tesla Bot would start with boring, repetitive work, or work that people would least like to do.

Simon 19:25 PT – A question is asked about AI and manufacturing, and how it potentially relates to the “Alien Dreadnought” concept. Musk notes that most of Tesla’s manufacturing today is already automated. He also notes that humanoid robots would be developed either way, so it would be great for Tesla to take on this project, and to do it safely. “We’re making the pieces that would be useful for a humanoid robot, so we should probably make it. If we don’t, someone else will, and we want to make sure it’s safe,” Musk said.

Simon 19:15 PT – And the Q&A starts. First question involves open-sourcing Tesla’s innovations. Musk notes that it’s pretty expensive to develop all this tech, so he’s not sure how things could be open-sourced. But if other car companies would like to license the system, that could be done.

Simon 19:11 PT – There will really be a “Tesla Bot.” It would be built by humans, for humans. It would be friendly, and it would eliminate dangerous, repetitive, boring tasks. This is still pretty darn unreal. It uses the systems that are currently being developed for the company’s vehicles. “There will be profound applications for the economy,” Musk said.

Simon 19:06 PT – New products! A whole Tesla suit?! After a fun skit, Elon says the “Tesla Bot” would eventually be real.

Simon 19:00 PT – What is crazy is that Dojo is not even done. This is just what it is today. Dojo is still evolving, and it is going to be way more powerful in the future. Now, it’s Elon Musk’s turn. What’s next for Tesla beyond vehicles?

Simon 19:00 PT – Venkataramanan teases the ExaPOD. Yet another revolutionary solution from Tesla. With all this, it is evident that Tesla’s approach to autonomy is on a whole other level. It would not be surprising if it takes Wall Street and the market a few days to fully absorb what is happening here.

Simon 18:55 PT – The specs of Dojo are insane. Behind its beastly specs, it seems that Dojo’s full potential lies in the fact that all this power is being used to do one thing: to make autonomous cars possible. Dojo is a pure learning machine, with more than 500,000 training nodes being built together. Nine petaflops of compute per tile, 36 terabytes per second of off-tile bandwidth. But this is just the tip of the iceberg for Dojo.

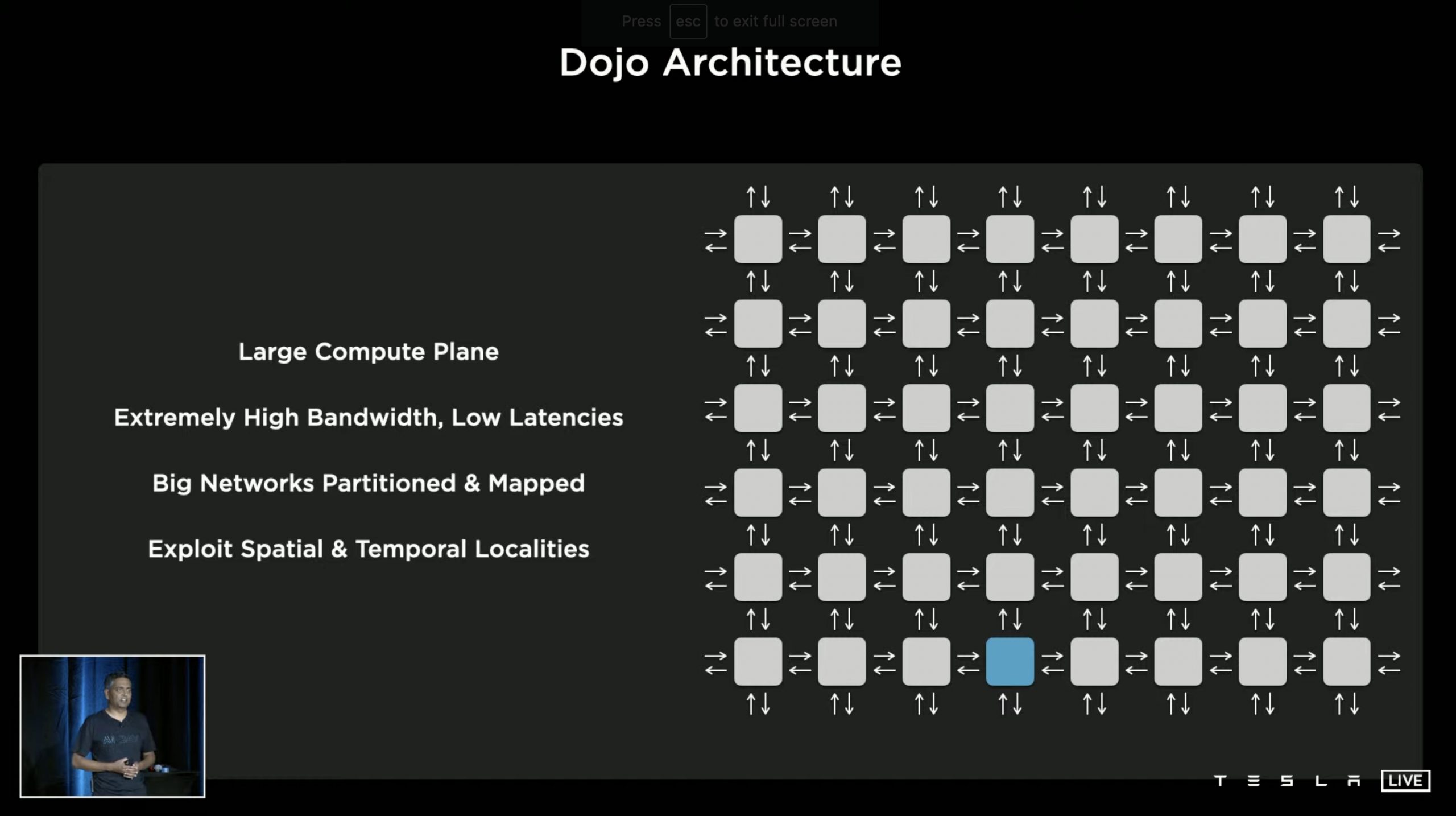

Simon 18:50 PT – Ganesh Venkataramanan, Project Dojo’s lead, takes the stage. He states that Elon Musk wanted a super-fast training computer to train Autopilot. And thus Project Dojo was born. Dojo is a distributed compute architecture connected by network fabric. It also has a large compute plane, extremely high bandwidth with low latencies, and big networks that are partitioned and mapped, to name a few.

Simon 18:45 PT – Milan Kovac, Tesla’s Director of Autopilot Engineering, takes the stage. He notes that he will discuss how neural networks are run in the company’s cars. He also notes that Tesla’s systems require supercomputers.

Simon 18:40 PT – Ashok notes that simulations have helped Tesla a lot already. They have, for example, helped the company improve pedestrian, bicycle, and vehicle detection and kinematics. The networks in the vehicles were trained on 371 million simulated images and 480 million cuboids.

Simon 18:35 PT – Ashok notes that these strategies ultimately helped Tesla retire radar from its FSD and Autopilot suite and adopt a pure vision model. A comparison between a radar+camera system and pure vision shows just how much more refined the company’s current strategy is. The executive also touched on how simulations help Tesla develop its self-driving systems. He states that simulations help when data is difficult to source, difficult to label, or in a closed loop.

Simon 18:30 PT – Ashok returns to discuss Auto Labeling. Simply put, there is so much labeling that needs to be done that it’s impossible to do manually. He shows how roads and other items on the road are “reconstructed” from a single car that’s driving. This effectively allows Tesla to label data much faster, while allowing vehicles to navigate safely and accurately even when occlusions are present.

Simon 18:25 PT – Karpathy returns to talk about manual labeling. He notes that manual labeling that’s outsourced to third-party firms is not optimal. Thus, in the spirit of vertical integration, Tesla opted to establish its own labeling team. Karpathy notes that in the beginning, Tesla was using 2D image labeling. Eventually, Tesla transitioned to 4D labeling, where the company could label in vector space. But even this was not enough, and thus, auto labeling was developed.

Simon 18:23 PT – The executive states that traffic behavior is extremely complicated, especially in several parts of the world. Ashok notes that this is partly illustrated by parking lots and how complex they actually are. Summoning a car from a parking lot, for example, used to take 400k nodes to navigate, resulting in a system whose performance left much to be desired.

Simon 18:18 PT – Ashok notes that when driving alongside other cars, Autopilot must not only plan how it would drive but also consider how other cars would operate. He shows a video of a Tesla navigating a road and dealing with multiple vehicles to demonstrate this point.

Simon 18:15 PT – Director of Autopilot Software Ashok Elluswamy takes the stage. He starts off by discussing some key problems in planning in both non-convex and high-dimensional action spaces. He also shows Tesla’s solution to these issues, a “Hybrid Planning System.” He demonstrates this by showing how Autopilot performs a lane change.

Simon 18:10 PT – Karpathy’s discussion notes that today, Tesla’s FSD strategy is a lot more cohesive. This is demonstrated by the fact that the company’s vehicles can effectively draw a map in real time as they drive. This is a massive difference compared to the pre-mapped strategies employed by rivals in both the automotive and software fields, like Super Cruise and Waymo.

To solve several problems encountered over the last few years with the previous suite, Tesla re-engineered their NN learning from the ground up and utilized a multi-head route, camera calibrations, caching, queues, and optimizations to streamline all tasks.

(heavily simplified) pic.twitter.com/LG2TRgjxip

— Teslascope (@teslascope) August 20, 2021

Simon 18:05 PT – The AI Director discusses how Tesla practically re-engineered its neural network learning from the ground up and utilized a multi-head route. This includes camera calibrations, caching, queues, and optimizations to streamline all tasks. Do note that this is an extremely simplified summary of Karpathy’s discussion so far.

Simon 18:00 PT – Karpathy covers more challenges that are involved in even the basics of perception. Needless to say, AI Day is quickly proving to be Tesla’s most technical event right off the bat. That said, multi-camera networks are amazing. They’re a ton of work, but they may very well be a silver bullet for Tesla’s predictive efforts.

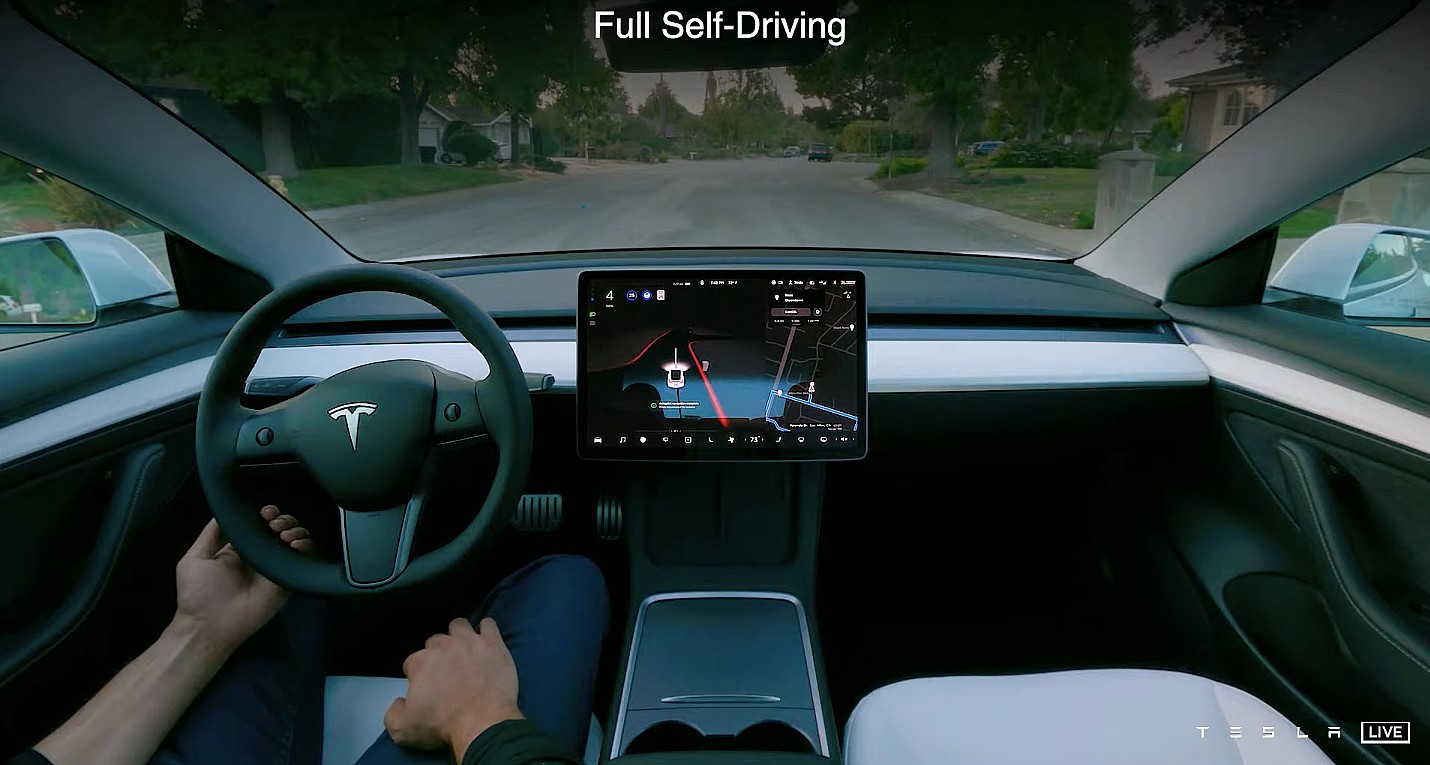

Simon 17:56 PT – Karpathy showcases a video of how Tesla used to process its image data in the past. He shows a popular video for FSD that has been shared in the past. He notes that while great, such a system proved to be inadequate, and this is something that Tesla learned when it launched Smart Summon. While per-camera detection is great, the vector space proves inadequate.

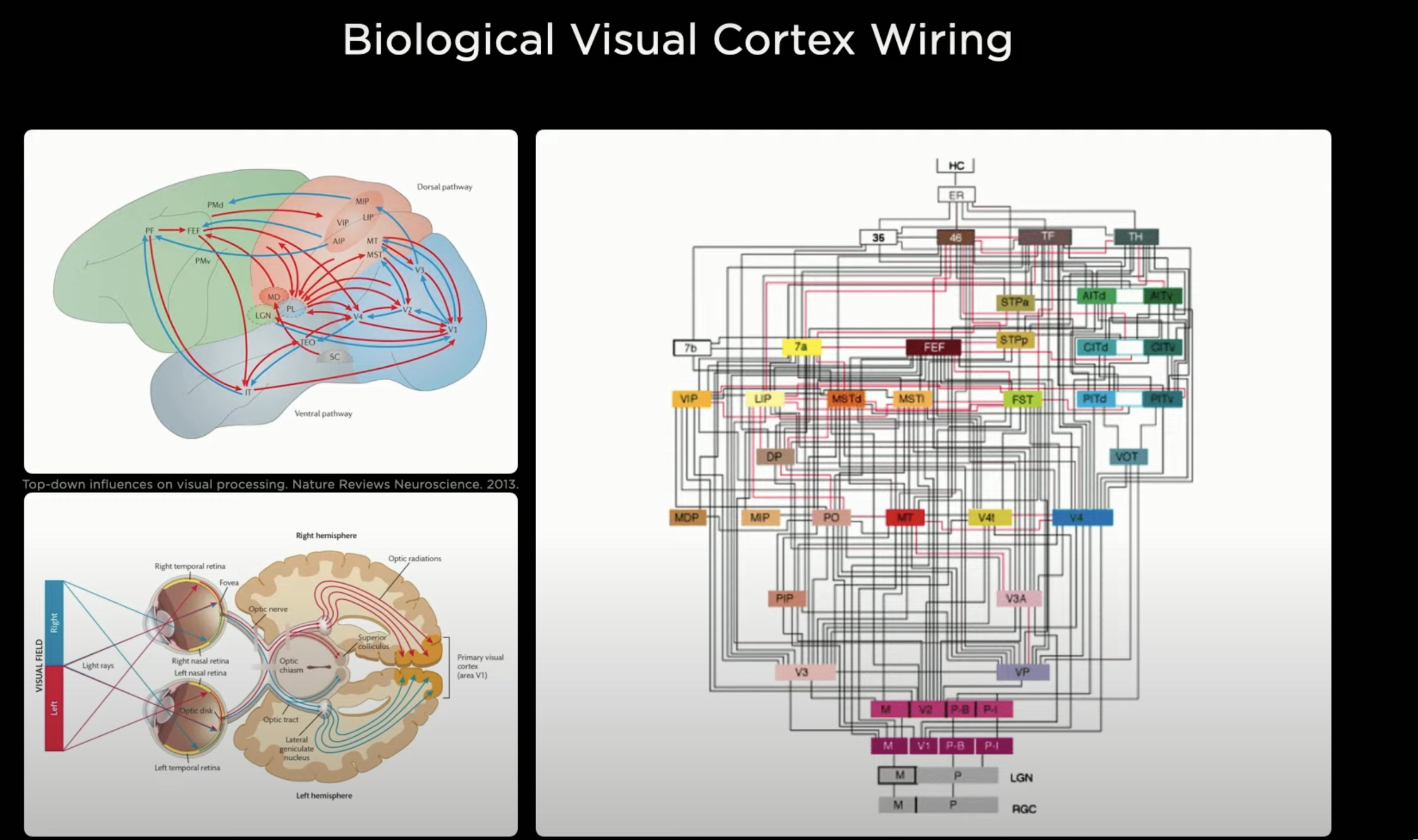

Simon 17:55 PT – Karpathy notes that when Tesla designs the visual cortex in its car, the company models it on how biological vision works in the eyes. He also touches on how Tesla’s visual processing strategies have evolved over the years, and how it is done today. The AI Director also touches on Tesla’s “HydraNets” and their multi-task learning capabilities.

Simon 17:51 PT – Karpathy starts off by discussing the visual component of Tesla’s AI, as characterized by the eight cameras used in the company’s vehicles. The AI director notes that AI could be considered like a biological being, and it’s built from the ground up, including its synthetic visual cortex.

Simon 17:48 PT – Elon Musk takes the stage. He apologizes for the event’s delay. He jokes that Tesla probably needs AI to solve these “technical difficulties.” The CEO highlights that AI Day is a recruitment event. He calls Tesla’s head of AI, Andrej Karpathy, to the stage. There’s no better person to discuss AI.

Simon 17:45 PT – We’re here watching the AI Day FSD preview video and we can’t help but notice that… are those Waypoints?!

Simon 17:38 PT – Looks like we’ve got an Elon sighting! And a preview video too! Here we go, folks!

We’ve got an Elon sighting

— Rob Maurer (@TeslaPodcast) August 20, 2021

Simon 17:30 PT – A 30-minute delay. We haven’t seen this much delay in quite a bit.

Simon 17:20 PT – It’s a good thing that Tesla has great taste in music. Did Grimes mix this track?

Simon 17:15 PT – We’re 15 minutes in. “Elon Time” is going strong on AI Day. To be honest, though, this music would fit the “Rave Cave” in Giga Berlin this coming October.

Simon 17:10 PT – A good thing to keep in mind is that AI Day is a recruitment event. Some food for thought just in case the discussions take a turn for the extremely technical. AI Day is designed to attract individuals who speak Tesla’s language in its rawest form. We’re just fortunate enough to come along for the ride.

Tesla Board Member Hiro Mizuno sums it up in this tweet pretty well.

Anybody passionate about real world AI !! https://t.co/ydaWQlkE4O

— HIRO MIZUNO (@hiromichimizuno) August 20, 2021

Simon 17:05 PT – I guess AI Day is starting on “Elon Time?” We’re on to the next track of chill music.

Simon 17:00 PT – And with 5 p.m. PT here, the music is officially live on the AI Day live stream. Looks like we’re in for a bit of a wait. Wonder how many minutes it will take before it starts? Gotta love this chill music though.

Simon 16:58 PT – While waiting, I can’t help but think that a ton of TSLA bears and Wall Street would likely not understand the nuances of what Tesla would be discussing today. Will Tesla go three-for-three? It was certainly the case with Battery Day and Autonomy Day.

Made it pic.twitter.com/aAWqxgf0bP

— Johnna (@JohnnaCrider1) August 19, 2021

Simon 16:55 PT – T-minus 5 minutes. Some attendees of AI Day are now posting some photos on Twitter, but it seems like photos and videos are not allowed at the actual venue of the event. Pretty much expected, I guess.

Simon 16:50 PT – Greetings, everyone, and welcome to another Live Blog. This is Tesla’s most technical event yet, so I expect this one to go extremely in-depth on the company’s AI efforts and the technology behind them. We’re pretty excited.

Don’t hesitate to contact us with news tips. Just send a message to tips@teslarati.com to give us a heads up.

News

Tesla wins another award critics will absolutely despise

Tesla just won another award that critics will absolutely despise, as it has been recognized once again as the company with the most sustainable supply chain.

Tesla has once again proven its critics wrong, securing the number one spot on the 2026 Lead the Charge Auto Supply Chain Leaderboard for the second consecutive year, Lead the Charge rankings show.

NEWS: Tesla ranked 1st on supply chain sustainability in the 2026 Lead the Charge auto/EV supply chain scorecard.

“@Tesla remains the top performing automaker of the Leaderboard for the second year running, and increased its overall score by 6 percentage points, while Ford only… pic.twitter.com/nAgGOIrGFS

— Sawyer Merritt (@SawyerMerritt) March 4, 2026

This independent ranking, produced by a coalition of environmental, human rights, and investor groups including the Sierra Club, Transport & Environment, and others, evaluates 18 major automakers on their efforts to build equitable, sustainable, and fossil-free supply chains for electric vehicles.

Tesla earned an overall score of 49 percent, up 6 percentage points from the previous year, widening its lead over second-place Ford (45 percent, up 2 points) to a commanding 4-percentage-point gap. The company also excelled in the Fossil Free & Environment category with a 50 percent score, reflecting strong progress in reducing emissions and decarbonizing operations.

Perhaps the most impressive achievement came in the batteries subsection, where Tesla posted a massive +20-point jump to reach 51 percent, becoming the first automaker ever to surpass 50 percent in this critical area.

Tesla achieved this milestone through transparency, fully disclosing Scope 3 emissions breakdowns for battery cell production and key materials like lithium, nickel, cobalt, and graphite.

The company also requires suppliers to conduct due diligence aligned with OECD guidelines on responsible sourcing, which it has mentioned in past Impact Reports.

While Tesla leads comfortably in climate and environmental performance, it scores 48 percent in human rights and responsible sourcing, slightly behind Ford’s 49 percent.

The company made notable gains in workers’ rights remedies, but has room to improve on issues like Indigenous Peoples’ rights.

Overall, the leaderboard highlights that a core group of leaders, Tesla, Ford, Volvo, Mercedes, and Volkswagen, are advancing twice as fast as their peers, proving that cleaner, more ethical EV supply chains are not just possible but already underway.

For Tesla detractors who claim EVs aren’t truly green or that the company cuts corners, this recognition from sustainability-focused NGOs delivers a powerful rebuttal.

Tesla’s vertical integration, direct supplier contracts, low-carbon material agreements (like its North American aluminum deal with emissions under 2kg CO₂e per kg), and raw materials reporting continue to set the industry standard.

As the world races toward electrification, Tesla isn’t just building cars; it’s building a more responsible future.

News

Tesla Full Self-Driving likely to expand to yet another Asian country

Tesla Full Self-Driving is likely to expand to yet another Asian country, with one market appearing primed to receive the suite for the first time.

The launch of Full Self-Driving in yet another country this year would be a major breakthrough for Tesla as it continues to expand the driver-assistance program across the world. Bureaucratic red tape has held up a lot of its efforts, but things are looking up in some regions.

Tesla is poised to transform Japan’s roads with Full Self-Driving (FSD) technology by 2026.

Richi Hashimoto, president of Tesla’s Japanese subsidiary, announced the ambitious timeline, building on successful employee test drives that began in 2025 and earned positive media reviews. Test drives, initially limited to the Model 3 since August 2025, expanded to the Model Y on March 5.

Once regulators approve, Over-the-Air (OTA) software updates could activate FSD across roughly 40,000 Teslas already on Japanese roads. Japan’s orderly traffic and strict safety culture make it an ideal testing ground for autonomous driving.

Hashimoto said:

“We are aiming for implementation in 2026. [We are] doing everything in our power [to achieve this].”

The push aligns with Hashimoto’s leadership, which has been credited for Tesla’s sales turnaround.

In 2025, Tesla delivered a record 10,600 vehicles in Japan — a nearly 90% jump from the prior year and the first time exceeding 10,000 units annually.

BREAKING 🇯🇵 FSD IS LIKELY LAUNCHING IN JAPAN IN 2026 🚨

Richi Hashimoto, President of Tesla’s Japanese subsidiary, stated: “We are aiming for implementation in 2026” and added that they are “doing everything in our power” to achieve this 🔥

Test drives in Japan began in August… pic.twitter.com/jkkrJLszXN

— Ming (@tslaming) March 5, 2026

The strategy shifted from online-only sales to adding 29 physical showrooms in high-traffic malls, plus staff training and attractive financing offers launched in January 2026. Tesla also plans to expand its Supercharger network to over 1,000 points by 2027, boosting accessibility.

This Japanese momentum reflects Tesla’s broader international expansion. In Europe, Giga Berlin produced more than 200,000 vehicles in 2025 despite a temporary halt, supplying over 30 markets with plans for sequential production growth in 2026 and battery cell manufacturing by 2027.

While regional EV sales faced headwinds, the factory remains a cornerstone for Model Y deliveries across the continent.

In Asia, Giga Shanghai continues to be recognized as Tesla’s powerhouse. China, the company’s largest market, saw January 2026 deliveries from the plant rise 9 percent year-over-year to 69,129 units, with affordable new models expected later this year.

FSD advancements, already progressing in the U.S. and South Korea, are slated for Europe and further Asian rollout, complementing plans to expand Cybercab and Optimus to new markets as well.

With OTA-enabled autonomy on the horizon and retail strategies paying dividends, Tesla is strengthening its footprint from Tokyo showrooms to Berlin assembly lines and Shanghai exports. As Hashimoto continues to push Tesla forward in Japan, the company’s global vision for sustainable, self-driving mobility gains traction across Europe and Asia.

News

Tesla ships out update that brings massive change to two big features

Tesla has shipped out an update for its vehicles that was prompted specifically by a California lawsuit, which threatened the company’s ability to sell cars because of how it named its driver assistance suite.

Tesla shipped out Software Update 2026.2.9 starting last week; we received it already, and it only brings a few minor changes, mostly related to how things are referenced.

“This change only updates the name of certain features and text in your vehicle,” the company wrote in Release Notes for the update, “and does not change the way your features behave.”

The following changes came to Tesla vehicles in the update:

- Navigate on Autopilot has now been renamed to Navigate on Autosteer

- FSD Computer has been renamed to AI Computer

Tesla faced a 30-day sales suspension in California after the state’s Department of Motor Vehicles stated the company had to come into compliance regarding the marketing of its automated driving features.

The agency confirmed on February 18 that Tesla had taken a “corrective action” to resolve the issue. That corrective action was renaming certain parts of its ADAS.

Tesla discontinued its standalone Autopilot offering in January and ramped up the marketing of Full Self-Driving Supervised. Tesla had said on X that the issue with naming “was a ‘consumer protection’ order about the use of the term ‘Autopilot’ in a case where not one single customer came forward to say there’s a problem.”

This was a “consumer protection” order about the use of the term “Autopilot” in a case where not one single customer came forward to say there’s a problem.

Sales in California will continue uninterrupted.

— Tesla North America (@tesla_na) December 17, 2025

It is now compliant with the wishes of the California DMV, and we’re all dealing with it now.

This was the first major dispute over the terminology of Full Self-Driving, though the naming has also drawn scrutiny at the federal level, where some government officials have claimed the suite has “deceptive” names. Former Transportation Secretary Pete Buttigieg was one of the federal officials who took issue with the names “Autopilot” and “Full Self-Driving.”

Tesla sued the California DMV over the ruling last week.